The Ubuntu 22.10 release notes brought one piece of unpleasant news: “The option to install using zfs as a file system and encryption has been disabled due to a bug”. The official recommendation is to “simply” install Ubuntu 22.04 and upgrade from there. However, if you are willing to go through a lot of manual steps, that’s not necessary.

Those familiar with my previous installation guides will note two things. First, the steps below use LUKS encryption instead of native ZFS encryption. I’ve gone back and forth on this one as I do like native ZFS encryption, but in setups with hibernation enabled (and this will be one of those), combining LUKS-encrypted swap with natively encrypted ZFS would require the user to type the password twice. Not a great user experience.

Second, my personal preferences will “leak through”. This guide will clearly show my dislike of the snap system and my use of a far-too-big swap partition to make hibernation work under even the worst-case scenario. Your preferences might vary, so adjust the guide as necessary. The important part is the disk and boot partition setup; everything else is just extra fluff.

Without further ado, let’s proceed with the install.

After booting into the Ubuntu desktop installer (via the “Try Ubuntu” option), we want to open a terminal. Since all further commands need root privileges, we can start with that.

sudo -i

The very first step should be setting up a few variables: disk, hostname, and username. This way we can use them going forward and avoid accidental mistakes. Just make sure to replace these values with ones appropriate for your system. I like to use upper case for the ZFS pool name, as that’s what will appear in the password prompt. It just looks nicer and ZFS doesn’t care either way.

DISK=/dev/disk/by-id/<diskid>

HOST=<hostname>

USER=<username>
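Because every later command trusts these three variables, a quick sanity check can catch a typo before anything is wiped. The sketch below is not part of the original procedure: `check_install_vars` is a hypothetical helper name, and its rules (a by-id disk path, a hostname made of letters, digits, and hyphens, a lowercase user name) are assumptions you may want to loosen.

```shell
# Hypothetical helper: sanity-check the three installation variables.
# $1 = disk path, $2 = hostname, $3 = user name
# Returns 0 when all look plausible, 1 otherwise.
check_install_vars() {
    disk=$1 host=$2 user=$3
    case $disk in
        /dev/disk/by-id/?*) ;;
        *) echo "DISK should be a /dev/disk/by-id/ path: '$disk'" >&2; return 1 ;;
    esac
    case $host in
        # empty, contains a character outside letters/digits/hyphen, or starts with a hyphen
        ''|*[!A-Za-z0-9-]*|-*) echo "HOST looks invalid: '$host'" >&2; return 1 ;;
    esac
    case $user in
        # empty, contains an unexpected character, or does not start with a letter/underscore
        ''|*[!a-z0-9_-]*|[!a-z_]*) echo "USER looks invalid: '$user'" >&2; return 1 ;;
    esac
    return 0
}
```

It could be called as `check_install_vars "$DISK" "$HOST" "$USER"` right after setting the variables, continuing only if it succeeds.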

For my setup I want 4 partitions. The first two are unencrypted and in charge of booting. While I love encryption, I decided not to encrypt the boot partition to make my life easier: its password prompt cannot be integrated with the later data password prompt, so you would have to type the password twice. Both the swap and ZFS partitions are fully encrypted.

Also, my swap size is excessive: I have 64 GB of RAM and I wanted hibernation to work under the worst of circumstances (i.e., when RAM is full). Hibernation usually works with a much smaller partition, but I wanted to be sure and my disk was big enough to accommodate it.
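If you want to derive a worst-case swap size for a different machine, the arithmetic can be sketched as follows. The rule used here (RAM rounded up to a whole GiB plus a 1 GiB margin) is only an assumption that happens to reproduce the 64 GB to 65G choice; `suggest_swap_gib` is a hypothetical helper name. Keep in mind that `MemTotal` in /proc/meminfo reports slightly less than the installed RAM.

```shell
# Suggest a swap size in GiB: RAM rounded up to a whole GiB, plus a margin.
# $1 = RAM in KiB (the unit /proc/meminfo labels "kB")
# $2 = margin in GiB (assumed default: 1)
suggest_swap_gib() {
    ram_kib=$1
    margin_gib=${2:-1}
    echo $(( (ram_kib + 1048575) / 1048576 + margin_gib ))
}

# Example: use the RAM size of the running live system, when available.
if [ -r /proc/meminfo ]; then
    live_ram_kib=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
    echo "Suggested swap: $(suggest_swap_gib "$live_ram_kib")G"
fi
```

The resulting number would replace the `+65G` in the swap partition command.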

Lastly, while blkdiscard does a nice job of removing old data from the disk, I would always recommend also running dd if=/dev/urandom of=$DISK bs=1M status=progress if your disk was not encrypted before.

blkdiscard -f $DISK

sgdisk --zap-all $DISK

sgdisk -n1:1M:+127M -t1:EF00 -c1:EFI $DISK

sgdisk -n2:0:+896M -t2:8300 -c2:Boot $DISK

sgdisk -n3:0:+65G -t3:8200 -c3:Swap $DISK

sgdisk -n4:0:0 -t4:8309 -c4:ZFS $DISK

sgdisk --print $DISK
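One consequence of the sizes above can be worked out as simple arithmetic. The sketch assumes sgdisk places each partition directly after the previous one, which is what a start value of `0` requests on a freshly zapped disk; `partition_layout` is a hypothetical name used only for this illustration.

```shell
# Print where each region starts and ends, in MiB, assuming every
# partition directly follows the previous one.
partition_layout() {
    offset=0
    for entry in gap:1 EFI:127 Boot:896 Swap:$((65 * 1024)); do
        name=${entry%%:*}    # text before the colon
        size=${entry#*:}     # size in MiB after the colon
        echo "$name: starts at $offset MiB, ends at $((offset + size)) MiB"
        offset=$((offset + size))
    done
}

partition_layout
```

The EFI and boot partitions together end at exactly 1024 MiB, so swap begins on a 1 GiB boundary and the ZFS partition takes whatever is left.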

Once the partitions are created, we want to set up our LUKS encryption.

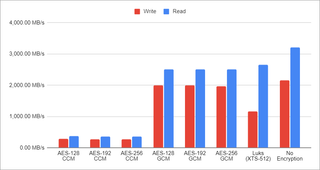

cryptsetup luksFormat -q --type luks2 \
    --cipher aes-xts-plain64 --key-size 512 \
    --pbkdf argon2i $DISK-part4

cryptsetup luksFormat -q --type luks2 \
    --cipher aes-xts-plain64 --key-size 512 \
    --pbkdf argon2i $DISK-part3

Since creating an encrypted partition doesn’t open it, we need to do that as a separate step. Notice I use the host name as the name of the main data partition.

cryptsetup luksOpen $DISK-part4 $HOST

cryptsetup luksOpen $DISK-part3 swap

Finally, we can set up our ZFS pool, with an optional step of setting the quota to roughly 80% of disk capacity. Adjust the exact value as needed.

zpool create -o ashift=12 -o autotrim=on \
    -O compression=lz4 -O normalization=formD \
    -O acltype=posixacl -O xattr=sa -O dnodesize=auto -O atime=off \
    -O canmount=off -O mountpoint=none -R /mnt/install ${HOST^} /dev/mapper/$HOST

zfs set quota=1.5T ${HOST^}
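To pick the quota for a different disk size, the 80% figure can be computed rather than guessed. This is a minimal sketch: `quota_for` is a hypothetical helper, and rounding down to whole GiB is an assumption made to keep the value simple.

```shell
# Print a percentage of a byte count as whole GiB with a "G" suffix.
# $1 = size in bytes, $2 = percentage (assumed default: 80)
quota_for() {
    bytes=$1
    percent=${2:-80}
    # Divide by 100 first so the multiplication cannot overflow on large disks.
    echo "$(( bytes / 100 * percent / 1073741824 ))G"
}

# Example: a nominal 2 TB disk (2000398934016 bytes).
quota_for 2000398934016
```

On the live system the byte count could come from `blockdev --getsize64 /dev/mapper/$HOST`, and the printed value would take the place of `1.5T` in the `zfs set quota` command.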

I used to be a fan of using just a main dataset for everything, but these days I use the more conventional “separate root dataset” approach.

zfs create -o canmount=noauto -o mountpoint=/ ${HOST^}/Root

zfs mount ${HOST^}/Root

And a separate home dataset will not be forgotten.

zfs create -o canmount=noauto -o mountpoint=/home ${HOST^}/Home

zfs mount ${HOST^}/Home

zfs set canmount=on ${HOST^}/Home

With all datasets in place, we can finish setting the main dataset properties.

zfs set devices=off ${HOST^}

Now it’s time to format swap.

mkswap /dev/mapper/swap

And then boot partition.

yes | mkfs.ext4 $DISK-part2

mkdir /mnt/install/boot

mount $DISK-part2 /mnt/install/boot

And finally EFI partition.

mkfs.msdos -F 32 -n EFI -i 4d65646f $DISK-part1

mkdir /mnt/install/boot/efi

mount $DISK-part1 /mnt/install/boot/efi

At this time, I also like to disable IPv6 as I’ve noticed that on some misconfigured IPv6 networks it takes ages to download packages. This step is both temporary (i.e., IPv6 is disabled only during installation) and fully optional.

sudo sysctl -w net.ipv6.conf.all.disable_ipv6=1

sudo sysctl -w net.ipv6.conf.default.disable_ipv6=1

sudo sysctl -w net.ipv6.conf.lo.disable_ipv6=1

To start the fun we need the debootstrap package. From this step on, you must be connected to the Internet.

apt update && apt install --yes debootstrap

Bootstrapping Ubuntu on the newly created pool comes next. This will take a while.

debootstrap $(basename `ls -d /cdrom/dists/*/ | grep -v stable | head -1`) /mnt/install/
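The nested command above picks the release codename from the live medium: it lists the directories under /cdrom/dists, drops the `stable` and `unstable` aliases, and keeps the first remaining name. A more explicit sketch of the same idea, with `detect_codename` as a hypothetical helper name, could look like this:

```shell
# Print the release codename found under a "dists" directory,
# skipping any entry whose name contains "stable".
# $1 = dists directory (default: /cdrom/dists)
detect_codename() {
    dists=${1:-/cdrom/dists}
    for dir in "$dists"/*/; do
        [ -d "$dir" ] || continue        # also skips an unmatched glob
        name=$(basename "$dir")
        case $name in
            *stable*) continue ;;        # "stable" and "unstable" aliases
        esac
        echo "$name"
        return 0
    done
    echo "no release directory found in $dists" >&2
    return 1
}
```

With it, the bootstrap call would read `debootstrap "$(detect_codename)" /mnt/install/`, and a missing live medium produces an error message instead of an empty argument.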

We can use our live system to update a few files on our new installation.

echo $HOST > /mnt/install/etc/hostname

sed "s/ubuntu/$HOST/" /etc/hosts > /mnt/install/etc/hosts

sed '/cdrom/d' /etc/apt/sources.list > /mnt/install/etc/apt/sources.list

cp /etc/netplan/*.yaml /mnt/install/etc/netplan/

If you are installing via WiFi, you might as well copy your wireless credentials. Don’t worry if this returns errors - that just means you are not using wireless.

mkdir -p /mnt/install/etc/NetworkManager/system-connections/

cp /etc/NetworkManager/system-connections/* /mnt/install/etc/NetworkManager/system-connections/

At last, we’re ready to “chroot” into our new system.

mount --rbind /dev /mnt/install/dev

mount --rbind /proc /mnt/install/proc

mount --rbind /sys /mnt/install/sys

chroot /mnt/install \
    /usr/bin/env DISK=$DISK HOST=$HOST USER=$USER \
    bash --login

With our newly installed system running, let’s not forget to set up locale and time zone.

locale-gen --purge "en_US.UTF-8"

update-locale LANG=en_US.UTF-8 LANGUAGE=en_US

dpkg-reconfigure --frontend noninteractive locales

dpkg-reconfigure tzdata

Now we’re ready to onboard the latest Linux image.

apt update

apt install --yes --no-install-recommends linux-image-generic linux-headers-generic

Followed by the boot environment packages.

apt install --yes zfs-initramfs cryptsetup keyutils grub-efi-amd64-signed shim-signed

Now it’s time to set up crypttab so our encrypted partitions are decrypted on boot.

echo "$HOST UUID=$(blkid -s UUID -o value $DISK-part4) none \
luks,discard,initramfs,keyscript=decrypt_keyctl" >> /etc/crypttab

echo "swap UUID=$(blkid -s UUID -o value $DISK-part3) none \
swap,luks,discard,initramfs,keyscript=decrypt_keyctl" >> /etc/crypttab

cat /etc/crypttab
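Each crypttab entry written above has four whitespace-separated fields: mapping name, source device, key file (`none` here), and options. A missing first field, for instance when `$HOST` is empty inside the chroot, is easy to overlook in the `cat` output. The following sketch shows the layout and a simple field-count check; both function names are hypothetical and the check is only a rough aid, not a full crypttab parser.

```shell
# Build one crypttab entry: <name> <source device> <key file> <options>
# $1 = mapping name, $2 = LUKS UUID, $3 = comma-separated options
crypttab_line() {
    printf '%s UUID=%s none %s\n' "$1" "$2" "$3"
}

# Report entries that do not have exactly four fields.
# $1 = crypttab file to check; returns non-zero when a bad line is found.
check_crypttab() {
    awk '
        /^[ \t]*(#|$)/ { next }    # skip comments and blank lines
        NF != 4 { printf "line %d has %d fields, expected 4\n", NR, NF; bad = 1 }
        END { exit bad + 0 }
    ' "$1"
}
```

Running `check_crypttab /etc/crypttab` inside the chroot should print nothing and succeed when both entries are complete.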

To mount all those partitions, we also need some fstab entries. The last entry is not strictly needed; I just like to add it to hide our LUKS-encrypted ZFS partition from the file manager.

echo "PARTUUID=$(blkid -s PARTUUID -o value $DISK-part2) \
/boot ext4 noatime,nofail,x-systemd.device-timeout=5s 0 1" >> /etc/fstab

echo "PARTUUID=$(blkid -s PARTUUID -o value $DISK-part1) \
/boot/efi vfat noatime,nofail,x-systemd.device-timeout=5s 0 1" >> /etc/fstab

echo "UUID=$(blkid -s UUID -o value /dev/mapper/swap) \
none swap defaults 0 0" >> /etc/fstab

echo "/dev/disk/by-uuid/$(blkid -s UUID -o value /dev/mapper/$HOST) \
none auto nosuid,nodev,nofail 0 0" >> /etc/fstab

cat /etc/fstab

If hibernation is desired, a few settings need to be added.

sed -i 's/.*AllowSuspend=.*/AllowSuspend=yes/' \
    /etc/systemd/sleep.conf

sed -i 's/.*AllowHibernation=.*/AllowHibernation=yes/' \
    /etc/systemd/sleep.conf

sed -i 's/.*AllowSuspendThenHibernate=.*/AllowSuspendThenHibernate=yes/' \
    /etc/systemd/sleep.conf

sed -i 's/.*HibernateDelaySec=.*/HibernateDelaySec=13min/' \
    /etc/systemd/sleep.conf
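Note that the sed commands above only rewrite lines that already exist (commented out or not); if a key is absent from the file, nothing changes. A slightly more defensive variant is sketched below. `set_conf_key` is a hypothetical helper, and appending a missing key to the end of the file assumes the last section in the file is the right one, which holds for a stock sleep.conf with its single [Sleep] section.

```shell
# Set KEY=VALUE in a systemd-style config file.
# Replaces an existing line (commented out or not); appends when none exists.
# $1 = file, $2 = key, $3 = value
set_conf_key() {
    file=$1 key=$2 value=$3
    if grep -q "^[#[:space:]]*$key=" "$file"; then
        tmp=$(mktemp)
        sed "s|^[#[:space:]]*$key=.*|$key=$value|" "$file" > "$tmp" &&
            cat "$tmp" > "$file"     # keep the original file's permissions
        rm -f "$tmp"
    else
        printf '%s=%s\n' "$key" "$value" >> "$file"
    fi
}
```

For example, `set_conf_key /etc/systemd/sleep.conf HibernateDelaySec 13min` has the same effect as the last sed command when the key is present and adds the line when it is not.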

Since this is a laptop setup, we can also configure the lid switch. I like to set it to suspend-then-hibernate.

sed -i 's/.*HandleLidSwitch=.*/HandleLidSwitch=suspend-then-hibernate/' \
    /etc/systemd/logind.conf

sed -i 's/.*HandleLidSwitchExternalPower=.*/HandleLidSwitchExternalPower=suspend-then-hibernate/' \
    /etc/systemd/logind.conf

Lastly, I adjust swappiness a bit.

echo "vm.swappiness=10" >> /etc/sysctl.conf

And then we can get GRUB going. Note that we also set up resuming from swap (hibernation support) here.

sed -i "s/^GRUB_CMDLINE_LINUX_DEFAULT.*/GRUB_CMDLINE_LINUX_DEFAULT=\"quiet splash \
nvme.noacpi=1 \
RESUME=UUID=$(blkid -s UUID -o value /dev/mapper/swap)\"/" \
    /etc/default/grub

update-grub

grub-install --target=x86_64-efi --efi-directory=/boot/efi --bootloader-id=Ubuntu \
    --recheck --no-floppy

update-initramfs -u -k all
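Since hibernation depends on the resume UUID in the GRUB configuration matching the opened swap device, it may be worth reading the value back before moving on. The sketch below only extracts the UUID from the defaults file; `grub_resume_uuid` is a hypothetical helper name.

```shell
# Print the swap UUID that the GRUB defaults file passes as RESUME=UUID=...
# $1 = path to the GRUB defaults file (default: /etc/default/grub)
grub_resume_uuid() {
    sed -n 's/^GRUB_CMDLINE_LINUX_DEFAULT=.*RESUME=UUID=\([0-9A-Fa-f-]*\).*/\1/p' \
        "${1:-/etc/default/grub}"
}
```

Inside the chroot, the output of `grub_resume_uuid` should be identical to that of `blkid -s UUID -o value /dev/mapper/swap`; an empty result means the sed command did not match the GRUB_CMDLINE_LINUX_DEFAULT line.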

The next step is to create the boot environment.

KERNEL=`ls /usr/lib/modules/ | cut -d/ -f1 | sed 's/linux-image-//'`

update-initramfs -c -k $KERNEL

Finally, we can install our desktop environment.

apt install --yes ubuntu-desktop-minimal

Once installation is done, I like to remove snap and banish it from ever being installed.

apt remove --yes snapd

echo 'Package: snapd' > /etc/apt/preferences.d/snapd

echo 'Pin: release *' >> /etc/apt/preferences.d/snapd

echo 'Pin-Priority: -1' >> /etc/apt/preferences.d/snapd

Since Firefox is only available as a snap package in the Ubuntu repositories, we can install it from the Mozilla team PPA instead.

add-apt-repository --yes ppa:mozillateam/ppa

cat << 'EOF' | sed 's/^ //' | tee /etc/apt/preferences.d/mozillateamppa
Package: firefox*
Pin: release o=LP-PPA-mozillateam
Pin-Priority: 501
EOF

apt update && apt install --yes firefox

While at it, I might as well get Chrome too.

cd /tmp

wget --inet4-only https://dl.google.com/linux/direct/google-chrome-stable_current_amd64.deb

apt install ./google-chrome-stable_current_amd64.deb

For the Framework Laptop I use here, we need one more adjustment because its audio needs special care (the Dell headset model option below). Note that owners of Gen12 boards need a few more adjustments.

echo "options snd-hda-intel model=dell-headset-multi" >> /etc/modprobe.d/alsa-base.conf

Of course, we need to have a user too.

adduser --disabled-password --gecos '' $USER

usermod -a -G adm,cdrom,dialout,dip,lpadmin,plugdev,sudo,tty $USER

echo "$USER ALL=NOPASSWD:ALL" > /etc/sudoers.d/$USER

passwd $USER

I like to add some extra packages and do one final upgrade before calling it done.

add-apt-repository --yes universe

apt update && apt dist-upgrade --yes

It took a while but we can finally exit our debootstrap environment.

exit

Let’s unmount all mounted partitions and get ZFS ready for the next boot.

umount /mnt/install/boot/efi

umount /mnt/install/boot

mount | grep -v zfs | tac | awk '/\/mnt/ {print $3}' | xargs -i{} umount -lf {}

zpool export -a
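The long unmount pipeline above takes the `mount` listing, leaves out ZFS entries (those are handled by the pool export), reverses the order, and unmounts everything under /mnt so nested mounts go before their parents. The selection step can be sketched on its own, which makes it possible to preview what would be unmounted. `list_unmount_order` is a hypothetical name, and this version is a bit stricter than the original: it matches the file system type and the mount point field rather than any text on the line.

```shell
# Read `mount` output on stdin and print non-ZFS mount points under a
# prefix, most recently mounted first.
# $1 = mount point prefix (default: /mnt)
list_unmount_order() {
    prefix=${1:-/mnt}
    awk -v prefix="$prefix" '
        / type zfs / { next }                        # left to zpool export
        index($3, prefix) == 1 { points[++n] = $3 }  # field 3 is the mount point
        END { for (i = n; i >= 1; i--) print points[i] }
    '
}
```

Before the export, `mount | list_unmount_order /mnt` would have shown the remaining bind mounts (dev, proc, sys and their children) in the order they need to be released.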

After a reboot, we should be done, and our new system should boot with a password prompt.

reboot

Once we log in, we need to update the boot image and test hibernation. If you see your desktop after waking the machine up, all is good.

sudo update-initramfs -u -k all

sudo systemctl hibernate

If you get Failed to hibernate system via logind: Sleep verb "hibernate" not supported, go into the BIOS and disable Secure Boot (the Enforce Secure Boot option). Unfortunately, Secure Boot and hibernation still don’t work together, but there is some work in progress to make it happen in the future. For now, you need to select one or the other.
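You can also check whether the kernel offers hibernation at all before trying it: /sys/power/state lists `disk` only when suspend-to-disk is available. A minimal sketch, with `supports_hibernation` as a hypothetical helper name:

```shell
# Return 0 when the given /sys/power/state contents include "disk",
# which is how the kernel advertises suspend-to-disk (hibernation).
# $1 = contents of /sys/power/state, e.g. "freeze mem disk"
supports_hibernation() {
    for state in $1; do
        [ "$state" = disk ] && return 0
    done
    return 1
}

# Example on a running system.
if [ -r /sys/power/state ]; then
    if supports_hibernation "$(cat /sys/power/state)"; then
        echo "kernel offers hibernation"
    else
        echo "kernel does not offer hibernation (Secure Boot is one possible cause)"
    fi
fi
```

If `disk` is missing from the list, no amount of swap or initramfs tuning will help until the underlying restriction is lifted.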

PS: If you already installed the system with Secure Boot enabled and hibernation is not working, run update-initramfs -c -k all and try again.

PPS: There are versions of this guide using the native ZFS encryption for other Ubuntu versions: 22.04, 21.10, and 20.04.

PPPS: For LUKS-based ZFS setup, check the following posts: 20.04, 19.10, 19.04, and 18.10.