I had an interesting thing said to me: “Did you know that modulo is much less efficient than bitwise comparison?” As someone who spent time I painstakingly went through all E-series resistor values to find those that would make my voltage divider be power of 2, I definitely saw that in action. But, that got me thinking. While 8-bit PIC microcontroller doesn’t have a hardware divider and thus any modulo is a torture, what about modern computers? How much slower do they get?

Quick search brought me a few hits and one conclusive StackOverflow answer. Searching a bit more brought me to another answer where they even did measurements. And difference was six-fold. But I was left with a bit of nagging as both of these were 10+ years old. What is a difference you might expect on a modern CPU? And, more importantly for me, what are differences in C#?

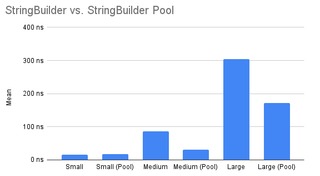

Well, I quickly ran some benchmarks and results are below.

| Test | Parallel | Mean | StDev |

|---|

| (i % 4) == 0 | No | 202.3 us | 0.24 us |

| (i & 0b11) == 0 | No | 201.9 us | 0.12 us |

| (i % 4) == 0 | CpuCount | 206.4 us | 7.78 us |

| (i & 0b11) == 0 | CpuCount | 196.5 us | 5.63 us |

| (i % 4) == 0 | CpuCount*2 | 563.9 us | 7.90 us |

| (i & 0b11) == 0 | CpuCount*2 | 573.9 us | 6.52 us |

My expectations were not only wrong but slightly confusing too.

As you can see from table above, I did 3 tests, single threaded, default parallel for, and then parallel for loop with CPU overcommitment. Single threaded test is where I saw what I expected but not in amount expected. Bitwise was quite consistently winning but by ridiculous margins. Unless I was doing something VERY specific, there is no chance I would care about the difference.

If we run test in Parallel.For, difference becomes slightly more obvious. And had I stayed just on those two, I would have said that assumption holds for modern CPUs too.

However, once I overcommitted CPU resources, suddely modulo was actually better. And that is something that’s hard to explain if we take assumption that modulo just uses divide to be true.

So, I decided to sneak a bit larger peek - into .NET CLR. And I discovered that bitwise operation was fully omitted while modulo operation was still there. However, then runtime smartly decided to remove both. Thus, I was testing nothing vs. almost nothing.

Ok, after I placed a strategic extra instructions to prevent optimization, I got the results below.

| Test | Parallel | Mean | StDev |

|---|

| (i % 4) == 0 | No | 203.1 us | 0.16 us |

| (i & 0b11) == 0 | No | 202.9 us | 0.06 us |

| (i % 4) == 0 | CpuCount | 1,848.6 us | 13.13 us |

| (i & 0b11) == 0 | CpuCount | 1,843.9 us | 6.76 us |

| (i % 4) == 0 | CpuCount*2 | 1,202.7 us | 7.32 us |

| (i & 0b11) == 0 | CpuCount*2 | 1,201.6 us | 6.75 us |

And yes, bitwise is indeed faster than modulo but by really low margin. The only thing new test “fixed” was that discrepancy in speed when you have too many threads.

Just to make extra sure that the compiler wasn’t doing “funny stuff”, I decompiled both to IL.

ldloc.1

ldc.i4.4

rem

ldc.i4.0

ceq

ldloc.1

ldc.i4.3

and

ldc.i4.0

ceq

Pretty much exactly the same, the only difference being usage of and for bitwise check while rem was used for modulo. In modern CPUs these two instructions seem pretty much equivalent. And when I say modern, I use that lossely since I saw the same going back a few generations .

Interestingly, just in case runtime changed those to the same code, I also checked modulo 10 just to confirm. That one was actually faster than modulo 4. That leads me to believe there are some nice optimizations happening here. But I still didn’t know if this was .NET framework or really something CPU does.

As a last resort, I went down to C and compiled it with -O0 -S. Unfortunately, even with -O0, if you use % 4, it will be converted for bitwise. Thus, I checked it against % 5.

Bitwise check compiled down to just 3 instructions (or just one if we exclude load and check).

movl -28(%rbp), %eax

andl $3, %eax

testl %eax, %eax

But modulo went crazy route.

movl -28(%rbp), %ecx

movslq %ecx, %rax

imulq $1717986919, %rax, %rax

shrq $32, %rax

movl %eax, %edx

sarl %edx

movl %ecx, %eax

sarl $31, %eax

subl %eax, %edx

movl %edx, %eax

sall $2, %eax

addl %edx, %eax

subl %eax, %ecx

movl %ecx, %edx

testl %edx, %edx

It converted division into multiplication and gets to remainder that way. All in all, quite impressive optimization. And yes, this occupies more memory so there are other consequences to the performance (e.g. uses more cache memory).

So, if you are really persistent with testint, difference does exist. It’s not six-fold but it can be noticeable.

At the end, do I care? Not really. Unless I am working on microcontrollers, I won’t stop using modulo where it makes sense. It makes intent much more clear and that, to me, is worth it. Even better, compilers will just take care of this for you.

So, while modulo is less efficient, stories of its slowness have been exaggerated a bit.

PS: If you want to run my tests on your system, files are available.