One reason I was excited about the Framework 16 was getting two NVMe slots. While I was slightly disappointed that the second slot can only take a 2230 M.2 SSD (instead of the full-size 2280), having two slots makes dual boot easier to deal with. Alternatively, for ZFS aficionados like myself, it allows for data mirroring.

With both M.2 slots filled, I decided to set up a UEFI-booted ZFS mirror with LUKS-based encryption. Yes, I know that ZFS has native encryption and that it might even have some performance advantages, but LUKS is what I went with here.

Another thing you’ll notice about my installation procedure is the number of manual steps. While you can use the normal installer and then add mirroring later, I generally like manual installation better as it gives me freedom to set up partitions as I like them.

Lastly, I am going with Ubuntu 23.10 which is not officially supported by Framework. I found it works for me, but your mileage may vary.

With that out of the way, we can start installation by booting from USB, going to terminal, and becoming root:

sudo -i

Now we can set up a few variables - disk, pool, host name, and user name. This way we can use them going forward and avoid accidental mistakes. Just make sure to replace these values with ones appropriate for your system.

DISK1=/dev/disk/by-id/<firstdiskid>

DISK2=/dev/disk/by-id/<seconddiskid>

POOL=mypool

HOST=myhost

USER=myuser
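Since every later command depends on these variables, a quick guard can catch an empty value or a placeholder that was never replaced. The sketch below is only an illustration; `require_vars` is a hypothetical helper name, not part of any tool used in this guide.

```shell
# Sketch: stop early if a required variable is empty or still holds a
# <placeholder> value. require_vars is a hypothetical helper name.
require_vars() {
    for name in "$@"; do
        # Indirect lookup of the variable whose name is stored in $name.
        eval "value=\${$name:-}"
        case "$value" in
            ''|*'<'*'>'*)
                echo "Variable $name is not set properly: '$value'" >&2
                return 1
                ;;
        esac
    done
}

# Usage, once the variables above are filled in:
# require_vars DISK1 DISK2 POOL HOST USER || exit 1
```

The check is deliberately simple: it only looks for an empty string or a leftover `<...>` marker, and does not verify that the disk paths exist.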

The general idea of my disk setup is to maximize the amount of space available for the pool with the minimum of supporting partitions. However, you will find these partitions are a bit larger than what you see elsewhere, especially when it comes to the boot and swap partitions. You can reduce either, but I found having them oversized is beneficial for future-proofing. Also, I intentionally make the EFI and boot partitions on the second disk share the partition UUIDs of those on the first. This will come in handy later. And yes, you need a swap partition no matter how much RAM you have (unless you really hate hibernation).

In either case, we can create them all:

DISK1_ENDSECTOR=$(( `blockdev --getsz $DISK1` / 2048 * 2048 - 2048 - 1 ))

DISK2_ENDSECTOR=$(( `blockdev --getsz $DISK2` / 2048 * 2048 - 2048 - 1 ))

blkdiscard -f $DISK1 2>/dev/null

sgdisk --zap-all $DISK1

sgdisk -n1:1M:+63M -t1:EF00 -c1:EFI $DISK1

sgdisk -n2:0:+1984M -t2:8300 -c2:Boot $DISK1

sgdisk -n3:0:+64G -t3:8200 -c3:Swap $DISK1

sgdisk -n4:0:$DISK1_ENDSECTOR -t4:8309 -c4:LUKS $DISK1

sgdisk --print $DISK1

PART1UUID=`blkid -s PARTUUID -o value $DISK1-part1`

PART2UUID=`blkid -s PARTUUID -o value $DISK1-part2`

blkdiscard -f $DISK2 2>/dev/null

sgdisk --zap-all $DISK2

sgdisk -n1:1M:+63M -t1:EF00 -c1:EFI -u1:$PART1UUID $DISK2

sgdisk -n2:0:+1984M -t2:8300 -c2:Boot -u2:$PART2UUID $DISK2

sgdisk -n3:0:+64G -t3:8200 -c3:Swap -u3:R $DISK2

sgdisk -n4:0:$DISK2_ENDSECTOR -t4:8309 -c4:LUKS -u4:R $DISK2

sgdisk --print $DISK2
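The end-sector arithmetic used for the LUKS partition above is easy to misread, so here is a small sketch of what it computes. `blockdev --getsz` reports the size in 512-byte sectors; the function name and the sample disk size are assumptions made for illustration.

```shell
# Sketch of the end-sector arithmetic; sizes are in 512-byte sectors,
# as reported by `blockdev --getsz`.
end_sector() {
    total=$1
    # Round down to a 1 MiB boundary (2048 sectors), keep the last
    # 1 MiB free, and subtract 1 because the end sector is inclusive.
    echo $(( total / 2048 * 2048 - 2048 - 1 ))
}

# Hypothetical disk with 976773168 sectors (roughly 500 GB):
end_sector 976773168    # prints 976771071
```

The result plus one is a multiple of 2048, so the partition ends exactly on a 1 MiB boundary.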

Since I use LUKS, I get to encrypt my ZFS partition now.

cryptsetup luksFormat -q --type luks2 \
--sector-size 4096 \
--perf-no_write_workqueue --perf-no_read_workqueue \
--cipher aes-xts-plain64 --key-size 256 \
--pbkdf argon2i $DISK1-part4

cryptsetup luksFormat -q --type luks2 \
--sector-size 4096 \
--perf-no_write_workqueue --perf-no_read_workqueue \
--cipher aes-xts-plain64 --key-size 256 \
--pbkdf argon2i $DISK2-part4

Of course, swap needs encrypting too. Here I use the same password as the one I used for data. Why? Because that way you get to unlock them both with a single password prompt. If you wish, you can use a different password instead.

cryptsetup luksFormat -q --type luks2 \
--sector-size 4096 \
--perf-no_write_workqueue --perf-no_read_workqueue \
--cipher aes-xts-plain64 --key-size 256 \
--pbkdf argon2i $DISK1-part3

cryptsetup luksFormat -q --type luks2 \
--sector-size 4096 \
--perf-no_write_workqueue --perf-no_read_workqueue \
--cipher aes-xts-plain64 --key-size 256 \
--pbkdf argon2i $DISK2-part3

Now we decrypt all those partitions so we can fill them with sweet, sweet data.

cryptsetup luksOpen $DISK1-part4 ${DISK1##*/}-part4

cryptsetup luksOpen $DISK2-part4 ${DISK2##*/}-part4

cryptsetup luksOpen $DISK1-part3 ${DISK1##*/}-part3

cryptsetup luksOpen $DISK2-part3 ${DISK2##*/}-part3
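The mapper names above rely on the `${DISK1##*/}` parameter expansion, which strips the directory part of the by-id path. A minimal sketch of that naming, using a made-up disk ID and a hypothetical `mapper_name` helper:

```shell
# Sketch: how the mapper names above are derived. The disk ID is a
# made-up example, not a real device.
mapper_name() {
    disk=$1
    part=$2
    # ${disk##*/} strips everything up to and including the last slash.
    echo "${disk##*/}-part${part}"
}

mapper_name /dev/disk/by-id/nvme-EXAMPLE_1234 4   # prints nvme-EXAMPLE_1234-part4
```

The opened device then shows up under /dev/mapper/ with that name, which is what the later pool and swap commands refer to.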

Finally, we can create our mirrored pool and any datasets you might want. I usually have a few more, but the root (/) and home (/home) datasets are the minimum:

zpool create -o ashift=12 -o autotrim=on \
-O compression=lz4 -O normalization=formD \
-O acltype=posixacl -O xattr=sa -O dnodesize=auto -O atime=off \
-O canmount=off -O mountpoint=none -R /mnt/install \
$POOL mirror /dev/mapper/${DISK1##*/}-part4 /dev/mapper/${DISK2##*/}-part4

zfs create -o canmount=noauto -o mountpoint=/ \
-o reservation=64G \
$POOL/System

zfs mount $POOL/System

zfs create -o canmount=noauto -o mountpoint=/home \
-o quota=128G \
$POOL/Home

zfs mount $POOL/Home

zfs set canmount=on $POOL/Home

zfs set devices=off $POOL

Now we can format swap partition:

mkswap /dev/mapper/${DISK1##*/}-part3

mkswap /dev/mapper/${DISK2##*/}-part3

Assuming UEFI boot, I like to have an ext4 boot partition here instead of the more common ZFS boot pool, as encryption makes the latter overly complicated.

yes | mkfs.ext4 $DISK1-part2

mkdir /mnt/install/boot

mount $DISK1-part2 /mnt/install/boot/

Lastly, we need to format EFI partition:

mkfs.msdos -F 32 -n EFI -i 4d65646f $DISK1-part1

mkdir /mnt/install/boot/efi

mount $DISK1-part1 /mnt/install/boot/efi

And, only now we’re ready to copy system files. This will take a while.

apt update

apt install --yes debootstrap

debootstrap mantic /mnt/install/

Before using our newly copied system to finish installation, we can set a few files.

echo $HOST > /mnt/install/etc/hostname

sed "s/ubuntu/$HOST/" /etc/hosts > /mnt/install/etc/hosts

sed '/cdrom/d' /etc/apt/sources.list > /mnt/install/etc/apt/sources.list

cp /etc/netplan/*.yaml /mnt/install/etc/netplan/

Finally, we can log in to our new semi-installed system using chroot:

mount --rbind /dev /mnt/install/dev

mount --rbind /proc /mnt/install/proc

mount --rbind /sys /mnt/install/sys

chroot /mnt/install /usr/bin/env \
DISK1=$DISK1 DISK2=$DISK2 HOST=$HOST USER=$USER \
bash --login

My next step is usually setting up the locale and time zone. Since I sometimes dual-boot, I found that keeping the BIOS clock on local time works best.

locale-gen --purge "en_US.UTF-8"

update-locale LANG=en_US.UTF-8 LANGUAGE=en_US

dpkg-reconfigure --frontend noninteractive locales

ln -sf /usr/share/zoneinfo/PST8PDT /etc/localtime

dpkg-reconfigure -f noninteractive tzdata

echo UTC=no >> /etc/default/rcS

Now we’re ready to onboard the latest Linux image.

apt update

apt install --yes --no-install-recommends linux-image-generic linux-headers-generic

To allow for decrypting, we need to update crypttab:

echo "${DISK1##*/}-part4 $DISK1-part4 none \
luks,discard,initramfs,keyscript=decrypt_keyctl" >> /etc/crypttab

echo "${DISK1##*/}-part3 $DISK1-part3 none \
luks,discard,initramfs,keyscript=decrypt_keyctl" >> /etc/crypttab

echo "${DISK2##*/}-part4 $DISK2-part4 none \
luks,discard,initramfs,keyscript=decrypt_keyctl" >> /etc/crypttab

echo "${DISK2##*/}-part3 $DISK2-part3 none \
luks,discard,initramfs,keyscript=decrypt_keyctl" >> /etc/crypttab

cat /etc/crypttab
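Each crypttab entry has four fields: mapper name, source device, key file (none here, so the password is asked for), and options. As a rough sketch of what one of the lines above should look like once written, here is a hypothetical `crypttab_line` helper run against a made-up disk ID:

```shell
# Sketch: build one crypttab line in the same shape as the entries above.
# crypttab_line is a hypothetical helper; the disk ID is made up.
crypttab_line() {
    disk=$1
    part=$2
    printf '%s %s none luks,discard,initramfs,keyscript=decrypt_keyctl\n' \
        "${disk##*/}-part${part}" "${disk}-part${part}"
}

crypttab_line /dev/disk/by-id/nvme-EXAMPLE_1234 4
```

If the output of `cat /etc/crypttab` shows an entry broken across two lines, the entry needs to be rewritten as a single line before continuing.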

And, of course, all those drives need to be mounted too. Please note that the last two entries are not really needed, but I like to have them as it prevents Ubuntu from cluttering the taskbar otherwise.

echo "PARTUUID=$(blkid -s PARTUUID -o value $DISK1-part2) \
/boot ext4 nofail,noatime,x-systemd.device-timeout=3s 0 1" >> /etc/fstab

echo "PARTUUID=$(blkid -s PARTUUID -o value $DISK1-part1) \
/boot/efi vfat nofail,noatime,x-systemd.device-timeout=3s 0 1" >> /etc/fstab

echo "/dev/mapper/${DISK1##*/}-part3 \
swap swap nofail 0 0" >> /etc/fstab

echo "/dev/mapper/${DISK2##*/}-part3 \
swap swap nofail 0 0" >> /etc/fstab

echo "/dev/mapper/${DISK1##*/}-part4 \
none auto nofail,nosuid,nodev,noauto 0 0" >> /etc/fstab

echo "/dev/mapper/${DISK2##*/}-part4 \
none auto nofail,nosuid,nodev,noauto 0 0" >> /etc/fstab

cat /etc/fstab
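Because these entries are assembled with echo, it can be worth checking that every fstab line ended up with the usual six fields. The following is just a sketch; `check_fstab` is a hypothetical helper that only reads the file it is given.

```shell
# Sketch: report fstab lines that do not have exactly six fields.
# check_fstab is a hypothetical helper; it only reads the given file.
check_fstab() {
    awk '
        /^[ \t]*(#|$)/ { next }    # skip comments and blank lines
        NF != 6 { printf "line %d has %d fields\n", NR, NF; bad = 1 }
        END { exit bad }
    ' "$1"
}

# Usage inside the chroot:
# check_fstab /etc/fstab && echo "fstab looks well-formed"
```

This only counts fields; it does not verify that the devices or mount points exist.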

Next we can proceed with setting up the boot environment:

apt install --yes zfs-initramfs cryptsetup keyutils grub-efi-amd64-signed shim-signed

KERNEL=`ls /usr/lib/modules/ | cut -d/ -f1 | sed 's/linux-image-//'`

update-initramfs -c -k all

To be able to actually use that boot environment, we install Grub too:

apt install --yes grub-efi-amd64-signed shim-signed

sed -i "s/^GRUB_CMDLINE_LINUX_DEFAULT.*/GRUB_CMDLINE_LINUX_DEFAULT=\"quiet splash \
RESUME=UUID=$(blkid -s UUID -o value /dev/mapper/${DISK1##*/}-part3)\"/" \
/etc/default/grub

update-grub

grub-install --target=x86_64-efi --efi-directory=/boot/efi \
--bootloader-id=Ubuntu --recheck --no-floppy
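The sed substitution above rewrites the whole GRUB_CMDLINE_LINUX_DEFAULT line so that it carries the swap UUID for resume. To see the effect without touching the real file, the same kind of substitution can be tried on a scratch copy. This is a sketch only; `set_resume` is a hypothetical helper and the UUID is a made-up placeholder.

```shell
# Sketch: run the same kind of substitution against a scratch file so the
# result can be inspected. The UUID below is a made-up placeholder.
set_resume() {
    file=$1
    uuid=$2
    sed -i "s/^GRUB_CMDLINE_LINUX_DEFAULT.*/GRUB_CMDLINE_LINUX_DEFAULT=\"quiet splash RESUME=UUID=${uuid}\"/" "$file"
}

scratch=$(mktemp)
echo 'GRUB_CMDLINE_LINUX_DEFAULT="quiet splash"' > "$scratch"
set_resume "$scratch" 01234567-89ab-cdef-0123-456789abcdef
cat "$scratch"
rm -f "$scratch"
```

On the real system, `grep GRUB_CMDLINE_LINUX_DEFAULT /etc/default/grub` shows whether the UUID was filled in; an empty value after `UUID=` means blkid could not read the swap device.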

With most of the system setup done, we get to install (minimum) Desktop packages:

apt install --yes ubuntu-desktop-minimal

To ensure the system wakes up with firewall, you can get iptables running:

apt install --yes man iptables iptables-persistent

iptables -F

iptables -X

iptables -Z

iptables -P INPUT DROP

iptables -P FORWARD DROP

iptables -P OUTPUT ACCEPT

iptables -A INPUT -i lo -j ACCEPT

iptables -A INPUT -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT

iptables -A INPUT -p icmp -j ACCEPT

iptables -A INPUT -p tcp --dport 22 -j ACCEPT

ip6tables -F

ip6tables -X

ip6tables -Z

ip6tables -P INPUT DROP

ip6tables -P FORWARD DROP

ip6tables -P OUTPUT ACCEPT

ip6tables -A INPUT -i lo -j ACCEPT

ip6tables -A INPUT -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT

ip6tables -A INPUT -p ipv6-icmp -j ACCEPT

ip6tables -A INPUT -p tcp --dport 22 -j ACCEPT

netfilter-persistent save

echo ; iptables -L ; echo; ip6tables -L
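The IPv4 and IPv6 rule sets above differ only in the command name and the ICMP protocol name. As a sketch of how that duplication could be expressed once, the hypothetical `fw_rules` helper below prints the commands instead of running them, so the list can be reviewed first.

```shell
# Sketch: print (not run) the same rule set for both address families.
# fw_rules is a hypothetical helper name.
fw_rules() {
    for cmd in iptables ip6tables; do
        # ICMP has a different protocol name under IPv6.
        if [ "$cmd" = ip6tables ]; then icmp=ipv6-icmp; else icmp=icmp; fi
        echo "$cmd -F"
        echo "$cmd -X"
        echo "$cmd -Z"
        echo "$cmd -P INPUT DROP"
        echo "$cmd -P FORWARD DROP"
        echo "$cmd -P OUTPUT ACCEPT"
        echo "$cmd -A INPUT -i lo -j ACCEPT"
        echo "$cmd -A INPUT -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT"
        echo "$cmd -A INPUT -p $icmp -j ACCEPT"
        echo "$cmd -A INPUT -p tcp --dport 22 -j ACCEPT"
    done
}

fw_rules    # review the output first
```

Once the list looks right, piping it to a root shell (`fw_rules | sh`) would apply it, followed by `netfilter-persistent save` as above.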

Some people like the snap packaging system and those people are wrong. If you are one of those that share this belief, you can remove snap too:

apt remove --yes snapd

echo 'Package: snapd' > /etc/apt/preferences.d/snapd

echo 'Pin: release *' >> /etc/apt/preferences.d/snapd

echo 'Pin-Priority: -1' >> /etc/apt/preferences.d/snapd

Since our snap removal also got rid of Firefox, we can add it manually:

add-apt-repository --yes ppa:mozillateam/ppa

cat << 'EOF' | sed 's/^ //' | tee /etc/apt/preferences.d/mozillateamppa

Package: firefox*

Pin: release o=LP-PPA-mozillateam

Pin-Priority: 501

EOF

apt update && apt install --yes firefox

To have a bit wider software selection, adding universe repo comes in handy:

add-apt-repository --yes universe

apt update

And, since Framework 16 is AMD-based, adding AMD PPA is a must:

add-apt-repository --yes ppa:superm1/ppd

apt update

Also, since Framework 16 is new, we need to update the keyboard definition too (this step might not be necessary in the future):

cat << EOF | sudo tee -a /usr/share/libinput/50-framework.quirks

[Framework Laptop 16 Keyboard Module]

MatchName=Framework Laptop 16 Keyboard Module*

MatchUdevType=keyboard

MatchDMIModalias=dmi:*svnFramework:pnLaptop16*

AttrKeyboardIntegration=internal

EOF

Setting up hibernation is fully optional. It starts with the sleep configuration:

sed -i 's/.*AllowSuspend=.*/AllowSuspend=yes/' \
/etc/systemd/sleep.conf

sed -i 's/.*AllowHibernation=.*/AllowHibernation=yes/' \
/etc/systemd/sleep.conf

sed -i 's/.*AllowSuspendThenHibernate=.*/AllowSuspendThenHibernate=yes/' \
/etc/systemd/sleep.conf

sed -i 's/.*HibernateDelaySec=.*/HibernateDelaySec=13min/' \
/etc/systemd/sleep.conf

And continues with button setup:

apt install -y pm-utils

sed -i 's/.*HandlePowerKey=.*/HandlePowerKey=hibernate/' \
/etc/systemd/logind.conf

sed -i 's/.*HandleLidSwitch=.*/HandleLidSwitch=suspend-then-hibernate/' \
/etc/systemd/logind.conf

sed -i 's/.*HandleLidSwitchExternalPower=.*/HandleLidSwitchExternalPower=suspend-then-hibernate/' \
/etc/systemd/logind.conf
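The sed edits above replace any line containing the key, commented out or not, with an active setting. A small sketch on a scratch file shows the effect; `enable_option` is a hypothetical helper and the file contents merely mimic commented-out defaults.

```shell
# Sketch: apply the same pattern to a scratch file that mimics
# commented-out defaults.
scratch=$(mktemp)
printf '%s\n' '[Sleep]' '#AllowSuspend=yes' '#AllowHibernation=yes' > "$scratch"

enable_option() {
    # $1 = key, $2 = value, $3 = file; replaces the whole line, comment or not.
    sed -i "s/.*$1=.*/$1=$2/" "$3"
}

enable_option AllowHibernation yes "$scratch"
cat "$scratch"
rm -f "$scratch"
```

Note that this pattern only changes lines that already mention the key. If a key is missing from the file altogether, sed leaves the file untouched and the setting has to be added by hand.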

With all system stuff done, we finally get to create our new user:

adduser --disabled-password --gecos '' $USER

usermod -a -G adm,cdrom,dialout,dip,lpadmin,plugdev,sudo,tty $USER

echo "$USER ALL=NOPASSWD:ALL" > /etc/sudoers.d/$USER

passwd $USER

Now we can exit back into the installer:

exit

Don’t forget to properly clean our mount points in order to have the system boot:

umount /mnt/install/boot/efi

umount /mnt/install/boot

mount | grep -v zfs | tac | awk '/\/mnt/ {print $3}' | xargs -i{} umount -lf {}

zpool export -a
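The cleanup pipeline above is dense, so here is a sketch of just its filtering part, fed with canned mount-style lines instead of live data. It drops ZFS mounts (those are handled by the pool export), reverses the order so the most recently listed mounts come first, and prints the mount point of anything under /mnt. The `mounts_to_clean` name and the sample lines are illustrative assumptions.

```shell
# Sketch: the filter part of the cleanup pipeline, fed with canned
# `mount`-style lines instead of live data. Paths are illustrative.
mounts_to_clean() {
    grep -v zfs | tac | awk '/\/mnt/ {print $3}'
}

printf '%s\n' \
    'udev on /mnt/install/dev type devtmpfs (rw)' \
    'mypool/System on /mnt/install type zfs (rw)' \
    'proc on /mnt/install/proc type proc (rw)' \
    'sysfs on /mnt/install/sys type sysfs (rw)' \
    | mounts_to_clean
```

In the real command, each printed path is then handed to `umount -lf` by xargs.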

Finally, we are just a reboot away from success:

reboot

Once we log in, there are just a few finishing touches. For example, I like to increase text size:

gsettings set org.gnome.desktop.interface text-scaling-factor 1.25

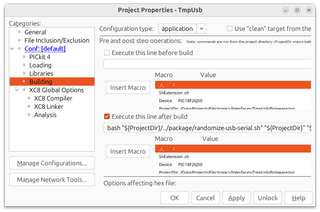

If you still remember where we started, you’ll notice that, while data is mirrored, our EFI and boot partition are not. My preferred way of keeping them in sync is by using my own utility named syncbootpart:

wget -O- http://packages.medo64.com/keys/medo64.asc | sudo tee /etc/apt/trusted.gpg.d/medo64.asc

echo "deb http://packages.medo64.com/deb stable main" | sudo tee /etc/apt/sources.list.d/medo64.list

sudo apt-get update

sudo apt-get install -y syncbootpart

sudo syncbootpart

sudo update-initramfs -u -k all

sudo update-grub

This utility finds the boot and EFI partitions currently in use and copies them to the second disk (matching the partitions by UUID). Every time a new kernel is installed, it copies that to the second disk too. Since both disks share the same UUIDs, the BIOS will boot from whichever disk it finds first, and you can lose either drive while preserving your “bootability”.

At last, with all manual steps completed, we can enjoy our new system.