ZFS was, and still is, the primary driver for my Linux adventures. Be it snapshots or seamless data restoration, once you go ZFS it’s really hard to go back. And to get the full benefits of a ZFS setup you need at least two drives. Since my Framework 16 came with two drives, the immediate idea was to set up a ZFS mirror.

While Framework 16 does have two NVMe drive slots, they are not the same. One of them is a full-size M.2 2280 slot and that’s the one I love. The other one is a rather puny M.2 2230. Since M.2 2230 SSDs are limited to 2 TB in size, that also puts an upper limit on our mirror size. However, I still decided to combine it with a 4 TB drive.

My idea for the setup is as follows: I match the smaller drive’s partitioning exactly so I get as much mirrored disk space as possible. The leftover space I get to use for files that are more forgiving when it comes to data loss.

I also wanted full disk encryption using LUKS (although most of the steps work if you have native ZFS encryption too). Since this is a laptop, I definitely wanted hibernation support too as it makes life much easier.

Now, the easy and smart approach might be to use Ubuntu’s ZFS installer directly and let it sort everything out. And let nobody tell you anything is wrong with that. However, I personally like a more controlled approach that requires a lot of manual steps. And no, I don’t remember them by heart - I just do a lot of copy/paste.

With that out of the way, let’s go over the necessary steps.

The first step is to boot into the “Try Ubuntu” option of the USB installation. Once we have a desktop, we want to open a terminal. And, since all further commands are going to need root access, we can start with that.

sudo -i

The next step should be setting up a few variables - both disks, hostname, and username (the pool name is derived from the hostname later). This way we can use them going forward and avoid accidental mistakes. Just make sure to replace these values with ones appropriate for your system.

DISK1=/dev/disk/by-id/<disk1>

DISK2=/dev/disk/by-id/<disk2>

HOST=<hostname>

USERNAME=<username>
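Since every later command depends on these four variables, a quick sanity check can save some grief. Below is a minimal sketch, not part of the original procedure; `require_vars` is a hypothetical helper name, and it only checks that each variable is non-empty and no longer contains a `<placeholder>`.

```shell
# Hypothetical helper: fail if any named variable is empty or still
# holds a <placeholder> value copied from the template above.
require_vars() {
    status=0
    for name in "$@"; do
        eval "value=\${$name:-}"
        case $value in
            ''|*'<'*'>'*)
                echo "variable $name is empty or still a <placeholder>" >&2
                status=1
                ;;
        esac
    done
    return $status
}

# Usage, once the variables are set:
#   require_vars DISK1 DISK2 HOST USERNAME || echo "fix the variables first"
```

It does not verify that the disks actually exist; `ls -l $DISK1 $DISK2` is an easy manual check for that.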

On the smaller drive I wanted 3 partitions. The first two partitions are unencrypted and in charge of booting. While I love encryption, I almost never encrypt the boot partition. You cannot seamlessly integrate the boot partition password prompt with the later password prompt, so you would have to type the password twice (or thrice if you decide to use native ZFS encryption on top of that). The third partition is encrypted and takes the rest of the drive.

On the bigger drive I decided to have 5 partitions. The first three match the smaller drive. The fourth partition is a 96 GB swap, sized to accommodate hibernation in the worst case scenario. Realistically, even though my laptop has 96 GB of RAM, I could have gone with a smaller swap partition but I decided to reserve this space for potential future adventures. The last partition will be for extra non-mirrored data.

All these requirements come in the following few partitioning commands:

DISK1_LASTSECTOR=$(( `blockdev --getsz $DISK1` / 2048 * 2048 - 2048 - 1 ))

DISK2_LASTSECTOR=$(( `blockdev --getsz $DISK2` / 2048 * 2048 - 2048 - 1 ))

blkdiscard -f $DISK1 2>/dev/null

sgdisk --zap-all $DISK1

sgdisk -n1:1M:+127M -t1:EF00 -c1:EFI $DISK1

sgdisk -n2:0:+1920M -t2:8300 -c2:Boot $DISK1

sgdisk -n3:0:$DISK1_LASTSECTOR -t3:8309 -c3:LUKS $DISK1

sgdisk --print $DISK1

PART1UUID=`blkid -s PARTUUID -o value $DISK1-part1`

PART2UUID=`blkid -s PARTUUID -o value $DISK1-part2`

blkdiscard -f $DISK2 2>/dev/null

sgdisk --zap-all $DISK2

sgdisk -n1:1M:+127M -t1:EF00 -c1:EFI -u1:$PART1UUID $DISK2

sgdisk -n2:0:+1920M -t2:8300 -c2:Boot -u2:$PART2UUID $DISK2

sgdisk -n3:0:$DISK1_LASTSECTOR -t3:8309 -c3:LUKS -u3:R $DISK2

sgdisk -n4:0:+96G -t4:8200 -c4:Swap -u4:R $DISK2

sgdisk -n5:0:$DISK2_LASTSECTOR -t5:8309 -c5:LUKS $DISK2

sgdisk --print $DISK2

And yes, using the same partition UUIDs for boot drives is important and we’ll use it later to have a mirror of our boot data too.

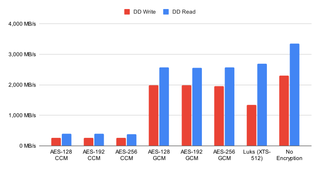

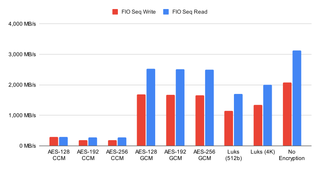

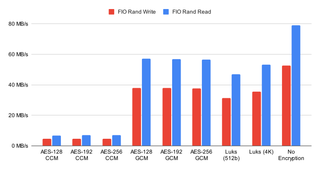
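The last-sector arithmetic used in the partitioning commands is easier to follow on a concrete number. The sketch below just repeats the same formula inside a function; `last_usable_sector` is a name I made up for illustration, and the sample sector count is a typical value for a 2 TB drive rather than anything measured on this laptop.

```shell
# Sector counts are in 512-byte units, as reported by `blockdev --getsz`.
last_usable_sector() {
    # Round down to a 1 MiB boundary (2048 sectors), leave one more MiB
    # free at the end of the disk, then step back one sector so the
    # partition ends right before a 1 MiB boundary.
    echo $(( $1 / 2048 * 2048 - 2048 - 1 ))
}

# Assumed example: a 2 TB drive with 3907029168 sectors.
last_usable_sector 3907029168
```

Because the third partition on both drives ends at DISK1_LASTSECTOR, the two LUKS partitions that form the mirror come out the same size.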

The next step is to set up all LUKS partitions. If you paid attention, that means we need to repeat formatting a total of 4 times. Unless you want to deal with multiple password prompts, make sure to use the same password for each:

cryptsetup luksFormat -q --type luks2 \

--sector-size 4096 \

--cipher aes-xts-plain64 --key-size 256 \

--pbkdf argon2i $DISK1-part3

cryptsetup luksFormat -q --type luks2 \

--sector-size 4096 \

--cipher aes-xts-plain64 --key-size 256 \

--pbkdf argon2i $DISK2-part3

cryptsetup luksFormat -q --type luks2 \

--sector-size 4096 \

--cipher aes-xts-plain64 --key-size 256 \

--pbkdf argon2i $DISK2-part4

cryptsetup luksFormat -q --type luks2 \

--sector-size 4096 \

--cipher aes-xts-plain64 --key-size 256 \

--pbkdf argon2i $DISK2-part5
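The four format commands above differ only in the target partition, so they can also be generated in a loop. This is a dry-run sketch under that assumption: `luks_format_commands` is a hypothetical helper that only prints the commands, and the disk paths in the example call are made up.

```shell
# Print (do not run) one luksFormat command per encrypted partition.
luks_format_commands() {
    disk1=$1
    disk2=$2
    for part in "$disk1-part3" "$disk2-part3" "$disk2-part4" "$disk2-part5"; do
        echo "cryptsetup luksFormat -q --type luks2" \
             "--sector-size 4096" \
             "--cipher aes-xts-plain64 --key-size 256" \
             "--pbkdf argon2i $part"
    done
}

# Made-up disk IDs, just to show the output.
luks_format_commands /dev/disk/by-id/nvme-SMALL /dev/disk/by-id/nvme-BIG
```

Printing first and pasting later keeps the copy/paste workflow and makes it obvious which partitions are about to be wiped.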

Since creating encrypted partitions doesn’t open them, we need to do that as a separate step. I like to name my LUKS devices based on partition names so we can recognize them more easily:

cryptsetup luksOpen \

--persistent --allow-discards \

--perf-no_write_workqueue --perf-no_read_workqueue \

$DISK1-part3 ${DISK1##*/}-part3

cryptsetup luksOpen \

--persistent --allow-discards \

--perf-no_write_workqueue --perf-no_read_workqueue \

$DISK2-part3 ${DISK2##*/}-part3

cryptsetup luksOpen \

--persistent --allow-discards \

--perf-no_write_workqueue --perf-no_read_workqueue \

$DISK2-part4 ${DISK2##*/}-part4

cryptsetup luksOpen \

--persistent --allow-discards \

--perf-no_write_workqueue --perf-no_read_workqueue \

$DISK2-part5 ${DISK2##*/}-part5
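The `${DISK1##*/}` expansion used for the device names strips everything up to the last slash, leaving just the disk ID. A small sketch of the naming scheme follows; `mapper_name` is a hypothetical helper and the disk ID is made up.

```shell
# Build the LUKS mapper name for a given disk path and partition number.
mapper_name() {
    disk=$1
    part=$2
    # ${disk##*/} removes the longest prefix ending in "/", i.e. the directory.
    echo "${disk##*/}-part$part"
}

# Made-up disk ID; the result shows up as /dev/mapper/<name> once opened.
mapper_name /dev/disk/by-id/nvme-EXAMPLE_SSD_1234 3
```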

Finally, we can set up our mirrored ZFS pool with an optional step of setting quota to roughly 85% of disk capacity. Since we’re using LUKS, there’s no need to set up any ZFS keys. The name of the mirrored pool will match the name of the host (capitalized) and it will contain several datasets to start with. It’s a good starting point; adjust as needed:

zpool create -o ashift=12 -o autotrim=on \

-O compression=lz4 -O normalization=formD \

-O acltype=posixacl -O xattr=sa -O dnodesize=auto -O atime=off \

-O quota=1600G \

-O canmount=off -O mountpoint=none -R /mnt/install \

${HOST^} mirror /dev/mapper/${DISK1##*/}-part3 /dev/mapper/${DISK2##*/}-part3

zfs create -o canmount=noauto -o mountpoint=/ \

-o reservation=100G \

${HOST^}/System

zfs mount ${HOST^}/System

zfs create -o canmount=noauto -o mountpoint=/home \

${HOST^}/Home

zfs mount ${HOST^}/Home

zfs set canmount=on ${HOST^}/Home

zfs create -o canmount=noauto -o mountpoint=/Data \

${HOST^}/Data

zfs set canmount=on ${HOST^}/Data

zfs set devices=off ${HOST^}
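For the quota value, here is a rough sketch of the 85% arithmetic. It assumes a nominal 2 TB (2,000,000,000,000 byte) drive and ignores the small amount lost to the boot partitions and LUKS headers; `quota_gib` is a hypothetical helper, not something ZFS provides.

```shell
# Whole GiB corresponding to a percentage (default 85) of a byte count.
quota_gib() {
    bytes=$1
    percent=${2:-85}
    echo $(( bytes * percent / 100 / 1073741824 ))
}

# Assumed nominal capacity of a 2 TB drive.
quota_gib 2000000000000
```

The result lands a little under the round 1600G used above, which is close enough given that the quota is optional and only there to keep the pool from filling up completely.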

Of course, we can also set up our extra non-mirrored pool:

zpool create -o ashift=12 -o autotrim=on \

-O compression=lz4 -O normalization=formD \

-O acltype=posixacl -O xattr=sa -O dnodesize=auto -O atime=off \

-O quota=1600G \

-O canmount=on -O mountpoint=/Extra \

${HOST^}Extra /dev/mapper/${DISK2##*/}-part5

With ZFS done, we might as well set up boot, EFI, and swap partitions too. And yes, we don’t have mirrored boot and EFI at this time; we’ll sort that out later.

yes | mkfs.ext4 $DISK1-part2

mkdir /mnt/install/boot

mount $DISK1-part2 /mnt/install/boot/

mkfs.msdos -F 32 -n EFI -i 4d65646f $DISK1-part1

mkdir /mnt/install/boot/efi

mount $DISK1-part1 /mnt/install/boot/efi

mkswap /dev/mapper/${DISK2##*/}-part4

At this time, I also sometimes disable IPv6 as I’ve noticed that on some misconfigured IPv6 networks it takes ages to download packages. This step is both temporary (i.e., IPv6 is disabled only during installation) and fully optional.

sysctl -w net.ipv6.conf.all.disable_ipv6=1

sysctl -w net.ipv6.conf.default.disable_ipv6=1

sysctl -w net.ipv6.conf.lo.disable_ipv6=1

To start the fun we need to debootstrap our OS. As of this step, you must be connected to the Internet.

apt update

apt dist-upgrade --yes

apt install --yes debootstrap

debootstrap noble /mnt/install/

We can use our live system to update a few files on our new installation:

echo $HOST > /mnt/install/etc/hostname

sed "s/ubuntu/$HOST/" /etc/hosts > /mnt/install/etc/hosts

rm /mnt/install/etc/apt/sources.list

cp /etc/apt/sources.list.d/ubuntu.sources /mnt/install/etc/apt/sources.list.d/ubuntu.sources

cp /etc/netplan/*.yaml /mnt/install/etc/netplan/

At last, we’re ready to chroot into our new system.

mount --rbind /dev /mnt/install/dev

mount --rbind /proc /mnt/install/proc

mount --rbind /sys /mnt/install/sys

chroot /mnt/install /usr/bin/env \

DISK1=$DISK1 DISK2=$DISK2 HOST=$HOST USERNAME=$USERNAME \

bash --login

With our newly installed system running, let’s not forget to set up locale and time zone.

locale-gen --purge "en_US.UTF-8"

update-locale LANG=en_US.UTF-8 LANGUAGE=en_US

dpkg-reconfigure --frontend noninteractive locales

ln -sf /usr/share/zoneinfo/America/Los_Angeles /etc/localtime

dpkg-reconfigure -f noninteractive tzdata

Now we’re ready to onboard the latest Linux image.

apt update

apt install --yes --no-install-recommends \

linux-image-generic linux-headers-generic

Now we set up crypttab so our encrypted partitions are decrypted on boot.

echo "${DISK1##*/}-part3 $DISK1-part3 none \

luks,discard,initramfs,keyscript=decrypt_keyctl" >> /etc/crypttab

echo "${DISK2##*/}-part3 $DISK2-part3 none \

luks,discard,initramfs,keyscript=decrypt_keyctl" >> /etc/crypttab

echo "${DISK2##*/}-part4 $DISK2-part4 none \

luks,discard,initramfs,keyscript=decrypt_keyctl" >> /etc/crypttab

echo "${DISK2##*/}-part5 $DISK2-part5 none \

luks,discard,initramfs,keyscript=decrypt_keyctl" >> /etc/crypttab

cat /etc/crypttab
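Each crypttab line has four fields: the mapper name, the source device, the key file, and the options. The sketch below prints one such line so the structure is easier to see; `crypttab_line` is a hypothetical helper and the disk ID is made up.

```shell
# Print one crypttab entry: <name> <device> <keyfile> <options>
crypttab_line() {
    disk=$1
    part=$2
    echo "${disk##*/}-part$part $disk-part$part none" \
         "luks,discard,initramfs,keyscript=decrypt_keyctl"
}

# Made-up disk ID, just to show the resulting line.
crypttab_line /dev/disk/by-id/nvme-EXAMPLE 3
```

As far as I understand it, decrypt_keyctl caches the passphrase per key file field, so having the same value (none) on all four entries is what lets one password prompt unlock them all.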

To mount all those partitions, we also need some fstab entries. ZFS entries are not strictly needed. I just like to add them in order to hide our LUKS encrypted ZFS from the file manager:

echo "PARTUUID=$(blkid -s PARTUUID -o value $DISK1-part2) \

/boot ext4 noatime,nofail,x-systemd.device-timeout=3s 0 1" >> /etc/fstab

echo "PARTUUID=$(blkid -s PARTUUID -o value $DISK1-part1) \

/boot/efi vfat noatime,nofail,x-systemd.device-timeout=3s 0 1" >> /etc/fstab

echo "/dev/mapper/${DISK1##*/}-part3 \

none auto nofail,nosuid,nodev,noauto 0 0" >> /etc/fstab

echo "/dev/mapper/${DISK2##*/}-part3 \

none auto nofail,nosuid,nodev,noauto 0 0" >> /etc/fstab

echo "/dev/mapper/${DISK2##*/}-part4 \

swap swap nofail 0 0" >> /etc/fstab

echo "/dev/mapper/${DISK2##*/}-part5 \

none auto nofail,nosuid,nodev,noauto 0 0" >> /etc/fstab

cat /etc/fstab

On systems with a lot of RAM, I like to adjust memory settings a bit. This is inconsequential in the grand scheme of things, but I like to do it anyway.

echo "vm.swappiness=10" >> /etc/sysctl.conf

echo "vm.min_free_kbytes=1048576" >> /etc/sysctl.conf

Now we can create the boot environment:

apt install --yes zfs-initramfs cryptsetup keyutils grub-efi-amd64-signed shim-signed

update-initramfs -c -k all

And then, we can get grub going. Do note we also set up resuming from swap (needed for hibernation) here too. If you’re using secure boot, bootloader-id HAS to be Ubuntu.

apt install --yes grub-efi-amd64-signed shim-signed

sed -i "s/^GRUB_CMDLINE_LINUX_DEFAULT.*/GRUB_CMDLINE_LINUX_DEFAULT=\"quiet splash \

RESUME=UUID=$(blkid -s UUID -o value /dev/mapper/${DISK2##*/}-part4)\"/" \

/etc/default/grub

update-grub

grub-install --target=x86_64-efi --efi-directory=/boot/efi \

--bootloader-id=Ubuntu --recheck --no-floppy

I don’t like snap so I preemptively banish it from ever being installed:

apt remove --yes snapd 2>/dev/null

echo 'Package: snapd' > /etc/apt/preferences.d/snapd

echo 'Pin: release *' >> /etc/apt/preferences.d/snapd

echo 'Pin-Priority: -1' >> /etc/apt/preferences.d/snapd

apt update

And now, finally, we can install our desktop environment.

apt install --yes ubuntu-desktop-minimal man

Since Firefox is a snap package (and snap is banished), we can install it manually from a PPA:

add-apt-repository --yes ppa:mozillateam/ppa

cat << 'EOF' | sed 's/^ //' | tee /etc/apt/preferences.d/mozillateamppa

Package: firefox*

Pin: release o=LP-PPA-mozillateam

Pin-Priority: 501

EOF

apt update && apt install --yes firefox

Chrome aficionados can install it too:

pushd /tmp

wget --inet4-only https://dl.google.com/linux/direct/google-chrome-stable_current_amd64.deb

apt install ./google-chrome-stable_current_amd64.deb

popd

If you still remember the start of this post, we are yet to mirror our boot and EFI partition. For this, I have a small utility we might as well install now:

wget -O- http://packages.medo64.com/keys/medo64.asc | sudo tee /etc/apt/trusted.gpg.d/medo64.asc

echo "deb http://packages.medo64.com/deb stable main" | sudo tee /etc/apt/sources.list.d/medo64.list

apt update

apt install -y syncbootpart

syncbootpart

With Framework 16, there are no mandatory changes you need to do in order to have the system working. That said, I still like to do a few changes; the first of them is to allow trim operation on expansion cards:

cat << EOF | tee /etc/udev/rules.d/42-framework-storage.rules

ACTION=="add|change", SUBSYSTEM=="scsi_disk", ATTRS{idVendor}=="13fe", ATTRS{idProduct}=="6500", ATTR{provisioning_mode}:="unmap"

ACTION=="add|change", SUBSYSTEM=="scsi_disk", ATTRS{idVendor}=="32ac", ATTRS{idProduct}=="0005", ATTR{provisioning_mode}:="unmap"

ACTION=="add|change", SUBSYSTEM=="scsi_disk", ATTRS{idVendor}=="32ac", ATTRS{idProduct}=="0010", ATTR{provisioning_mode}:="unmap"

EOF

Since we’re doing hibernation, we might as well disable some wake up events that might interfere. I explain the exact process in another blog post but suffice it to say, this works for me:

cat << EOF | sudo tee /etc/udev/rules.d/42-disable-wakeup.rules

ACTION=="add", SUBSYSTEM=="i2c", DRIVER=="i2c_hid_acpi", ATTRS{name}=="PIXA3854:00", ATTR{power/wakeup}="disabled"

ACTION=="add", SUBSYSTEM=="pci", DRIVER=="xhci_hcd", ATTRS{subsystem_device}=="0x0001", ATTRS{subsystem_vendor}=="0xf111", ATTR{power/wakeup}="disabled"

ACTION=="add", SUBSYSTEM=="serio", DRIVER=="atkbd", ATTR{power/wakeup}="disabled"

ACTION=="add", SUBSYSTEM=="usb", DRIVER=="usb", ATTR{power/wakeup}="disabled"

EOF

[2025-01-11] A probably better way is to create a small pre-hibernate script that will hunt down these devices:

cat << EOF | sudo tee /usr/lib/systemd/system-sleep/framework

#!/bin/sh

case \$1 in

pre)

for DRIVER_LINK in \$(find /sys/devices/ -name "driver" -print); do

DEVICE_PATH=\$(dirname \$DRIVER_LINK)

if [ ! -f "\$DEVICE_PATH/power/wakeup" ]; then continue; fi

DRIVER=\$( basename \$(readlink -f \$DRIVER_LINK) )

if [ "\$DRIVER" = "button" ] || [ "\$DRIVER" = "i2c_hid_acpi" ] || [ "\$DRIVER" = "xhci_hcd" ]; then

echo disabled > \$DEVICE_PATH/power/wakeup

fi

done

;;

esac

EOF

sudo chmod +x /usr/lib/systemd/system-sleep/framework
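Before trusting that script, it can help to see which drivers currently have wake-up enabled. The following is a read-only sketch that walks the tree the same way; `list_wakeup_sources` is a hypothetical helper and it changes nothing on the system.

```shell
# List "<driver> <wakeup state>" for every device that has both a driver
# link and a power/wakeup file. Defaults to scanning /sys/devices.
list_wakeup_sources() {
    root=${1:-/sys/devices}
    find "$root" -name driver -print | while read -r link; do
        dev=$(dirname "$link")
        [ -f "$dev/power/wakeup" ] || continue
        driver=$(basename "$(readlink -f "$link")")
        printf '%s %s\n' "$driver" "$(cat "$dev/power/wakeup")"
    done
}

# Usage on a running system:
#   list_wakeup_sources | sort | uniq -c
```

Running it before and after a suspend cycle shows whether the button, i2c_hid_acpi, and xhci_hcd entries really got switched to disabled.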

For hibernation I like to change sleep settings so that hibernation kicks in after 13 minutes of sleep:

sed -i 's/.*AllowSuspend=.*/AllowSuspend=yes/' \

/etc/systemd/sleep.conf

sed -i 's/.*AllowHibernation=.*/AllowHibernation=yes/' \

/etc/systemd/sleep.conf

sed -i 's/.*AllowSuspendThenHibernate=.*/AllowSuspendThenHibernate=yes/' \

/etc/systemd/sleep.conf

sed -i 's/.*HibernateDelaySec=.*/HibernateDelaySec=13min/' \

/etc/systemd/sleep.conf
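The sed commands above rely on the option already being present in the file, usually as a commented-out default; the leading `.*` swallows the `#`. If a line is missing altogether, sed silently does nothing. Here is a sketch of a slightly more defensive variant, assuming a single-section file like sleep.conf; `set_conf_option` is a hypothetical helper.

```shell
# Set key=value in a simple systemd-style config file: uncomment and
# replace an existing line, or append the option if it is not there.
set_conf_option() {
    key=$1
    value=$2
    file=$3
    if grep -q "^[#[:space:]]*$key=" "$file"; then
        sed "s/^[#[:space:]]*$key=.*/$key=$value/" "$file" > "$file.tmp" &&
            cat "$file.tmp" > "$file" &&
            rm -f "$file.tmp"
    else
        printf '%s=%s\n' "$key" "$value" >> "$file"
    fi
}

# Usage (inside the chroot, as root):
#   set_conf_option HibernateDelaySec 13min /etc/systemd/sleep.conf
```

Appending only makes sense here because the file has just one section; for multi-section files the new line could end up in the wrong place.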

For that we also need to do a minor lid switch configuration adjustment:

apt install -y pm-utils

sed -i 's/.*HandlePowerKey=.*/HandlePowerKey=hibernate/' \

/etc/systemd/logind.conf

sed -i 's/.*HandleLidSwitch=.*/HandleLidSwitch=suspend-then-hibernate/' \

/etc/systemd/logind.conf

sed -i 's/.*HandleLidSwitchExternalPower=.*/HandleLidSwitchExternalPower=suspend-then-hibernate/' \

/etc/systemd/logind.conf

Lastly, we need to have a user too.

adduser --disabled-password --gecos '' $USERNAME

usermod -a -G adm,cdrom,dialout,dip,lpadmin,plugdev,sudo,tty $USERNAME

echo "$USERNAME ALL=NOPASSWD:ALL" > /etc/sudoers.d/$USERNAME

passwd $USERNAME

It took a while, but we can finally exit our chroot environment:

exit

Let’s clean all mounted partitions and get ZFS ready for next boot:

sync

umount /mnt/install/boot/efi

umount /mnt/install/boot

mount | grep -v zfs | tac | awk '/\/mnt/ {print $3}' | xargs -i{} umount -lf {}

zpool export -a
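The unmount one-liner is a bit dense, so here is the same filter pulled apart and fed with sample input. `mounts_to_unmount` is a hypothetical name and the mount lines are made up; nothing gets unmounted by this sketch.

```shell
# Read `mount`-style lines on stdin and print the mount points under /mnt,
# last mounted first. Lines mentioning zfs are skipped, since the pool
# export takes care of those.
mounts_to_unmount() {
    grep -v zfs | tac | awk '/\/mnt/ {print $3}'
}

# Made-up sample of what `mount` might print during the install.
printf '%s\n' \
    'Host/System on /mnt/install type zfs (rw)' \
    '/dev/nvme0n1p2 on /mnt/install/boot type ext4 (rw)' \
    'udev on /mnt/install/dev type devtmpfs (rw)' \
    'proc on /proc type proc (rw)' | mounts_to_unmount
```

Reversing the order with tac matters because nested mounts have to go before their parents.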

After reboot, we should be done and our new system should boot with a password prompt.

reboot

Once we log into it, I like to first increase text size a bit:

gsettings set org.gnome.desktop.interface text-scaling-factor 1.25

Now we can also test hibernation:

sudo systemctl hibernate

If you get the error Failed to hibernate system via logind: Sleep verb "hibernate" not supported, go into BIOS and disable secure boot (Enforce Secure Boot option). Unfortunately, secure boot and hibernation still don’t work together, but there is some work in progress to make it happen in the future. At this time, you need to select one or the other.

Assuming all works nicely, we can get firmware updates going:

fwupdmgr enable-remote -y lvfs-testing

fwupdmgr refresh

fwupdmgr update

And that’s it - just half a thousand steps and you have Ubuntu 24.04 with a ZFS mirror.