TimeSpan and HH:mm

Time printing and parsing is always “fun” regardless of language, but for the most part C# actually has it much better than one would expect. However, sometimes one can still get surprised by small details.

One application had the following (extremely simplified) code writing time entries:

    var text = someDate.ToString("HH:mm", CultureInfo.InvariantCulture);

Another application had the (also simplified) parsing code:

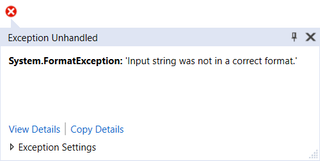

    var someTime = TimeSpan.ParseExact(text, "HH:mm", CultureInfo.InvariantCulture);

And all I got was System.FormatException: 'Input string was not in a correct format.'

The issue was not in the text; all entries were valid times. Nope, the issue was a slight difference between the custom DateTime and custom TimeSpan format specifiers.

Reading the documentation, it’s really easy to spot the first error as there is a clear warning: “The custom TimeSpan format specifiers do not include placeholder separator symbols.” In short, that means we cannot use a bare colon (:) as a TimeSpan separator. Nope, we need to escape it using a backslash (\) character. I find this one mildly annoying, as supporting time separators in TimeSpan seems like pretty basic functionality.
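As a minimal sketch of the escaping rule (the sample value "14:05" is my own, not taken from the original application), the snippet below compares an unescaped colon with two ways of marking it as a literal. The single-quote form is the other literal syntax the TimeSpan documentation describes; I use TryParseExact for the failing case only to avoid a try/catch.

```csharp
using System;
using System.Globalization;

var text = "14:05"; // hypothetical sample entry

// A bare colon is not a valid custom TimeSpan specifier, so this attempt fails.
bool unescapedOk = TimeSpan.TryParseExact(
    text, "hh:mm", CultureInfo.InvariantCulture, out TimeSpan unused);

// Backslash escape; the verbatim string avoids doubling the backslash.
var viaBackslash = TimeSpan.ParseExact(text, @"hh\:mm", CultureInfo.InvariantCulture);

// Single quotes mark the colon as a literal as well.
var viaQuotes = TimeSpan.ParseExact(text, "hh':'mm", CultureInfo.InvariantCulture);

Console.WriteLine($"unescaped accepted: {unescapedOk}");
Console.WriteLine($"backslash: {viaBackslash}, quotes: {viaQuotes}");
```

Which escape to pick is mostly a matter of taste; the verbatim string with a backslash stays closest to the way the format reads.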

But there is also a second error that can stay invisible if you’re not watching carefully. TimeSpan has no knowledge of the H specifier. It understands only its lowercase brother h, which for TimeSpan means the hours component on a 0 to 23 scale rather than a 12-hour clock. I would personally argue this is wrong. If only one of those two had to be used, it should have been H as it denotes 24-hour time more uniquely.
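To make the H versus h difference concrete, here is a small sketch using a made-up afternoon timestamp (the date and the variable names are illustrative assumptions). It shows that the same lowercase letters mean a 12-hour clock for DateTime but the full hours component for TimeSpan, and that TimeSpan rejects uppercase H even when the colon is escaped.

```csharp
using System;
using System.Globalization;

var inv = CultureInfo.InvariantCulture;
var afternoon = new DateTime(2024, 1, 15, 14, 5, 0); // hypothetical sample value

// DateTime: HH is the 24-hour clock, hh is the 12-hour clock.
string dateTime24 = afternoon.ToString("HH:mm", inv); // "14:05"
string dateTime12 = afternoon.ToString("hh:mm", inv); // "02:05"

// TimeSpan: hh is the hours component (0 to 23), so nothing is lost.
string timeSpanText = afternoon.TimeOfDay.ToString(@"hh\:mm", inv); // "14:05"

// TimeSpan does not know H at all, so this attempt fails.
bool upperOk = TimeSpan.TryParseExact("14:05", @"HH\:mm", inv, out TimeSpan unused);

Console.WriteLine($"{dateTime24} {dateTime12} {timeSpanText} {upperOk}");
```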

In any case, the corrected code was as follows:

    var someTime = TimeSpan.ParseExact(text, "hh\\:mm", CultureInfo.InvariantCulture);

Ideally one would always want to use the same format for output as for input, but just because the semantics of one class are the same as the other's for your use case, you cannot count on the format specifiers being the same.
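For completeness, a sketch of the whole round trip, assuming a hypothetical someDate value. It also shows one possible alternative that was not part of the original fix: if sharing a single format string matters, the text can be parsed back with DateTime.ParseExact and reduced to a TimeSpan through TimeOfDay.

```csharp
using System;
using System.Globalization;

var inv = CultureInfo.InvariantCulture;
var someDate = new DateTime(2024, 1, 15, 14, 5, 0); // hypothetical sample value

// Writer side: DateTime format with a plain colon and uppercase HH.
var text = someDate.ToString("HH:mm", inv);

// Reader side, as in the corrected code: TimeSpan format with escaped colon and lowercase hh.
var viaTimeSpan = TimeSpan.ParseExact(text, @"hh\:mm", inv);

// Alternative (my assumption, not the original fix): reuse the writer's
// format with DateTime.ParseExact and keep only the time of day.
var viaDateTime = DateTime.ParseExact(text, "HH:mm", inv).TimeOfDay;

Console.WriteLine($"{text} -> {viaTimeSpan} / {viaDateTime}");
```

The alternative trades a slightly heavier parse for having one format constant shared by both applications; whether that is worth it depends on how the two code bases are maintained.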