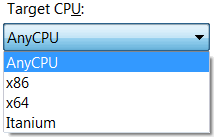

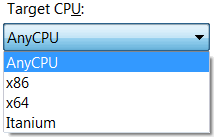

There is one decision that every .NET developer has to face: which target platform should I use?

In my experience, most developers leave it on AnyCPU. This usually works just fine (at least on their machine ;)), but understanding the options can make all the difference.

x86

This is our 32-bit processor. If we run a program compiled for it on a 32-bit OS, it is a 32-bit application. If we run it on a 64-bit OS, it still runs as a 32-bit application (WOW64 takes care of that). Its whole world fits inside 32 bits no matter what the underlying platform is capable of. I would dare to say that this may be your wisest choice if you need to play with old technology.

x64

This is what we call a 64-bit processor these days. AMD calls it “AMD64”, Intel thinks of it as “Intel 64”, but essentially it is the same architecture. A program compiled for it doesn’t run on a 32-bit processor or a 32-bit OS. Everything needs to be 64-bit in order to fit. It will not necessarily work faster, and it will consume more memory, but it is our future. This big boy doesn’t play well with his 32-bit friends (a 64-bit process cannot load 32-bit DLLs).

Itanium

Someone at Intel had a thought: let’s have a pure and modern 64-bit design without any compatibility worries. Let’s make it work as fast and efficiently as possible. The IA-64 architecture was born. Unfortunately, it was born in the wrong age. 32-bit applications ruled the world back then, and this processor was very slow in x86 compatibility mode. This one is a lone wolf: it doesn’t play well with either x86 or x64 programs.

AnyCPU

This is the chameleon of all targets. If you run it on a 32-bit system, it will work as x86. If you run it on x64, you get a program that looks like it was compiled for it. It looks native on Itanium as well. But (there is always a but) its process has the same limitations as the platform it is pretending to be.

This is done through the miracle of IL: the assembly contains no native code, and the JIT compiler turns IL into instructions for whatever the underlying architecture really is.
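To see which disguise an AnyCPU build is wearing on a given machine, a minimal sketch like the one below can help. The class name `BitnessCheck` is made up for illustration, and `Environment.Is64BitProcess` and `Environment.Is64BitOperatingSystem` assume .NET Framework 4.0 or later.

```csharp
using System;

class BitnessCheck
{
    static void Main()
    {
        // IntPtr.Size is 4 in a 32-bit process and 8 in a 64-bit process.
        Console.WriteLine("Pointer size:   {0} bytes", IntPtr.Size);

        // Both properties are available since .NET Framework 4.0.
        Console.WriteLine("64-bit process: {0}", Environment.Is64BitProcess);
        Console.WriteLine("64-bit OS:      {0}", Environment.Is64BitOperatingSystem);
    }
}
```

Build it once as x86 and once as AnyCPU and run both on a 64-bit OS to compare. Keep in mind that newer Visual Studio versions offer a "Prefer 32-bit" option for AnyCPU executables, which makes the process run as 32-bit even on a 64-bit OS.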

There is also a small price to pay. If you, as a programmer, work on a 32-bit system, all your calls to the non-.NET world (e.g. Win32 API) are done in 32 bits. However, if you run your program on a 64-bit system, all your pointers get the 64-bit treatment. For those not watching carefully (e.g. using an integer where a pointer is needed), the world may end up collapsing.

This problem is more visible in VB.NET than in C# because of code reuse (and they told you that is a good thing). A lot of VB.NET programmers have a background in VB 6, and there is a lot of API code written for it. The problem was that there were no pointers there. People used Long instead of a pointer, since both were 32-bit numbers. Everything worked. Then VB.NET came, Long became Integer, and everything still worked. At least in the 32-bit world. When executed as a 64-bit process (the target was either x64 or AnyCPU on a 64-bit system), problems occurred since four bytes of every pointer were missing. Those bugs can sometimes be very hard to catch.
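The same trap can be sketched in C#. The example below is only an illustration and is Windows-only: it declares the Win32 function `GetModuleHandleW` twice, once with an `int` return value (the old habit) and once with `IntPtr`. The class name and the `GetModuleHandleTruncated` alias are made up for this sketch.

```csharp
using System;
using System.Runtime.InteropServices;

class PointerSizedHandles
{
    // Risky: the returned module handle is pointer-sized, so an int
    // keeps only its lower 32 bits in a 64-bit process.
    [DllImport("kernel32.dll", EntryPoint = "GetModuleHandleW", CharSet = CharSet.Unicode)]
    static extern int GetModuleHandleTruncated(string moduleName);

    // Safer: IntPtr is 4 bytes in a 32-bit process and 8 bytes in a 64-bit one.
    [DllImport("kernel32.dll", EntryPoint = "GetModuleHandleW", CharSet = CharSet.Unicode)]
    static extern IntPtr GetModuleHandle(string moduleName);

    static void Main()
    {
        // Passing null asks for the handle of the current executable.
        IntPtr full = GetModuleHandle(null);
        int truncated = GetModuleHandleTruncated(null);

        Console.WriteLine("IntPtr: 0x{0:X}", full.ToInt64());
        Console.WriteLine("int:    0x{0:X}", truncated);
    }
}
```

In a 32-bit process both lines print the same value. In a 64-bit process they may differ whenever the module is loaded above the 4 GB boundary, and nothing warns you about it. The same applies to `Declare` statements in VB.NET: anything that is a handle or pointer on the Win32 side should be an `IntPtr`.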

Which one to use

I use AnyCPU unless I need to work with some component that has no 64-bit version. I know that 64-bit processes use more memory (all pointers are double in size) and that there is no real speed benefit, but if the customer opted for a 64-bit OS, he had some reason for it.

I see no point in overriding his choice and avoiding the future. I view it like the 16-bit to 32-bit transition. Not all programs needed it, but it was inevitable. Just do it in 64 bits.