This will be just a short example of using the Windows 7 virtual disk API functions. Although I have already given a full example, quite a few people asked me for a shorter one that illustrates only the operations used most often: open and attach.

Here it is:

```csharp
string fileName = @"C:\test.vhd";
IntPtr handle = IntPtr.Zero;

// Open an existing VHD file.
var openParameters = new OPEN_VIRTUAL_DISK_PARAMETERS();
openParameters.Version = OPEN_VIRTUAL_DISK_VERSION.OPEN_VIRTUAL_DISK_VERSION_1;
openParameters.Version1.RWDepth = OPEN_VIRTUAL_DISK_RW_DEPTH_DEFAULT;

var openStorageType = new VIRTUAL_STORAGE_TYPE();
openStorageType.DeviceId = VIRTUAL_STORAGE_TYPE_DEVICE_VHD;
openStorageType.VendorId = VIRTUAL_STORAGE_TYPE_VENDOR_MICROSOFT;

int openResult = OpenVirtualDisk(ref openStorageType, fileName, VIRTUAL_DISK_ACCESS_MASK.VIRTUAL_DISK_ACCESS_ALL, OPEN_VIRTUAL_DISK_FLAG.OPEN_VIRTUAL_DISK_FLAG_NONE, ref openParameters, ref handle);
if (openResult != ERROR_SUCCESS) {
    throw new InvalidOperationException(string.Format(CultureInfo.InvariantCulture, "Native error {0}.", openResult));
}

try {
    // Attach it. PERMANENT_LIFETIME keeps the disk attached after the handle is closed;
    // without that flag the disk is detached once the last handle to it is closed.
    var attachParameters = new ATTACH_VIRTUAL_DISK_PARAMETERS();
    attachParameters.Version = ATTACH_VIRTUAL_DISK_VERSION.ATTACH_VIRTUAL_DISK_VERSION_1;

    int attachResult = AttachVirtualDisk(handle, IntPtr.Zero, ATTACH_VIRTUAL_DISK_FLAG.ATTACH_VIRTUAL_DISK_FLAG_PERMANENT_LIFETIME, 0, ref attachParameters, IntPtr.Zero);
    if (attachResult != ERROR_SUCCESS) {
        throw new InvalidOperationException(string.Format(CultureInfo.InvariantCulture, "Native error {0}.", attachResult));
    }
} finally {
    // Release the handle even if attaching failed.
    CloseHandle(handle);
}

System.Windows.Forms.MessageBox.Show("Disk is attached.");
```

Of course, for this to work, a few P/Invoke definitions are needed:

```csharp
// At the top of the file:
using System;
using System.Globalization;
using System.Runtime.InteropServices;

// Inside the same class as the code above:
public const Int32 ERROR_SUCCESS = 0;
public const int OPEN_VIRTUAL_DISK_RW_DEPTH_DEFAULT = 1;
public const int VIRTUAL_STORAGE_TYPE_DEVICE_VHD = 2;
public static readonly Guid VIRTUAL_STORAGE_TYPE_VENDOR_MICROSOFT = new Guid("EC984AEC-A0F9-47e9-901F-71415A66345B");

[Flags]
public enum ATTACH_VIRTUAL_DISK_FLAG : int {
    ATTACH_VIRTUAL_DISK_FLAG_NONE = 0x00000000,
    ATTACH_VIRTUAL_DISK_FLAG_READ_ONLY = 0x00000001,
    ATTACH_VIRTUAL_DISK_FLAG_NO_DRIVE_LETTER = 0x00000002,
    ATTACH_VIRTUAL_DISK_FLAG_PERMANENT_LIFETIME = 0x00000004,
    ATTACH_VIRTUAL_DISK_FLAG_NO_LOCAL_HOST = 0x00000008
}

public enum ATTACH_VIRTUAL_DISK_VERSION : int {
    ATTACH_VIRTUAL_DISK_VERSION_UNSPECIFIED = 0,
    ATTACH_VIRTUAL_DISK_VERSION_1 = 1
}

[Flags]
public enum OPEN_VIRTUAL_DISK_FLAG : int {
    OPEN_VIRTUAL_DISK_FLAG_NONE = 0x00000000,
    OPEN_VIRTUAL_DISK_FLAG_NO_PARENTS = 0x00000001,
    OPEN_VIRTUAL_DISK_FLAG_BLANK_FILE = 0x00000002,
    OPEN_VIRTUAL_DISK_FLAG_BOOT_DRIVE = 0x00000004
}

public enum OPEN_VIRTUAL_DISK_VERSION : int {
    OPEN_VIRTUAL_DISK_VERSION_1 = 1
}

[StructLayout(LayoutKind.Sequential, CharSet = CharSet.Unicode)]
public struct ATTACH_VIRTUAL_DISK_PARAMETERS {
    public ATTACH_VIRTUAL_DISK_VERSION Version;
    public ATTACH_VIRTUAL_DISK_PARAMETERS_Version1 Version1;
}

[StructLayout(LayoutKind.Sequential, CharSet = CharSet.Unicode)]
public struct ATTACH_VIRTUAL_DISK_PARAMETERS_Version1 {
    public Int32 Reserved;
}

[StructLayout(LayoutKind.Sequential, CharSet = CharSet.Unicode)]
public struct OPEN_VIRTUAL_DISK_PARAMETERS {
    public OPEN_VIRTUAL_DISK_VERSION Version;
    public OPEN_VIRTUAL_DISK_PARAMETERS_Version1 Version1;
}

[StructLayout(LayoutKind.Sequential, CharSet = CharSet.Unicode)]
public struct OPEN_VIRTUAL_DISK_PARAMETERS_Version1 {
    public Int32 RWDepth;
}

[Flags]
public enum VIRTUAL_DISK_ACCESS_MASK : int {
    VIRTUAL_DISK_ACCESS_ATTACH_RO = 0x00010000,
    VIRTUAL_DISK_ACCESS_ATTACH_RW = 0x00020000,
    VIRTUAL_DISK_ACCESS_DETACH = 0x00040000,
    VIRTUAL_DISK_ACCESS_GET_INFO = 0x00080000,
    VIRTUAL_DISK_ACCESS_CREATE = 0x00100000,
    VIRTUAL_DISK_ACCESS_METAOPS = 0x00200000,
    VIRTUAL_DISK_ACCESS_READ = 0x000d0000,
    VIRTUAL_DISK_ACCESS_ALL = 0x003f0000,
    VIRTUAL_DISK_ACCESS_WRITABLE = 0x00320000
}

[StructLayout(LayoutKind.Sequential, CharSet = CharSet.Unicode)]
public struct VIRTUAL_STORAGE_TYPE {
    public Int32 DeviceId;
    public Guid VendorId;
}

[DllImport("virtdisk.dll", CharSet = CharSet.Unicode)]
public static extern Int32 AttachVirtualDisk(IntPtr VirtualDiskHandle, IntPtr SecurityDescriptor, ATTACH_VIRTUAL_DISK_FLAG Flags, Int32 ProviderSpecificFlags, ref ATTACH_VIRTUAL_DISK_PARAMETERS Parameters, IntPtr Overlapped);

[DllImport("kernel32.dll", SetLastError = true)]
[return: MarshalAs(UnmanagedType.Bool)]
public static extern Boolean CloseHandle(IntPtr hObject);

[DllImport("virtdisk.dll", CharSet = CharSet.Unicode)]
public static extern Int32 OpenVirtualDisk(ref VIRTUAL_STORAGE_TYPE VirtualStorageType, String Path, VIRTUAL_DISK_ACCESS_MASK VirtualDiskAccessMask, OPEN_VIRTUAL_DISK_FLAG Flags, ref OPEN_VIRTUAL_DISK_PARAMETERS Parameters, ref IntPtr Handle);
```

I think that this is as short as it gets without hard-coding values too much.

If you hate copy/paste, you can download this code sample. Notice that this code only opens (and attaches) an existing virtual disk. If you want to create one or look at more details, check the full code sample.

P.S. This code works with Windows 7 RC, but not with the beta (the API changed in the meantime).

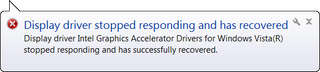

P.P.S. If you get a “Native error 1314.” exception, you did not run the code as a user with administrative rights. If you get “Native error 32.”, the virtual disk is already attached; just go to the Disk Management console and select Detach VHD from the right-click menu.
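Both codes are standard Win32 error values: 1314 is `ERROR_PRIVILEGE_NOT_HELD` and 32 is `ERROR_SHARING_VIOLATION`. If you would rather have the exception say what went wrong, a minimal sketch is a small lookup like the one below. `DescribeNativeError` is a hypothetical helper of my own (it is not part of the virtual disk API), and the hint texts just restate the explanations above. It is written with C# top-level statements, so it assumes a recent compiler.

```csharp
using System;
using System.Globalization;

// Hypothetical helper: turns a result code from OpenVirtualDisk or AttachVirtualDisk
// into a message with a hint. Only the codes discussed in this post get a hint;
// anything else falls back to the same "Native error N." text the sample uses.
static string DescribeNativeError(int code) => code switch
{
    0 => "Success.", // ERROR_SUCCESS
    32 => "Native error 32: the virtual disk is probably already attached; detach it first.", // ERROR_SHARING_VIOLATION
    1314 => "Native error 1314: run the code as a user with administrative rights.", // ERROR_PRIVILEGE_NOT_HELD
    _ => string.Format(CultureInfo.InvariantCulture, "Native error {0}.", code)
};

// Example: print the hint for a sharing violation.
Console.WriteLine(DescribeNativeError(32));
```

In the sample you could then throw `new InvalidOperationException(DescribeNativeError(openResult))` instead of formatting the number yourself. On Windows, `new System.ComponentModel.Win32Exception(code).Message` is another option that gives you the system's own description for any code.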