If your ISP offers IPv6 and you have a Mikrotik router, it would be a shame not to make use of it. My setup assumes you get a /64 prefix from your ISP (Comcast in my case) via DHCPv6 and that you start with an empty IPv6 configuration.

First, I like to disable the default neighbor discovery entry. Blasting IPv6 router advertisements on all interfaces is not necessarily a good idea:

/ipv6 nd

set [ find default=yes ] disabled=yes

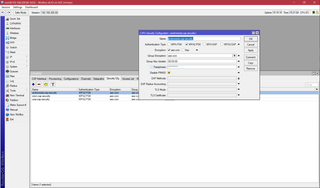

The next step is to set up the DHCPv6 client. Within a few seconds, you should see the prefix being allocated:

/ipv6 dhcp-client

add add-default-route=yes interface=ether1 pool-name=general-pool6 request=prefix

:delay 5s

print

Flags: D - dynamic, X - disabled, I - invalid

# INTERFACE STATUS REQUEST PREFIX

0 ether1 bound prefix 2601:600:9780:ee2c::/64, 3d14h41m41s
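To double-check that the delegated prefix actually landed in the pool, something like the following can be run. This is only a sketch: it assumes the pool name general-pool6 used above, and the exact columns shown will vary between RouterOS versions.

```
# list IPv6 pools; general-pool6 should appear as a dynamic entry holding the delegated /64
/ipv6 pool print

# with add-default-route=yes, a dynamic ::/0 route via the ISP gateway should be listed
/ipv6 route print
```

If the pool is missing, the client most likely never reached the bound state, so it is worth looking at the DHCP client status again before going further.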

At this point I like to assign the address ending in ::1 to the router itself:

/ipv6 address

add address=::1 from-pool=general-pool6 interface=bridge1 advertise=yes

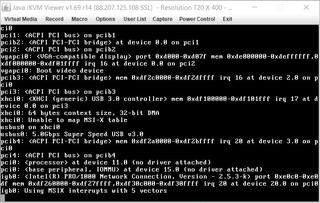

Now it should be possible to ping the router's address from an external computer (in this example, the address would be 2601:600:9780:ee2c::1). If this doesn't work, check whether the router has link-local addresses. If none are present, reboot the router and they will be regenerated.
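Both checks can also be done from the router's own terminal. This is a minimal sketch, assuming the interface names used above. The ping target is Google's public DNS resolver, picked only because it is a well-known IPv6 host; any reachable IPv6 address will do.

```
# the list should contain the global ...::1 address on bridge1 plus fe80:: link-local entries
/ipv6 address print

# outbound reachability test from the router itself
/ping 2001:4860:4860::8888 count=4
```

If the global address shows up but the ping fails, the default route from the DHCP client is the next thing I would look at.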

With the router reachable, it is time to hand out the IPv6 prefix to internal machines too. For this purpose, set up RA (router advertisement) over the bridge. While the default interval settings are just fine, I like to make them a bit shorter (20-60 seconds):

/ipv6 nd

add interface=bridge1 ra-interval=20s-60s
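To see whether the advertisements are set up as intended, the following read-only commands may help. Treat this as a sketch under the assumptions above (bridge1 as the LAN interface); the output layout depends on the RouterOS version.

```
# the bridge1 entry should be listed with the shortened RA interval
/ipv6 nd print

# prefixes being advertised; the /64 taken from the pool should appear here
/ipv6 nd prefix print

# IPv6 neighbors seen so far, which should include clients on the bridge
/ipv6 neighbor print
```

On the client side, a new global address starting with the same /64 (2601:600:9780:ee2c: in this example) is the sign that autoconfiguration worked.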

And that’s all. Now the computers behind your router will have a direct IPv6 route to the Internet. Do not forget to set up both the router firewall and the firewall on individual devices. There is no NAT to save your butt here.

PS: Here is a basic IPv6 firewall that allows all outgoing connections while letting only established connections back in:

/ipv6 firewall filter

add chain=input action=drop connection-state=invalid comment="Drop (invalid)"

add chain=input action=accept connection-state=established,related comment="Accept (established, related)"

add chain=input action=accept in-interface=ether1 protocol=udp src-port=547 limit=10,20:packet comment="Accept DHCP (10/sec)"

add chain=input action=drop in-interface=ether1 protocol=udp src-port=547 comment="Drop DHCP (>10/sec)"

add chain=input action=accept in-interface=ether1 protocol=icmpv6 limit=10,20:packet comment="Accept external ICMP (10/sec)"

add chain=input action=drop in-interface=ether1 protocol=icmpv6 comment="Drop external ICMP (>10/sec)"

add chain=input action=accept in-interface=!ether1 protocol=icmpv6 comment="Accept internal ICMP"

add chain=input action=drop in-interface=ether1 comment="Drop external"

add chain=input action=reject comment="Reject everything else"

add chain=output action=accept comment="Accept all"

add chain=forward action=drop connection-state=invalid comment="Drop (invalid)"

add chain=forward action=accept connection-state=established,related comment="Accept (established, related)"

add chain=forward action=accept in-interface=ether1 protocol=icmpv6 limit=20,50:packet comment="Accept external ICMP (20/sec)"

add chain=forward action=drop in-interface=ether1 protocol=icmpv6 comment="Drop external ICMP (>20/sec)"

add chain=forward action=accept in-interface=!ether1 comment="Accept internal"

add chain=forward action=accept out-interface=ether1 comment="Accept outgoing"

add chain=forward action=drop in-interface=ether1 comment="Drop external"

add chain=forward action=reject comment="Reject everything else"
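Since rules are evaluated top to bottom, it is worth checking afterwards that they ended up in the order shown and that traffic is hitting the ones you expect. A minimal sketch, assuming the rules above are the only ones in the table:

```
# list the rules in evaluation order
/ipv6 firewall filter print

# show per-rule packet and byte counters
/ipv6 firewall filter print stats
```

After browsing from an internal machine for a bit, the "Accept (established, related)" rule in the forward chain should show by far the highest counters, while the drop rules on ether1 slowly collect unsolicited traffic.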