Rolling Your Own

Probably every programmer has gone through a phase of developing their own encryption algorithm. It was probably early in their professional life, right after they learned about XOR and the magic it does. Most programmers realize soon after that they are not cryptographers and that their algorithm is shitty at best. Those who don’t usually end up working on DRM (and those things are never broken, are they?).

Professional programmers know that any person can invent a security system so clever that she or he can’t think of how to break it. They rely heavily on published standards and make their applications work accordingly. Cryptographers take care of the encryption algorithms, programmers take care of the implementation, and the world is a more secure place.

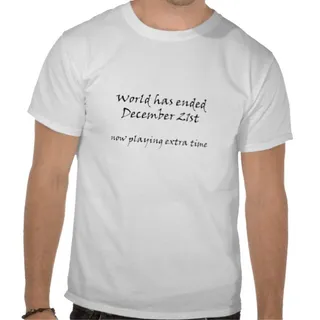

But it makes me wonder: are we approaching this all wrong? In a spy-happy world where the NSA seems to influence security standards and where bulk decryption seems to be a reality, I would argue that rolling your own encryption has some benefits.

Since bulk collection relies on all data being in a similar format, anything you can do to foil this effectively makes you invisible to it. Let’s assume that AES is broken (don’t worry; it is not). Anyone relying on standard AES would be affected. But if some wise-ass just XORed his data with 0xAA, there is a high probability that it would slip past the collection.
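To make the 0xAA example concrete, here is a minimal Python sketch of that kind of single-byte XOR, together with the 256-try brute force that undoes it. The function names and the sample message are made up for illustration; the point is only that such a scheme dodges an automated pipeline that expects a standard format, not a person who bothers to look.

```python
def xor_obfuscate(data: bytes, key: int = 0xAA) -> bytes:
    """XOR every byte with a single-byte key. Applying it twice restores the input."""
    return bytes(b ^ key for b in data)


def brute_force_single_byte_xor(blob: bytes, known_fragment: bytes) -> list:
    """Try all 256 keys and return those whose output contains a guessed plaintext fragment."""
    return [k for k in range(256) if known_fragment in xor_obfuscate(blob, k)]


# Hypothetical message, used only for this demonstration.
secret = b"meet me at the usual place"
blob = xor_obfuscate(secret)

# Anyone who guesses that the text contains "the" recovers the key almost instantly.
candidates = brute_force_single_byte_xor(blob, b"the")
```

The same function both hides and reveals the data, and `candidates` will include `0xAA`, which is about all the effort a human attacker needs to spend.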

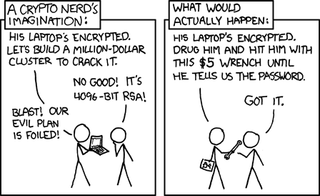

Mind you, stupid encryption is still stupid. If you are targeted by the NSA, there is a high probability that they will get the data regardless of what you do. If you are using some homegrown encryption, it will be broken. However, they will be unable to take this data in an automated manner. Enough people doing this would mean they need to dedicate human resources to every shitty algorithm out there. And you are probably not important enough to warrant such attention.

A smarter choice would probably be to use two encryption algorithms, back to back. You can use Rijndael to encrypt the data once, then use another key (maybe derived via Tiger) with Twofish. I am quite comfortable saying that this encryption will not be broken by any automated means. The system might have huge gaping holes, but it will require a human to find them.
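The back-to-back idea can be sketched roughly as follows. Python's standard library ships neither Rijndael, Twofish, nor Tiger, so this sketch swaps in stand-ins and should be read as an illustration of the layering only: two toy hash-based keystreams take the place of the two ciphers, and PBKDF2 with two different hashes takes the place of the key derivation. All function names, the salt handling, and the iteration count are my assumptions, and nothing here authenticates the data.

```python
import hashlib
import os


def keystream_xor(data: bytes, key: bytes, nonce: bytes, hash_fn) -> bytes:
    """Toy stream cipher: XOR data with hash(key || nonce || counter) blocks.
    Stand-in for a real cipher such as Rijndael or Twofish; illustration only."""
    stream = bytearray()
    counter = 0
    while len(stream) < len(data):
        stream.extend(hash_fn(key + nonce + counter.to_bytes(8, "big")).digest())
        counter += 1
    return bytes(a ^ b for a, b in zip(data, stream))


def derive_keys(password: bytes, salt: bytes):
    """Derive one key per layer, each with a different hash (stand-in for the Tiger idea)."""
    k1 = hashlib.pbkdf2_hmac("sha256", password, salt + b"layer-1", 100_000)
    k2 = hashlib.pbkdf2_hmac("sha512", password, salt + b"layer-2", 100_000, dklen=32)
    return k1, k2


def cascade_encrypt(plaintext: bytes, password: bytes) -> bytes:
    salt = os.urandom(16)
    k1, k2 = derive_keys(password, salt)
    inner = keystream_xor(plaintext, k1, salt, hashlib.sha256)   # first layer
    outer = keystream_xor(inner, k2, salt, hashlib.sha3_256)     # second layer
    return salt + outer


def cascade_decrypt(blob: bytes, password: bytes) -> bytes:
    salt, outer = blob[:16], blob[16:]
    k1, k2 = derive_keys(password, salt)
    inner = keystream_xor(outer, k2, salt, hashlib.sha3_256)     # undo second layer
    return keystream_xor(inner, k1, salt, hashlib.sha256)        # undo first layer
```

Calling `cascade_decrypt(cascade_encrypt(data, password), password)` returns the original `data`. The design choice worth noting is that each layer gets its own key, so a break of one layer does not hand over the key for the other.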

Of course, once you start doing your own “twist” on an encryption method, you suddenly become completely incompatible with all the other “twists” out there. Implementations will become slower (yep, double encrypting stuff costs). Implementing two encryption algorithms will not really protect you against a targeted attack where, e.g., a trojan is used to steal your password and circumvent all that encryption. Nobody will bother to do cryptanalysis on your exact combination, so you are pretty much flying in the dark. And there is probably another bad thing or two I forgot.

However, there is something attractive in rolling your own encryption using standardized ciphers as building blocks for data you deem important (e.g. password storage). Not only is it an interesting defense, but it also gives you the enjoyment of doing something you know you shouldn’t.

PS: Never take cryptography advice from a random guy on the Internet.