Native ZFS Encryption Speed (Ubuntu 22.10)

There is a newer version of this post.

I guess it’s that time of year when I do ZFS encryption testing on the latest Ubuntu. Is ZFS speed better, worse, or the same?

Like last time, I tested on a Framework laptop with an i5-1135G7 processor and 64GB of RAM. The only change is that I am writing a bit more data during the test this time. Regardless, this is still mostly an exercise in relative numbers. Overall, the procedure is basically the same as before.

With all that out of the way, you can probably just look at the 22.04 figures and be done. While there are some minor differences between 22.10 and 22.04, there is nothing big enough to change any recommendation here. Even the ZFS version reflects this, as we see only a tiny bump from zfs-2.1.2-1ubuntu2 to zfs-2.1.5-1ubuntu2.

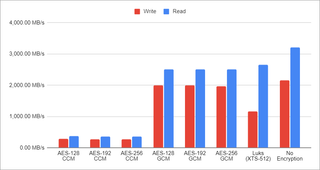

ZFS GCM is still the fastest when it comes to writing, and it wins over LUKS by a wide margin. It was a surprise when I saw it with 22.04 and it’s a surprise still. The surprise is not that ZFS is fast but that LUKS is so slow. When it comes to reading speed, LUKS is still faster, but only slightly.

All else being equal, I would say ZFS GCM is a clear winner here, with LUKS coming a close second if you don’t mind slower write speed. I would expect both to be indistinguishable in real-world scenarios most of the time.

Using CCM encryption doesn’t make much sense at all. While encryption speed seems to benefit from disabling AES instructions (part of which I suspect to be an artefact of my test environment), it’s just a smidgen faster than GCM. Considering that any future upgrade is going to bring the AES instruction set, I would say GCM is the way forward.
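If you are not sure whether your own machine has the AES instruction set, here is a minimal sketch of one way to check. It assumes an x86 Linux system where `/proc/cpuinfo` lists CPU features on `flags` lines; the function names are my own and this is not part of the test procedure.

```python
def cpu_has_aes(cpuinfo_text: str) -> bool:
    """Return True if any 'flags' line in the given cpuinfo text lists 'aes'."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        # Only look at 'flags' lines; other lines (e.g. 'model name') are ignored.
        if sep and key.strip() == "flags":
            if "aes" in value.split():
                return True
    return False


def local_cpu_has_aes(path: str = "/proc/cpuinfo") -> bool:
    """Check the local machine (assumption: x86 Linux with /proc/cpuinfo)."""
    with open(path, encoding="utf-8") as f:
        return cpu_has_aes(f.read())
```

Calling `local_cpu_has_aes()` on a machine like the one used here should tell you whether the hardware-accelerated path is even available before you start comparing GCM and CCM.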

Of course, the elephant in the room is the fact that native ZFS encryption doesn’t actually cover all metadata. The only alternative that can help you with that is running ZFS on top of LUKS encryption. I honestly go back and forth between the two, with my current preference being LUKS on a laptop and native ZFS encryption on servers, where encrypted send/receive is a killer feature.
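For those who haven't used it, encrypted send/receive means sending a snapshot in its raw, still-encrypted form, so the receiving side never needs the key. A rough sketch of what such a pipeline looks like follows. The helper function, dataset names, and host name are all hypothetical, and it only builds the command line rather than running anything.

```python
import shlex


def raw_send_pipeline(snapshot: str, ssh_host: str, target: str) -> str:
    """Build a shell pipeline that replicates an encrypted snapshot as-is."""
    # --raw (short form: -w) sends the blocks exactly as stored on disk,
    # so encrypted data stays encrypted in transit and on the target.
    send = ["zfs", "send", "--raw", snapshot]
    # The receiving pool stores the dataset without ever loading the key.
    receive = ["ssh", ssh_host, "zfs", "receive", target]
    return f"{shlex.join(send)} | {shlex.join(receive)}"


# Hypothetical names, for illustration only.
print(raw_send_pipeline("tank/data@monday", "backup.example.com", "backup/data"))
```

The names above are simple enough that no quoting is needed. Anything with spaces or shell metacharacters would need more care, since ssh hands the remote part to another shell.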

You can take a peek at the raw data and draw your own conclusions. As always, keep in mind that these are limited synthetic tests intended only to give you a ballpark figure. Your mileage may vary.