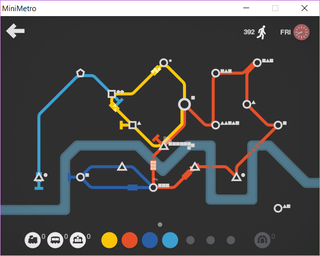

Mini Metro

I don’t play often, nor do I have long gaming sessions anymore. Yes, I do play the occasional multiplayer game with my kids, but solo plays are few and far between. Most of the games I buy are old ones I get for nostalgic reasons; rarely do I buy a new game. Yep, definitely, I’m getting old.

However, these holidays one game piqued my curiosity. Same as with FTL, something simply “clicked” and, at a reasonable $10 on GoG.com, I saw no reason not to try it out. And boy, is this game fun.

The premise is simple - you create subway lines to keep various shapes moving to their destinations. With time your city grows, you get more lines to deal with, and eventually you fail. If you got emotionally attached to your town, you can continue playing even after you lose, or you can decide to call it quits. The game is about as simple as you can make it while still being interesting. But it is loads of fun.

And the game is completely DRM-free and needs no Internet connection. It might be just a pet peeve of mine, but I hate when a game requires Internet for no good reason (yes, Starcraft II, I am looking at you) and when I cannot play a game on whichever computer I feel like. Yes, DRM can be done right - Torchlight II is a nice example of a game whose DRM is minimally invasive. But there is something better than well-implemented DRM, and that is no DRM at all. And any game that treats its users fairly deserves to be bought.