POST Cannot Be Redirected

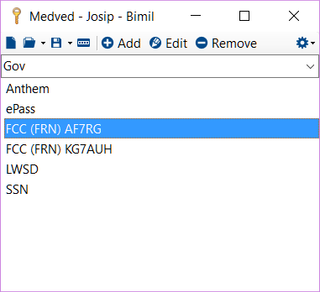

A few days ago I found a bug in a program of mine. Since I have feedback built into most of my programs, I decided to use it for once. And failed. All I got was an error message.

A bit of troubleshooting later, I had narrowed the problem down. It worked perfectly well over HTTPS but failed over HTTP. It also failed when redirected from my old domain, whether over HTTP or HTTPS. And the failure was a rather unusual error code, 418. That is a code I often use to signal that something is wrong with redirects. A bit of digging later, I noticed I was using the POST method for my error reporting (duh!).

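You cannot simply redirect POST requests. With the usual 301 or 302 redirect, most clients repeat the request as a GET and drop the body; only 307 and 308 tell the client to keep the method. And I was doing server-side redirecting (or trying to) from my old domain to the new one and from HTTP to HTTPS.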
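To make the failure easier to picture, here is a rough sketch of how a typical client picks the method for the follow-up request after a redirect. It models common browser and HTTP library behavior, not my actual server script, and the function name `method_after_redirect` is made up for illustration.

```python
def method_after_redirect(status: int, method: str) -> str:
    """Return the method a typical client uses when following a redirect.

    This is a simplified model of common client behavior, not a full
    implementation of the HTTP specification.
    """
    method = method.upper()
    if status == 303:
        # "See Other": fetch the target with GET (HEAD stays HEAD).
        return "HEAD" if method == "HEAD" else "GET"
    if status in (301, 302):
        # Historical behavior: POST is rewritten to GET and the body is lost.
        return "GET" if method == "POST" else method
    if status in (307, 308):
        # These codes tell the client to repeat the request unchanged.
        return method
    raise ValueError(f"{status} is not a redirect status handled here")


if __name__ == "__main__":
    for code in (301, 302, 303, 307, 308):
        print(code, "POST ->", method_after_redirect(code, "POST"))
```

If this model matches the client in question, an error report sent as POST to the old address arrives at the new one as a GET with no data, unless the server answers with 307 or 308.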

In the end I changed all my programs to use HTTPS, temporarily disabled redirecting to allow HTTP-only connections, and had to re-enable the same script on my old site so I can still get error reports from old versions that were never updated. I knew this last domain move had gone too smoothly…