VPN-only Internet Access on Linux Mint 18.3 Via Private Internet Access

Setting up the Private Internet Access VPN is usually not a problem these days, as a Linux version is readily available among the supported clients. However, that installation requires a GUI. What if we don’t want or need one?

For a setup that works independently of the GUI, one approach is to use the OpenVPN client, which is usually installed by default. Also needed are PIA’s IP-based OpenVPN configuration files. While this might cause issues down the road if the server’s IP changes, it does help a lot with security, as we won’t need to poke an unencrypted hole (and thus leak information) for DNS.

From the downloaded archive, extract the .crt and .pem files, followed by the .ovpn file of your choice (usually the one physically closest to you). Copy them all to your desktop to be used later; take only one .ovpn file, since the commands below rename it to client.conf. Yes, you can use any other directory. The desktop is just the one I’ll use in the example commands below.

The rest of the VPN configuration is done from Bash (replace username and password with actual values):
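Since the whole approach depends on the configuration pointing at an IP address rather than a hostname, it can be worth checking the chosen file before going further. The following is a minimal sketch; `remote_is_ipv4` is a hypothetical helper name, and it only looks at the first `remote` line and only recognizes dotted IPv4 notation.

```shell
# Succeeds when the first "remote" line of an OpenVPN config names an
# IPv4 literal rather than a hostname. Hypothetical helper, not part of
# OpenVPN or PIA's tooling.
remote_is_ipv4() {
    # Print the second field of the first "remote" line, then test its shape.
    awk '$1 == "remote" { print $2; exit }' "$1" |
        grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}$'
}

# Usage (the file name is a placeholder for whichever .ovpn you picked):
#   remote_is_ipv4 "$HOME/Desktop/chosen.ovpn" || echo "remote is not an IP"
```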

sudo mv ~/Desktop/*.crt /etc/openvpn/

sudo mv ~/Desktop/*.pem /etc/openvpn/

sudo mv ~/Desktop/*.ovpn /etc/openvpn/client.conf

sudo sed -i "s|^ca |ca /etc/openvpn/|" /etc/openvpn/client.conf

sudo sed -i "s|^crl-verify |crl-verify /etc/openvpn/|" /etc/openvpn/client.conf

echo "auth-user-pass /etc/openvpn/client.login" | sudo tee -a /etc/openvpn/client.conf

echo "mssfix 1400" | sudo tee -a /etc/openvpn/client.conf

echo "dhcp-option DNS 209.222.18.218" | sudo tee -a /etc/openvpn/client.conf

echo "dhcp-option DNS 209.222.18.222" | sudo tee -a /etc/openvpn/client.conf

echo "script-security 2" | sudo tee -a /etc/openvpn/client.conf

echo "up /etc/openvpn/update-resolv-conf" | sudo tee -a /etc/openvpn/client.conf

echo "down /etc/openvpn/update-resolv-conf" | sudo tee -a /etc/openvpn/client.conf
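Appending lines to client.conf adds duplicates whenever the step is repeated. If you expect to rerun it, a small guard can help. This is only a sketch: `append_once` is a hypothetical helper, and it assumes the script itself runs as root (for example via `sudo sh script.sh`), because the `>>` redirection is performed by the calling shell.

```shell
# Append a line to a file only if that exact line is not already present.
# Hypothetical helper; run as root when the target lives under /etc.
append_once() {
    line=$1
    file=$2
    # -x: match the whole line, -F: fixed string, -q: no output
    grep -qxF -- "$line" "$file" 2>/dev/null ||
        printf '%s\n' "$line" >> "$file"
}

# Usage:
#   append_once "mssfix 1400" /etc/openvpn/client.conf
#   append_once "script-security 2" /etc/openvpn/client.conf
```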

unset HISTFILE

echo '^^username^^' | sudo tee -a /etc/openvpn/client.login

echo '^^password^^' | sudo tee -a /etc/openvpn/client.login

sudo chmod 500 /etc/openvpn/client.login

To test the VPN connection, execute:

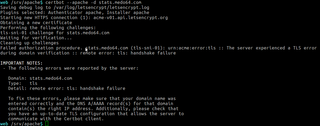

sudo openvpn --config /etc/openvpn/client.conf

Assuming the test was successful (i.e. it resulted in an Initialization Sequence Completed message), we can further make sure data is actually traversing the VPN. I’ve found whatismyipaddress.com quite helpful here. Just check whether the detected IP is different from the IP you usually get without the VPN.
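On a machine without a GUI there is no browser to open that page, so the same comparison can be done from a second terminal. The sketch below assumes `curl` is installed and uses https://api.ipify.org as the lookup service; both are my assumptions, and any endpoint that returns your address as plain text would do. The function names are hypothetical.

```shell
# Print the public IP address as seen by an external service.
# The api.ipify.org endpoint is an assumption; swap in any plain-text service.
public_ip() {
    curl -fsS --max-time 10 https://api.ipify.org
}

# Succeeds when both addresses are non-empty and differ from each other.
ip_changed() {
    [ -n "$1" ] && [ -n "$2" ] && [ "$1" != "$2" ]
}

# Usage:
#   before=$(public_ip)        # run while the VPN is down
#   ...start the test connection in another terminal...
#   ip_changed "$before" "$(public_ip)" && echo "traffic leaves via the VPN"
```

An empty result (for example when the lookup times out) is treated as a failure rather than as a changed address.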

Stop the test connection using Ctrl+C and proceed to configure OpenVPN’s auto-startup:

echo "AUTOSTART=all" | sudo tee -a /etc/default/openvpn

sudo reboot

Once the computer has booted and no further VPN issues have been observed, you can also look into blocking the default interface whenever the VPN is not active. Essentially this means traffic is either going through the VPN or not going at all.
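A quick way to confirm that the tunnel came up on its own after the reboot is to look for the tun0 interface. The sketch below checks the Linux sysfs listing under /sys/class/net; `iface_exists` is a hypothetical helper, and the `SYS_NET` override exists only so the function can be tried against a scratch directory.

```shell
# Succeeds when a network interface with the given name is present.
# SYS_NET is a hypothetical override used for testing; by default the
# function looks at the kernel's interface listing in /sys/class/net.
iface_exists() {
    [ -e "${SYS_NET:-/sys/class/net}/$1" ]
}

# Usage:
#   if iface_exists tun0; then
#       echo "tun0 is present"
#   else
#       echo "tun0 is missing, check the OpenVPN service"
#   fi
```

Presence of tun0 only shows that OpenVPN created the device; it does not by itself prove that traffic is routed through it.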

The firewall rules below allow data to flow only via the VPN’s tun0 interface. The single exception is the encrypted VPN traffic itself, which is allowed out of the default interface to the VPN server on UDP port 1198.

sudo ufw reset

sudo ufw default deny incoming

sudo ufw default deny outgoing

sudo ufw allow out on tun0

sudo ufw allow out on `route | grep '^default' | grep -v "tun0$" | grep -o '[^ ]*$'` proto udp to `cat /etc/openvpn/client.conf | grep "^remote " | grep -o ' [^ ]* '` port 1198

sudo ufw enable

This should give you quite a secure setup without the need for a GUI.
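The long ufw rule above packs two lookups into backticks: the name of the non-tunnel interface that carries the default route, and the server address from the `remote` line. If either lookup comes back empty, the resulting ufw command is malformed. A sketch that splits them into named steps follows; the function names are hypothetical, and unlike the original pipeline each one returns only the first match.

```shell
# Name of the interface carrying the default route, skipping the tunnel.
# Reads `route`-style output on stdin (interface name is the last column).
default_iface() {
    awk '$1 == "default" && $NF != "tun0" { print $NF; exit }'
}

# Address from the first "remote" line of an OpenVPN config file.
remote_addr() {
    awk '$1 == "remote" { print $2; exit }' "$1"
}

# Usage (as root); the rule is added only when both values were found:
#   iface=$(route | default_iface)
#   server=$(remote_addr /etc/openvpn/client.conf)
#   [ -n "$iface" ] && [ -n "$server" ] &&
#       ufw allow out on "$iface" proto udp to "$server" port 1198
```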