I am in love with my Windows 7 installation, but I occasionally need to venture into the dark alleys of system administration. With Hyper-V as bait, it is really hard to resist.

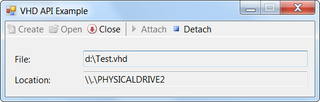

To keep everything simple, I don’t have a dedicated partition for Windows Server 2008 R2. I just create a VHD file and install into it. It behaves like a normal installation, but without all the hassle of repartitioning the disk.

I also found that the quickest way to do this is to fill the VHD directly from the install CD. After that, I just copy the existing boot loader entry and change it a little so that it boots from the VHD.

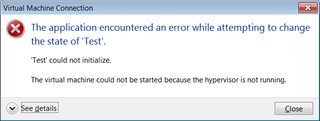
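The post does not list the exact changes made to the copied entry. A common way to do it with `bcdedit` is to copy the running entry with `/copy {current} /d "…"` and then point its `device` and `osdevice` elements at the VHD. The sketch below only builds those command lines. The helper names, the description text, and the `C:\VHD\Server2008R2.vhd` path are hypothetical placeholders, and this is not necessarily the exact edit the author made.

```python
import re
from pathlib import PureWindowsPath

# A BCD identifier, e.g. {0a1b2c3d-1111-2222-3333-444455556666}.
GUID_RE = re.compile(r"\{[0-9a-fA-F]{8}(?:-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}\}")


def copy_entry_command(description: str) -> list[str]:
    """bcdedit call that duplicates the running entry under a new description."""
    return ["bcdedit", "/copy", "{current}", "/d", description]


def guid_from_copy_output(output: str) -> str:
    """Extract the identifier of the new entry from the /copy output.

    Only the first {GUID} in the text is used, so this does not depend on
    the exact (possibly localized) wording of bcdedit's message.
    """
    match = GUID_RE.search(output)
    if match is None:
        raise ValueError("no entry identifier found in bcdedit output")
    return match.group(0)


def vhd_device_commands(guid: str, vhd_file: str) -> list[list[str]]:
    """bcdedit calls that make the copied entry boot from a VHD file.

    bcdedit expects vhd=[C:]\\path\\file.vhd: the drive letter in square
    brackets, followed by the path on that drive.
    """
    path = PureWindowsPath(vhd_file)
    if not path.drive:
        raise ValueError("VHD path must include a drive letter, e.g. C:\\VHD\\x.vhd")
    target = f"vhd=[{path.drive}]{str(path)[len(path.drive):]}"
    # Both the boot device and the OS device have to point inside the VHD.
    return [["bcdedit", "/set", guid, element, target] for element in ("device", "osdevice")]
```

On the Windows 7 side, each list could be passed to `subprocess.run(cmd, check=True, capture_output=True, text=True)` from an elevated prompt. Feed the `/copy` output to `guid_from_copy_output` to get the identifier for the two `/set` calls.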

After installing Windows Server 2008 R2 this way and then adding the Hyper-V role, everything seemed fine. Creating a virtual machine went without a hitch. Only when I tried to start it did I get “The application encountered an error while attempting to change the state of ‘Test’”. The third line held the actual issue: “The virtual machine could not be started because hypervisor is not running.” The details listed several possible causes, and one of them applied here: an incorrect boot configuration.

The underlying problem was that I had used the Windows 7 boot loader entry as the base for my Windows Server 2008 R2 entry. The two configurations are quite similar except for one crucial detail: the Windows 7 entry has no `hypervisorlaunchtype` value. Once you know that, it is not a big problem, and a simple command takes care of it:

```
bcdedit /set "{identifier}" hypervisorlaunchtype "auto"
The operation completed successfully.
```

The identifier is, of course, the GUID of the Windows Server 2008 R2 boot loader entry (you can find it with `bcdedit /v`). If you want to change the currently running instance, you can omit the identifier altogether.
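Finding the identifier and checking whether `hypervisorlaunchtype` is present can also be scripted. This minimal sketch assumes English-language `bcdedit /v` output in its usual layout: a heading such as "Windows Boot Loader", a dashed underline, then one `name value` pair per line, with entries separated by blank lines. The function names are hypothetical, and localized output would need different element names.

```python
from typing import Optional


def parse_bcdedit(output: str) -> list[dict[str, str]]:
    """Split English `bcdedit /v` output into one dict per entry."""
    entries: list[dict[str, str]] = []
    entry: Optional[dict[str, str]] = None
    last_key: Optional[str] = None
    for line in output.splitlines():
        text = line.strip()
        if not text:
            entry, last_key = None, None  # blank line closes the current entry
        elif set(text) == {"-"}:
            entry = {}  # dashed underline: the element list starts on the next line
            entries.append(entry)
        elif entry is None:
            continue  # heading text such as "Windows Boot Loader"
        elif line[0].isspace() and last_key is not None:
            entry[last_key] += " " + text  # continuation line, e.g. more displayorder GUIDs
        else:
            key, _, value = text.partition(" ")
            entry[key] = value.strip()
            last_key = key
    return entries


def hypervisor_setting(output: str, description: str) -> tuple[str, Optional[str]]:
    """Return (identifier, hypervisorlaunchtype) for the entry with this description.

    The second value is None when the element is missing, as in an entry
    copied from Windows 7.
    """
    for entry in parse_bcdedit(output):
        if entry.get("description") == description:
            return entry.get("identifier", ""), entry.get("hypervisorlaunchtype")
    raise LookupError(f"no boot entry with description {description!r}")
```

On Windows, the text could come from `subprocess.run(["bcdedit", "/v"], capture_output=True, text=True).stdout` in an elevated prompt. A `None` second value means the entry still needs the `bcdedit /set` command shown above.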

After one reboot, the virtual machine starts without problems.