Windows 10 releases are numerous. If you use the Microsoft Media Creation Tool to download ISOs, you know how hard it is to keep track of them. Fortunately, you can read the version information from the ISO file itself.

The first order of business is mounting the downloaded ISO file. That is as easy as double-clicking it.

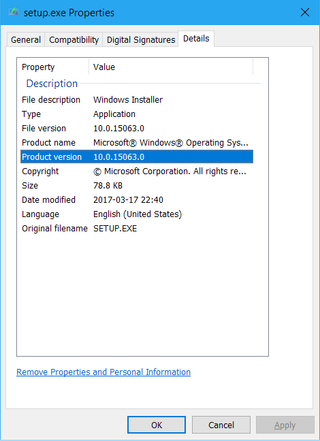

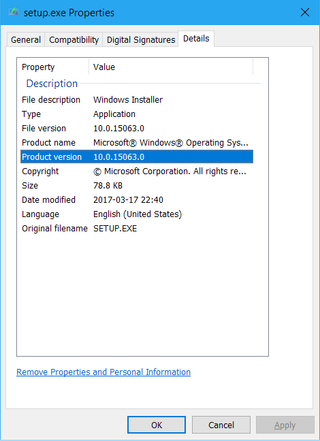

Then find setup.exe in the root of the mounted drive, right-click it, choose Properties, and open the Details tab. The Product version field contains the build number; in my case it was 15063.
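If you would rather not click through the Properties dialog, the same numbers can be read from the file. The Python sketch below is only a heuristic. It scans the file for the signature of the Windows `VS_FIXEDFILEINFO` structure (0xFEEF04BD) and decodes the product version fields that follow it. It assumes the first match is the real version resource. Also note that the Details tab shows the string version resource, which normally, but not necessarily, agrees with these numeric fields. The function names are hypothetical.

```python
import struct
from typing import Optional

# VS_FIXEDFILEINFO starts with the DWORD 0xFEEF04BD, stored little-endian in the file.
FIXED_INFO_SIGNATURE = struct.pack("<I", 0xFEEF04BD)


def product_version_from_bytes(data: bytes) -> Optional[str]:
    """Heuristic: decode the product version from the first VS_FIXEDFILEINFO found."""
    pos = data.find(FIXED_INFO_SIGNATURE)
    if pos < 0 or pos + 24 > len(data):
        return None  # no signature, or not enough bytes left for six DWORDs
    # First six DWORDs: signature, struct version, FileVersionMS/LS, ProductVersionMS/LS.
    _sig, _struc, _fv_ms, _fv_ls, pv_ms, pv_ls = struct.unpack_from("<6I", data, pos)
    # Each DWORD packs two 16-bit parts: high word first, then low word.
    return f"{pv_ms >> 16}.{pv_ms & 0xFFFF}.{pv_ls >> 16}.{pv_ls & 0xFFFF}"


def product_version(path: str) -> Optional[str]:
    """Read the whole file and apply the heuristic above."""
    with open(path, "rb") as f:
        return product_version_from_bytes(f.read())
```

With the ISO mounted as W:, you would call `product_version(r"W:\setup.exe")`. The third component of the result is the build number.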

If you want to know more (e.g. which editions are present in the ISO), open an Administrator command prompt (or PowerShell) and run [dism](https://docs.microsoft.com/en-us/windows-hardware/manufacture/desktop/what-is-dism). I will assume that the ISO is mounted as drive W: and that your download includes both 32-bit and 64-bit Windows, so the 64-bit image lives under W:\x64. Adjust the path to install.esd (some media contain install.wim instead) as needed.

```
dism /Get-WimInfo /WimFile:W:\x64\sources\install.esd
```

```
Deployment Image Servicing and Management tool
Version: 10.0.15063.0

Details for image : W:\x64\sources\install.esd

Index : 1
Name : Windows 10 Pro
Description : Windows 10 Pro
Size : 15,305,539,033 bytes

Index : 2
Name : Windows 10 Home
Description : Windows 10 Home
Size : 15,127,824,725 bytes

Index : 3
Name : Windows 10 Home Single Language
Description : Windows 10 Home Single Language
Size : 15,129,601,869 bytes

Index : 4
Name : Windows 10 Education
Description : Windows 10 Education
Size : 15,125,050,322 bytes

The operation completed successfully.
```

As you can see, this image contains four editions. Which one gets installed is determined by your product key.
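If you want to work with this list in a script, a small parser is enough. The Python sketch below assumes the `Key : Value` layout shown in the dism output, where each `Index` line starts a new entry. The helper names `parse_wim_summary` and `run_dism_wiminfo` are my own. Actually running dism this way needs Windows and an elevated process.

```python
import re
import subprocess
from typing import Dict, List

# A "Key : Value" line from dism, e.g. "Name : Windows 10 Pro".
FIELD = re.compile(r"^\s*([A-Za-z ]+?)\s*:\s*(.*?)\s*$")


def parse_wim_summary(text: str) -> List[Dict[str, str]]:
    """Split `dism /Get-WimInfo` output into one dict per image index."""
    images: List[Dict[str, str]] = []
    for line in text.splitlines():
        match = FIELD.match(line)
        if not match:
            continue  # banner lines, blank lines, "The operation completed ..."
        key, value = match.group(1), match.group(2)
        if key == "Index":
            images.append({})  # every "Index" line starts a new image entry
        if images:  # header fields before the first Index are ignored
            images[-1][key] = value
    return images


def run_dism_wiminfo(wim_path: str) -> str:
    """Run dism and return its text output (Windows only, elevated prompt)."""
    result = subprocess.run(
        ["dism", "/Get-WimInfo", f"/WimFile:{wim_path}"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout
```

For example, `parse_wim_summary(run_dism_wiminfo(r"W:\x64\sources\install.esd"))` would give one dictionary per edition. You could then print `image["Index"]` and `image["Name"]` for each one.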

You can dig even further by passing the /Index parameter:

```
dism /Get-WimInfo /WimFile:W:\x64\sources\install.esd /Index:1
```

```
Deployment Image Servicing and Management tool
Version: 10.0.15063.0

Details for image : W:\x64\sources\install.esd

Index : 1
Name : Windows 10 Pro
Description : Windows 10 Pro
Size : 15,305,539,033 bytes
WIM Bootable : No
Architecture : x64
Hal :
Version : 10.0.15063
ServicePack Build : 0
ServicePack Level : 0
Edition : Professional
Installation : Client
ProductType : WinNT
ProductSuite : Terminal Server
System Root : WINDOWS
Directories : 19668
Files : 101896
Created : 2017-03-18 - 19:40:43
Modified : 2017-08-26 - 21:33:30
Languages :
        en-US (Default)

The operation completed successfully.
```
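The detailed output uses the same `Key : Value` layout, except that the languages are listed on their own lines after `Languages :`. The Python sketch below collects both. It assumes the format shown in the output above, and the name `parse_wim_details` is hypothetical.

```python
import re
from typing import Dict, List, Tuple

# A "Key : Value" line; the value may be empty (e.g. "Languages :" or "Hal :").
FIELD = re.compile(r"^([A-Za-z ]+?)\s*:\s*(.*?)\s*$")


def parse_wim_details(text: str) -> Tuple[Dict[str, str], List[str]]:
    """Return (fields, languages) from `dism /Get-WimInfo ... /Index:N` output."""
    fields: Dict[str, str] = {}
    languages: List[str] = []
    in_languages = False
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("The operation completed"):
            continue
        match = FIELD.match(line)
        if match:
            key, value = match.group(1), match.group(2)
            # Later keys overwrite earlier ones, so the image's own "Version"
            # replaces the dism tool version printed in the banner.
            fields[key] = value
            in_languages = key == "Languages"
        elif in_languages:
            languages.append(line)  # e.g. "en-US (Default)"
    return fields, languages
```

With this output, `fields["Version"].split(".")[2]` yields the build number. `fields["Architecture"]` tells you whether you are looking at the x64 or x86 image.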