There is an article in the Washington Post saying that Windows 8 is 84% less frustrating than Windows 7. It probably comes from the same world where 98% of all statistics are manufactured or “adjusted”.

I will admit outright that I have a small sample size of 2+. One is me (yes, I am running Windows 8 again) and my wife is the second (running Windows 8 on her netbook). The plus signifies anecdotal frustration evidence from friends. For the purposes of this article I will disregard all the troubles I already mentioned and stick to a single one: Fitts’s law.

When working on the desktop, people are used to having significant things in the corners. We have the close button in the upper-right and the application icon in the upper-left (double-clicking it also closes the window); we had the Start button in the lower-left and show desktop in the lower-right. With Windows 8 the left side of the deal got broken.
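For anyone who wants numbers behind the corner argument, here is a rough sketch of Fitts’s law in its common Shannon form, MT = a + b * log2(D / W + 1). The constants `a` and `b` and the "effective width" I give the corner target are values I made up for illustration, not measurements. The only point is that a target the pointer cannot overshoot behaves like a much wider one.

```python
import math

def fitts_time(distance, width, a=0.1, b=0.15):
    """Estimated movement time in seconds (Shannon form of Fitts's law).

    a and b are hypothetical constants; real ones are fitted per device and user.
    """
    return a + b * math.log2(distance / width + 1)

# The same 16-pixel icon, 800 pixels away from the pointer.
# Floating mid-screen, you have to hit those 16 pixels precisely.
floating = fitts_time(distance=800, width=16)

# In a corner the screen edges stop the pointer, so the usable target is
# much bigger. The 200 here is an assumed effective width, not a measured one.
corner = fitts_time(distance=800, width=200)

print(f"floating: {floating:.2f} s, corner: {corner:.2f} s")
```

Put something else on top of that corner, like a hot spot that triggers a task switcher, and the cheap target is gone.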

When I try to close an application by double-clicking its icon, I never manage to do it. The stupid task switcher pops out and drops me into another application. Starting the first application on the taskbar has the same issue: it is just too easy with a mouse (and especially with a trackpad) to end up on the Start screen instead.

Even if you try to stick to the new Windows UI, you will get into trouble. Regardless of how significant the new UI was to Microsoft, they haven’t bothered to move all of their built-in applications to it. You cannot work for more than 5 minutes on anything without being thrown back to the desktop. And all those context switches are exhausting.

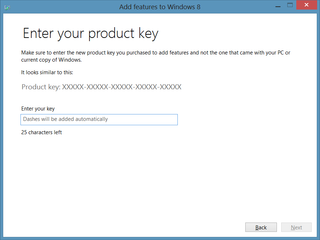

The best thing that Vista brought was search from within the Start menu. We have the same thing in Windows 8, but segregated into Apps, Settings and Files. Yes, now you need to know where the thing you are searching for lives. And the Start screen soon becomes an unwieldy mess because every single application puts its icons there. Yes, applications did the same to the Start menu in Windows 7, but I didn’t need to look at it the whole time.

I will not even get into the picture viewer that makes it impossible to view the next image, or the mail application that shows both the first mail and the selected one when you click. Most applications in the new UI were probably designed by a bunch of unsupervised interns, since their basic functionality usually does not work. And good luck finding an alternative in the Store, which is as deserted as a church on Friday night.

I agree that on tablets this all works, and Windows 8 will probably be 84.07692307692308% better on them. I will also agree that the insides of Windows 8 make it the most powerful system out there. However, I can’t recall a single person saying that nothing in Windows 8 frustrates them. And, mind you, these are users who have had Windows 8 for a couple of months now.

It just tells you that as soon as you assign percentages to satisfaction, you are just being a jackass.