My wired network finally got too big for a single router so I decided to get myself a switch.

I realistically needed the dumbest switch there is - just 4 gigabit ports and I would be happy. Thus my eyes were immediately drawn to the TP-Link SG105 at $20 on Amazon. However, I noticed that for only $10 more one could get the SG105E: exactly the same switch but with some basic management features.

Both switches look exactly the same in their steel shells. They are well built and my impression is that they can take a beating. You can get inside the chassis by simply undoing two screws, and there you will see a really simple board. Based on the components, I don’t think you can get much over 1 Gbps on its bus, so forget about actually reaching maximum speed when all ports are in use - an acceptable compromise for home use, I guess. I would say the 9V power supply is the only thing that actually looks cheap. Fortunately, the switch also works without any noticeable issues on a much more common 12V supply (although you probably forfeit the warranty if you do that).

So, what do you get for the extra money? Well, you get DSCP, a QoS priority system that hardly anybody seems to use and that is definitely not intended for a home network. There is also rate limiting with storm control. It is probably not often needed at home but can be quite useful for troubleshooting a naughty device.
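For the curious, DSCP is nothing more than a 6-bit number carried in the upper part of the old IPv4 TOS byte, and a sender can ask for it per socket. Below is a minimal sketch of that, not anything taken from the switch itself: the `DSCP` table and both function names are made up for illustration, and whether a marking is honoured depends on how QoS is configured along the path.

```python
import socket

# A few well-known DSCP code points; the table itself is only illustrative.
DSCP = {"default": 0, "af41": 34, "ef": 46}


def dscp_to_tos(dscp: int) -> int:
    """Return the IPv4 TOS byte value that carries the given DSCP."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP is a 6-bit field (0-63)")
    # DSCP sits in the upper six bits; the lower two bits belong to ECN.
    return dscp << 2


def open_marked_udp_socket(dscp: int) -> socket.socket:
    """Create a UDP socket that requests the given DSCP on outgoing packets."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, dscp_to_tos(dscp))
    return sock
```

Some operating systems ignore or restrict `IP_TOS`, so treat this as a way to understand the field rather than a guarantee that traffic gets prioritised.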

Furthermore, you get support for up to 32 VLANs - quite nice if your home network needs a bit of separation. Lastly, you will also find more “enterprisey” features like port mirroring and link aggregation. I never figured out why you would need something like this on a 5-port switch but I guess it doesn’t hurt to have them.

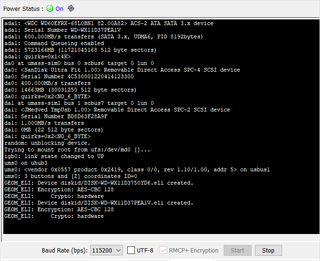

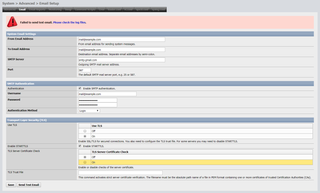

The most useful feature, and the reason I decided to pay the extra tenner, was the GUI. From the GUI you can easily see whether your cable is connected, whether packets are flowing, and whether there are any transmission errors. Home switches and routers usually have an ugly interface so I was ready for that. What I wasn’t ready for was the abysmal security.

Let’s start with a good thing - you can change the user name. Security-wise, that is probably the best thing you can do in your network to escape 95% of automated attacks. Yes, this won’t help much if someone is “out to get you” but most script kiddies will be thwarted. And that’s as far as security goes for this device.

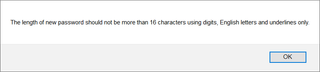

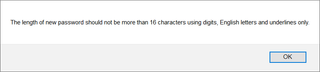

To start with, your password is restricted to the English alphabet, digits, and the underscore (_) sign. The restriction on length and character set is significant, and not just because it lowers the number of combinations your password can take. I am sure you are using a password manager, and even these weak rules should give you years of good sleep if the password is hashed.
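To put a rough number on "lowers the number of combinations", here is a small sketch that counts the passwords possible with the character set described above. The lengths in the loop are hypothetical examples I picked, not the switch's actual limit.

```python
import math
import string

# Allowed characters as described above: English letters, digits, underscore.
ALLOWED = string.ascii_letters + string.digits + "_"


def keyspace(length: int, alphabet_size: int = len(ALLOWED)) -> int:
    """Number of distinct passwords of exactly `length` characters."""
    return alphabet_size ** length


def entropy_bits(length: int, alphabet_size: int = len(ALLOWED)) -> float:
    """Entropy in bits of a uniformly random password of that length."""
    return length * math.log2(alphabet_size)


if __name__ == "__main__":
    for length in (8, 12, 16):  # hypothetical lengths, not the device limit
        print(length, keyspace(length), round(entropy_bits(length), 1))
```

With 63 allowed characters each position contributes just under 6 bits, so a randomly generated password of reasonable length is still far from guessable by raw counting alone.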

But if they used hashed passwords in the first place, they wouldn’t need character set restrictions. Such restrictions are almost always a signal that your password is saved in clear text. Combine that with a login screen allowing an infinite number of guesses at unthrottled speed, and you have the whole security tumbling down.
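To illustrate why hashing makes character restrictions pointless, here is a minimal sketch of salted password hashing using Python's standard library. It is not what the switch firmware does (I have no insight into that); the function names and the iteration count are my own assumptions.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple

ITERATIONS = 100_000  # assumed work factor; tune for the hardware at hand


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Return (salt, digest); any characters and any length are accepted."""
    if salt is None:
        salt = os.urandom(16)  # fresh random salt per password
    digest = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, ITERATIONS
    )
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Re-hash the candidate and compare in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected)
```

Whatever goes in, the stored digest is always 32 bytes, so spaces, symbols, and long passphrases cost the device nothing extra to store.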

But don’t worry that anybody will brute force this device. Nope - there is no need, as anyone can simply snoop all communication since there is no support for HTTPS. Everything you do on its web interface is there for everybody to see. They didn’t even bother with simple digest authentication. Nope, everything is sent in clear text.
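For comparison, this is roughly what the client side of classic HTTP digest authentication computes. It is a simplified sketch of the original scheme without the `qop` extension, and every value used in the usage example is a placeholder of mine rather than something the switch actually sends.

```python
import hashlib


def md5_hex(text: str) -> str:
    """Hex MD5 of a string, as used by classic HTTP digest authentication."""
    return hashlib.md5(text.encode("utf-8")).hexdigest()


def digest_response(username: str, realm: str, password: str,
                    method: str, uri: str, nonce: str) -> str:
    """Compute the digest response for a server-supplied nonce (no qop)."""
    ha1 = md5_hex(f"{username}:{realm}:{password}")  # secret part
    ha2 = md5_hex(f"{method}:{uri}")                 # request part
    # Only this final hash travels over the wire, never the password itself.
    return md5_hex(f"{ha1}:{nonce}:{ha2}")


if __name__ == "__main__":
    # Placeholder values purely for illustration.
    print(digest_response("admin", "switch", "my_secret_1",
                          "GET", "/", "server-nonce-123"))
```

It is old and MD5-based, and it does nothing to hide the rest of the traffic, but at least the password itself would not be readable by anyone sniffing the wire.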

For $20 it is hard not to recommend the base model of this switch. It is sturdy, cheap, and reasonably performant. Unfortunately, for only $10 more you can get a device performing the same base function but with a woefully insecure user interface.

I would stick with the unmanaged model.