If you are into electronics, the first test device you’ll own will probably be a multimeter. Not only can you get one dirt cheap (my first multimeter cost less than $10 in today’s money), but you can also do a lot of digital electronics work with a multimeter alone.

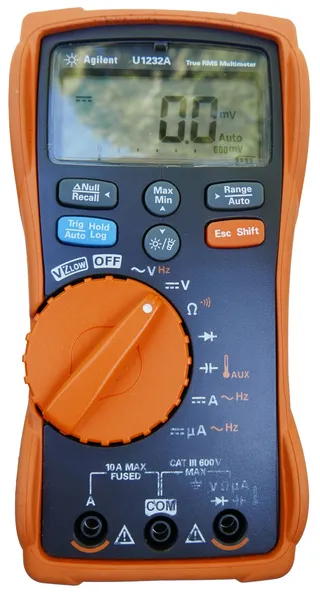

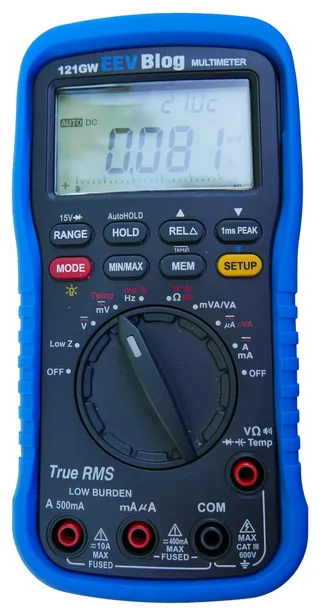

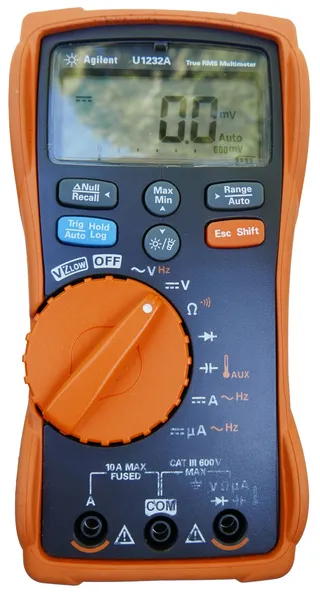

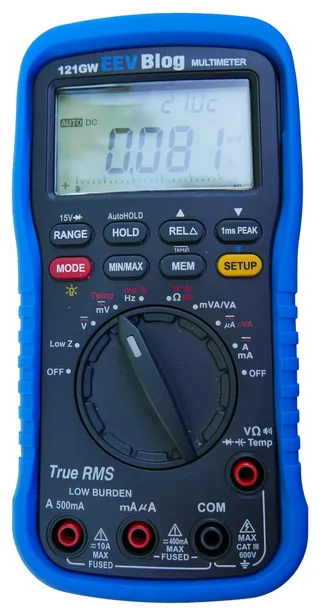

Having made my way into electronics with quite a few good and bad multimeters, I want to share my thoughts on what matters in a decent general-purpose multimeter. I will stick to the Agilent/Keysight U1232A and the EEVBlog 121GW for most of my comparisons, as these are the two multimeters I use these days. However, the principles are general and apply to any multimeter.

The very first thing I need from a multimeter is safety. When I was just starting with electronics I didn’t care about that, because I worked with low DC voltages only. However, sooner or later I would end up measuring something on a 240 V line, and not all multimeters survive that, some failing spectacularly. With kids of a curious age, I want all my meters to be CAT rated and UL listed. And most good meters will not die even if you do something utterly stupid, like connecting to line voltage while in a current range. Here, a higher price does buy better survivability.

The next thing I find very important is speed. A high digit count is nice, but it usually comes at the cost of speed. I find the 6000 counts on my Agilent much more comfortable for general measurement than the 50000 counts the EEVBlog has to offer. Yes, a high count is important in certain scenarios, and it’s not bad to have one such meter available. But if I had to choose one multimeter, I would go for the faster one. That said, don’t go under 6000 counts.
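To put numbers on what the extra counts buy: a meter’s display resolution on a given range is roughly that range’s full scale divided by its count. The sketch below assumes a 6 V range for the 6000-count meter and a 5 V range for the 50000-count one; these ranges are illustrative assumptions, so check your own meter’s datasheet.

```python
import math


def resolution(full_scale: float, counts: int) -> float:
    """Smallest step the display can show on a range (approximately full scale / counts)."""
    return full_scale / counts


def displayed(value: float, full_scale: float, counts: int) -> str:
    """Format a value the way a meter on this range would show it (rounded to its resolution)."""
    step = resolution(full_scale, counts)
    decimals = max(0, round(-math.log10(step)))
    return f"{value:.{decimals}f}"


# Assumed ranges: 6 V on a 6000-count meter, 5 V on a 50000-count meter.
print(resolution(6.0, 6000))           # step of 1 mV
print(resolution(5.0, 50000))          # step of 0.1 mV
print(displayed(3.30127, 6.0, 6000))   # 3.301
print(displayed(3.30127, 5.0, 50000))  # 3.3013
```

Whether that extra decimal matters depends on the job; when checking a 3.3 V rail, it rarely does.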

Speaking of speed, special attention should go to the continuity check. Regardless of the display update speed, the continuity tester must be fast and latched: even the briefest contact between the probes has to result in a (low-volume, please) beep or flash. While most people prefer the beep, I find a backlight flash really great, though that might be because most of my work happens at night, when a flash is easily visible and loud noises are not really desired. Speaking of the backlight, I love being able to turn it on with a single button. If you ask me, I would set all my multimeters to have the backlight on by default, battery life be damned, but I am OK as long as I can turn the light on easily.

I prefer each measurement to have its own position on the range switch, as that makes switching between them a breeze. Multimeters with a lot of functions tend to have fewer switch positions, with a Mode button to cycle between the functions on each. The 121GW is especially nasty in this area: not only does it require the Mode button, it also remembers the last mode selected. While this sounds like a good idea, it means I have to check the display every time before doing any work, and that requires a long press to turn on the backlight. This alone is probably the main reason my Agilent sees much more use despite the 121GW having more functionality and better specs.

Speaking of more functionality, I found the 121GW’s mV range a real gem. If your multimeter gives you decent resolution there, it can pretty much replace current measurement altogether. Just measure the voltage drop across a resistor (or fuse) whose resistance you know, and you have everything needed to calculate the current. That is much easier than switching to a current range and inserting the multimeter into the circuit. Yes, this won’t work if you need a precise and/or low-current measurement, but it works well for a ballpark figure, and that’s often all that’s needed anyhow.
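The calculation behind this trick is just Ohm’s law, I = V / R. A minimal sketch, assuming you know the resistor’s value and tolerance; the 0.1 Ω shunt, 1% tolerance, and 12.3 mV reading are made-up example numbers. A fuse’s resistance is less predictable than a resistor’s, which is one more reason this only gives a ballpark figure.

```python
def current_from_drop(v_drop_mv: float, r_ohms: float) -> float:
    """Ohm's law: current in mA from a voltage drop in mV across a known resistance in ohms."""
    if r_ohms <= 0:
        raise ValueError("resistance must be positive")
    return v_drop_mv / r_ohms  # mV / ohm = mA


def current_range(v_drop_mv: float, r_ohms: float, tolerance: float) -> tuple[float, float]:
    """Bounds on the current (mA) given the resistor's tolerance, e.g. 0.01 for 1%."""
    low = current_from_drop(v_drop_mv, r_ohms * (1 + tolerance))
    high = current_from_drop(v_drop_mv, r_ohms * (1 - tolerance))
    return low, high


# Example numbers (assumed): 12.3 mV measured across a 0.1 ohm, 1% shunt resistor.
print(current_from_drop(12.3, 0.1))    # about 123 mA
print(current_range(12.3, 0.1, 0.01))  # about (121.8, 124.2) mA
```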

The order of measurements on the range switch is equally important. I prefer the order to be Low-Z voltage, Off, DC voltage, AC voltage, other voltage-related measurements, current, and finally a second Off position. While this prevents me from turning the multimeter off by simply moving the switch to the far-left position, it lets me set everything up on the board and go straight into voltage measurement without passing through Low-Z mode. While I love Low-Z mode, I do not like having to pass over it from the Off position (the Agilent handles this beautifully). Speaking of Low-Z, idiotic restrictions like the 121GW’s 12 V minimum for the mode to work make it more of a nuisance than a useful range.

If you are into temperature measurement, you will want support for standard K-type probes. The Agilent fails miserably here, as it requires a special adapter. My personal opinion is that if a special adapter is required for a measurement, that functionality might as well not exist.

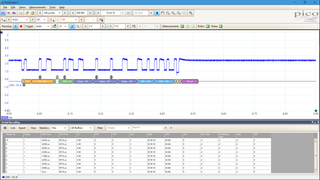

A feature I find awesome, but one that is rarely implemented these days, is a simple frequency counter. Yes, both the U1232A and the 121GW can measure frequency, but the range is laughably small (up to 100 kHz and 1 MHz, respectively). When you deal with PWM circuits or just want to check clock lines, decent frequency measurement (like the UT71C’s, which goes up to 40+ MHz) means you don’t need to pull out the scope most of the time.

A low burden voltage is excellent in theory, as it can mean the difference between being able to measure a circuit’s current or not: in current mode the meter sits in series with the circuit, and the drop across its internal shunt is taken away from the voltage the circuit sees. In practice it depends on your use case. For me, working on 3.3 V and 5 V circuits, there is more than enough margin to handle any drop the multimeter introduces.
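To check that margin for a specific circuit, subtract the burden drop from the supply. Burden voltage is often quoted per unit of current (for example in mV/mA) for each current range. A minimal sketch assuming a 1 mV/mA burden and parts that tolerate a supply 5% low; both figures are assumptions, so substitute your meter’s datasheet value and your circuit’s real limits.

```python
def voltage_at_load(v_supply: float, current_ma: float, burden_mv_per_ma: float) -> float:
    """Voltage left for the circuit (V) when the meter is inserted in series."""
    burden_v = current_ma * burden_mv_per_ma / 1000.0  # mV -> V
    return v_supply - burden_v


def within_tolerance(v_supply: float, v_load: float, tolerance: float) -> bool:
    """True if the load still sees at least (1 - tolerance) of the nominal supply."""
    return v_load >= v_supply * (1 - tolerance)


# Assumed: 1 mV/mA burden, circuit parts happy with a supply up to 5% low.
for v_supply, current_ma in [(3.3, 50), (5.0, 100)]:
    v_load = voltage_at_load(v_supply, current_ma, 1.0)
    print(v_supply, "V at", current_ma, "mA ->", round(v_load, 3), "V, OK:",
          within_tolerance(v_supply, v_load, 0.05))
```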

Diode test should be a separate range, so that LED (or any other diode) polarity can be checked without much effort. If you are dealing with LEDs a lot, investing in a multimeter that supports a higher test voltage (like the 15 V on the 121GW) does come in handy.
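The reason a higher test voltage helps: the meter can only light an LED, or a string of LEDs in series, if its diode-test voltage exceeds their total forward voltage. The forward voltages below are rough typical values, and the 3 V figure for an ordinary diode test is an assumption for illustration; only the 15 V figure comes from the 121GW mentioned above.

```python
# Rough, assumed forward voltages in volts; real parts vary with color and current.
TYPICAL_VF = {"red": 2.0, "white": 3.0}


def can_forward_bias(test_voltage: float, vf_per_led: float, leds_in_series: int = 1) -> bool:
    """True if the diode-test voltage exceeds the total forward voltage of the string."""
    return test_voltage > vf_per_led * leds_in_series


# Assumed 3 V for an ordinary diode test versus the 121GW's 15 V mode.
for test_v in (3.0, 15.0):
    for color, n in [("red", 1), ("white", 1), ("white", 4)]:
        ok = can_forward_bias(test_v, TYPICAL_VF[color], n)
        print(f"{test_v:>4} V test, {n} x {color}: {'lights' if ok else 'stays dark'}")
```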

Other features worth mentioning include True RMS and auto-hold. I found that missing either is not a deal breaker, but both come in handy once in a while.
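Why True RMS matters once in a while: a meter without it typically measures the rectified average of an AC signal and scales it by the sine-wave form factor, π / (2√2) ≈ 1.111, which is only correct for pure sine waves. The sketch below compares that approach with a true RMS calculation on sampled waveforms; it models the two measurement principles, not any particular meter’s circuitry.

```python
import math

# Ratio of RMS to rectified mean for a pure sine wave (about 1.1107).
SINE_FORM_FACTOR = math.pi / (2 * math.sqrt(2))


def true_rms(samples: list[float]) -> float:
    """Square root of the mean of the squares: correct for any waveform."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def average_responding(samples: list[float]) -> float:
    """Rectified mean scaled for a sine wave, as a non-True-RMS meter would report it."""
    rectified_mean = sum(abs(x) for x in samples) / len(samples)
    return rectified_mean * SINE_FORM_FACTOR


N = 10_000  # samples covering exactly one period
sine = [math.sin(2 * math.pi * k / N) for k in range(N)]
square = [1.0 if k < N // 2 else -1.0 for k in range(N)]

for name, wave in [("sine", sine), ("square", square)]:
    print(f"{name}: true RMS {true_rms(wave):.4f}, average-responding {average_responding(wave):.4f}")
```

Both methods agree on the sine wave, while on the square wave the average-responding reading comes out about 11% high.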

I require support for either AA or AAA batteries in any multimeter I use. Not only does a 9 V battery generally have inferior capacity, it’s also something I never have on hand when I need it. There is simply no justification in this day and age for not using standard cells. And the battery compartment should have a captive screw, unless you want to spend 15 minutes searching for it every time you change the batteries.

Every decent multimeter will have a way of connecting to a computer for logging. While Bluetooth seems like a good idea, I found it lacking in practice, as something would often go wrong with the connection overnight. A nice physical cable is my preferred solution here.

As for probes, I find silicone leads a must, as stiff probe cables are a hindrance. Tips have to be sharp, and ideally their CAT III/IV insulation should be removable. A fully insulated probe is fine when dealing with high voltages or tight spaces, but more exposed metal often makes measuring easier.

Unfortunately, finding a multimeter with all these features is hard, if not impossible. Every multimeter, regardless of price range, will be missing something. However, with two multimeters you can come darn close.

PS: Notice I haven’t spoken about accuracy at all, as needs depend heavily on the exact use case. For me, 1% on DC is OK, and that is easily found in any decent multimeter.
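For reference, DMM datasheets usually state DC accuracy as ±(% of reading + counts), where one count is the display’s resolution on that range. A minimal sketch of turning such a spec into an error band; the ±(1% + 2 counts) spec and the 1 mV resolution are assumed example values, not figures for any meter mentioned here.

```python
def reading_bounds(reading: float, pct_of_reading: float, counts: int,
                   resolution: float) -> tuple[float, float]:
    """Range the true value can lie in for a ±(pct% of reading + counts) accuracy spec."""
    error = abs(reading) * pct_of_reading / 100 + counts * resolution
    return reading - error, reading + error


# Assumed spec: ±(1% + 2 counts) on a range with 1 mV resolution.
low, high = reading_bounds(3.300, 1.0, 2, 0.001)
print(f"3.300 V reading: true value between {low:.3f} V and {high:.3f} V")
```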

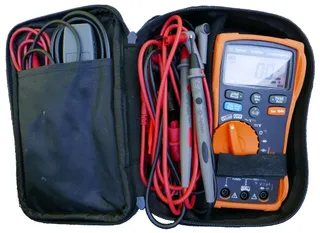

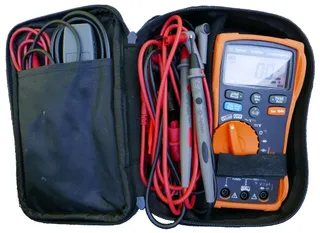

PPS: One thing you can’t really judge until you see it is how the multimeter fits in its carry case. The Agilent is perfect here, as the range switch, all buttons, and the probe jacks are reachable without ever taking it out.