SIGSEGV in QVector size()

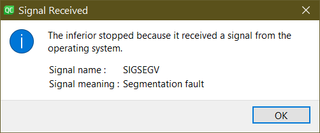

After a long time, I started playing with C++ again - this time in the form of Qt. Since I learned C++ a while ago, my code was a bit rustic and probably not following all the newest recommendations, but hey, it worked. Until I decided to change it from using `QVector<std::shared_ptr<FolderItem>>` to `QVector<FolderItem*>`. Suddenly I kept getting a SIGSEGV (segmentation fault) in the most innocuous method: `size()`.

As Google is my crutch, I did a quick search for this and found absolutely nothing useful - just other people having the same issue. After finally looking at my code, I figured out why - I was asking the wrong question. Of course there was nothing wrong with the `size()` method of a QVector class used by millions. It was me all along.

I had a `_folder` member variable in the main window that I never initialized, and I used it to call one of its methods, which in turn called `QVector::size()`. Forgetting to initialize this variable when you're using `shared_ptr` costs nothing - a default-constructed `shared_ptr` is simply a null pointer, and I had checks for those. Once I changed to a raw pointer, I lost that protection, and the uninitialized variable did what an uninitialized variable in C++ does - pointed to "something". The null check passed because garbage is rarely null, and calling a method on an object "somewhere" in memory is what brought the SIGSEGV - not the poor `size()` method.
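To make the difference concrete, here is a minimal sketch of the two variants. It is not my actual code: `WindowWithSharedPtr`, `WindowWithRawPtr`, `folderSize()` and `childCount()` are made-up names, and `std::vector` stands in for `QVector` so the example compiles without Qt.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

// Hypothetical stand-in for FolderItem; std::vector replaces QVector here.
struct FolderItem {
    std::vector<int> children;
    std::size_t childCount() const { return children.size(); }
};

// Variant 1: shared_ptr member, never explicitly initialized.
struct WindowWithSharedPtr {
    std::shared_ptr<FolderItem> _folder;  // default-constructed, so always null

    std::size_t folderSize() const {
        if (!_folder) return 0;           // reliably catches the forgotten init
        return _folder->childCount();
    }
};

// Variant 2: raw pointer member, never explicitly initialized.
struct WindowWithRawPtr {
    FolderItem* _folder;  // indeterminate value when the object is default-initialized

    std::size_t folderSize() const {
        if (!_folder) return 0;           // garbage is rarely null, so this may not fire
        return _folder->childCount();     // undefined behavior if _folder is garbage
    }
};

// Exercises only the safe variant; calling folderSize() on a
// default-initialized WindowWithRawPtr would be undefined behavior.
inline void demoSharedPtr() {
    WindowWithSharedPtr w;
    assert(w.folderSize() == 0);
}
```

The crash in the second variant shows up inside `size()` only because that is the first place the bogus pointer actually gets dereferenced.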

It was all solved by something I used to do reflexively: initialize any variable declared in C++ to some value - even if that value is nullptr.
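One way to make that habit hard to forget is a default member initializer right at the declaration. Again a sketch under assumptions rather than my real class: this `MainWindow` is a plain class with no Qt base, `setFolder()` and `folderSize()` are illustrative names, and `std::vector` stands in for `QVector`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for FolderItem; std::vector replaces QVector here.
struct FolderItem {
    std::vector<int> children;
};

// Hypothetical main window, reduced to the one member that matters.
class MainWindow {
public:
    void setFolder(FolderItem* folder) { _folder = folder; }

    std::size_t folderSize() const {
        if (_folder == nullptr) return 0;  // now meaningful: unset really is null
        return _folder->children.size();
    }

private:
    FolderItem* _folder = nullptr;  // initialized at declaration, in every constructor
};
```

With the initializer in place, a window that never had its folder set reports a size of 0 instead of wandering off into random memory.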