While I use OSH Park most of the time, I always like to look at different services, especially if they’re US-based. So, of course, I took note of DigiKey’s DKRed. Unfortunately, this review will be really short, as I didn’t end up using it.

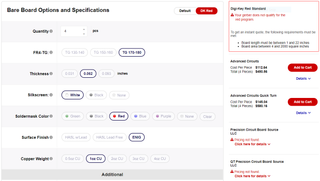

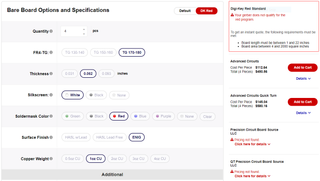

On the surface, it looks good. The board requirements are reasonable: to use the DKRed service, your design needs to fit a 5/5 mil minimum trace/space, which is more than sufficient for everything I do. However, while more detailed specifications are available for the other PCB vendors on their platform (albeit the Royal Circuits link is broken), there’s nothing comparable for DKRed itself. Yes, I know DKRed might use any of those manufacturers behind the scenes, but I would think collating the minimum requirements across all of them should be done by DigiKey and not left to the customer.

And not all limitations are even listed on that page. For example, the fact that internal cutouts are not supported is mentioned only in the FAQ, and whether slots are supported is left a complete secret. Compare this to OSH Park’s specification and you’ll see what I mean.

But OK, I went to upload one small board just to test it, and I was greeted by an error saying its length was shorter than 1 inch. As I love making mini boards smaller than 1", I guess I’m out of luck. But the specification page did correctly state that limit, so I cannot be (too) angry. Never mind, I’ll try a slightly bigger board. Nope: it has to be more than 4 square inches in area, something I didn’t find listed anywhere.
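To make the two size limits concrete, here is a small Python sketch that flags boards DKRed would reject. It assumes the 1 inch limit applies to the shorter side of a rectangular board and that the area has to be strictly more than 4 square inches. Both are my reading of the upload errors, not an official DigiKey rule, and `dkred_size_problems` is just a hypothetical helper name.

```python
# Sketch of the two DKRed size limits I ran into (my interpretation, not an official API).
MM_PER_INCH = 25.4
MIN_SIDE_IN = 1.0    # assumption: the shorter side must be at least 1 inch
MIN_AREA_SQIN = 4.0  # assumption: area must be strictly more than 4 square inches


def dkred_size_problems(width_mm: float, height_mm: float) -> list:
    """Return the reasons a rectangular board would be rejected (empty list if OK)."""
    w_in = width_mm / MM_PER_INCH
    h_in = height_mm / MM_PER_INCH
    problems = []
    shortest = min(w_in, h_in)
    if shortest < MIN_SIDE_IN:
        problems.append(f"side {shortest:.2f} in is shorter than {MIN_SIDE_IN} in")
    area = w_in * h_in
    if area <= MIN_AREA_SQIN:
        problems.append(f"area {area:.2f} sq in is not more than {MIN_AREA_SQIN} sq in")
    return problems


for w, h in [(20, 20), (40, 50), (65, 72)]:
    print(f"{w}x{h} mm:", dkred_size_problems(w, h))
```

Under these assumptions, a 20x20 mm mini board fails both checks, a 40x50 mm board (about 3.1 sq in) fails only the area check, and a 65x72 mm board passes.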

Well, I did need one board that’s 65x72 mm in size, and that one would work. And yes, DKRed accepts that board. But the cost is $43.52, while OSH Park charges $36.25 for the same board. To be fair, DKRed gives you 4 boards ($10.88 per board) while OSH Park only provides 3 ($12.08 per board), so DKRed is slightly cheaper if you really need all 4 boards. If you’re a hobbyist who needs only 1 board, like me, you’re gonna pay more.
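The per-board arithmetic above is easy to redo for other quotes. Here is a minimal Python sketch using the two prices I got for the 65x72 mm board; treat them as example inputs only, since prices will change over time.

```python
# Per-board cost comparison for the 65x72 mm board (quotes are example inputs).
quotes = {
    "DKRed": (43.52, 4),     # (order total in USD, boards per order)
    "OSH Park": (36.25, 3),
}


def per_board(total: float, boards: int) -> float:
    """Price per board, rounded to cents."""
    return round(total / boards, 2)


for vendor, (total, boards) in quotes.items():
    print(f"{vendor}: ${total:.2f} for {boards} boards = ${per_board(total, boards):.2f} each")

# If you only need one board, the order total matters, not the per-board price.
cheapest_for_one = min(quotes, key=lambda v: quotes[v][0])
print("Cheapest when you need just one board:", cheapest_for_one)
```

The per-board price only matters if you actually use every board in the order; for a single board, the order total decides.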

And this is where I stopped my attempts. The deal-breaker for me was the minimum size, as it makes DKRed a no-go for most of my boards. And the cost for just prototyping is simply too high. Mind you, it might be a good deal for people who regularly work with bigger boards in small quantities. But it’s not for me.