While I have installed Ubuntu on my Surface Go before, it always came at the cost of removing Windows. Love it or hate it, Windows is sometimes useful, so dual boot would be the ideal solution. With Surface Go having a micro-SD card expansion slot, the idea is clear: let’s dual boot Windows on the internal disk and Ubuntu on the SD card.

While you still have Windows running, prepare two USB drives. One needs to contain the Windows installation image, which you can create with Microsoft’s Media Creation Tool. Onto the other, write the Ubuntu 22.04 image using the Rufus utility. Make sure to use the GPT partition scheme targeting UEFI systems.

First we need to partition the disk and install Linux, for which we have to boot from the Ubuntu USB drive. To do this, go to Recovery Options and select Restart now. From the boot menu, select Use a device and then Linpus lite. If you are using Ubuntu, there is no need to disable secure boot or meddle with USB boot order, as 22.04 fully supports secure boot (Microsoft actually signs Ubuntu’s boot shim). However, you might want to change the boot order to have a USB device first, as you’ll need this later.

While you could proceed from here with the normal Ubuntu install, I prefer a slightly more involved process that uses a bit of command line. Since we need a root prompt, we should open Terminal and get those root credentials going.

sudo -i

The very next step should be setting up a few variables - host, user name, and disk(s). This way we can use them going forward and avoid accidental mistakes.

HOST=desktop

USER=user

DISK1=/dev/mmcblk0

DISK2=/dev/mmcblk1
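
The later commands append p1 or p2 to these variables (for example ${DISK1}p1) because the kernel inserts a "p" before the partition number whenever the disk name itself ends in a digit, as mmcblk and nvme names do. A minimal sketch of that naming rule, using a hypothetical helper called part_name that is not part of the original procedure:

```shell
# Hypothetical helper: build a partition path from a disk path and a number.
part_name() {
    case "$1" in
        *[0-9]) printf '%sp%s\n' "$1" "$2" ;;  # name ends in a digit: mmcblk0 -> mmcblk0p1
        *)      printf '%s%s\n'  "$1" "$2" ;;  # name ends in a letter: sda -> sda1
    esac
}

part_name /dev/mmcblk0 1
part_name /dev/sda 2
```

If your SD card shows up under a different name (check with lsblk), adjust DISK1 and DISK2 and the partition suffixes accordingly.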

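Disk setup is really minimal. Notice that both the boot and EFI partitions need to be on the internal disk, as the firmware doesn’t know how to boot from a micro-SD card. Be aware that the commands below wipe both disks completely, including the existing Windows installation; Windows gets reinstalled later.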

blkdiscard -f $DISK1

sgdisk --zap-all $DISK1

sgdisk -n1:1M:+127M -t1:EF00 -c1:EFI $DISK1

sgdisk -n2:0:+640M -t2:8300 -c2:Boot $DISK1

sgdisk --print $DISK1

blkdiscard -f $DISK2

sgdisk --zap-all $DISK2

sgdisk -n1:1M:0 -t1:8309 -c1:Ubuntu $DISK2

sgdisk --print $DISK2
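
Since blkdiscard and sgdisk --zap-all cannot be undone, it may be worth guarding them with a confirmation. The sketch below is only a suggestion; confirm_wipe is a hypothetical helper name and not something the original steps use.

```shell
# Hypothetical guard: succeed only if the user retypes the exact device path.
confirm_wipe() {
    printf 'About to erase %s. Type the device path to continue: ' "$1" >&2
    read -r answer || return 1   # no input at all counts as "no"
    [ "$answer" = "$1" ]
}

# Possible usage (left commented out so nothing is erased by accident):
# confirm_wipe "$DISK1" && blkdiscard -f "$DISK1"
```

Requiring the full path, rather than a plain "y", makes it harder to wipe the wrong disk when DISK1 and DISK2 have been swapped.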

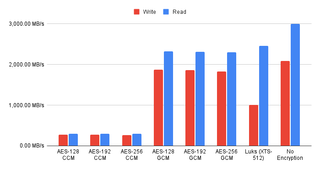

I usually encrypt just the root partition, as keeping the boot partition unencrypted does offer advantages and having standard kernels exposed is not much of a security issue.

cryptsetup luksFormat -q --cipher aes-xts-plain64 --key-size 256 \
    --pbkdf pbkdf2 --hash sha256 ${DISK2}p1

Since crypt device name is displayed on every startup, for Surface Go I like to use host name here.

cryptsetup luksOpen ${DISK2}p1 ${HOST^}
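
The ${HOST^} form is bash's case-modification expansion: it uppercases the first character of the value, so a host named desktop gives a mapper device called Desktop. For illustration only, here is a portable equivalent that also works in shells without that expansion; capitalize is a hypothetical name.

```shell
# Hypothetical portable stand-in for bash's ${VAR^} expansion.
capitalize() {
    first=$(printf '%s' "$1" | cut -c1 | tr '[:lower:]' '[:upper:]')
    rest=$(printf '%s' "$1" | cut -c2-)
    printf '%s%s\n' "$first" "$rest"
}

capitalize desktop
```

Whatever name you end up with has to be used consistently, since the same name appears again in the mkfs, mount, crypttab and fstab steps.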

At last we can prepare all needed partitions.

yes | mkfs.ext4 /dev/mapper/${HOST^}

mkdir /mnt/install

mount /dev/mapper/${HOST^} /mnt/install/

yes | mkfs.ext4 ${DISK1}p2

mkdir /mnt/install/boot

mount ${DISK1}p2 /mnt/install/boot/

mkfs.msdos -F 32 -n EFI -i 4d65646f ${DISK1}p1

mkdir /mnt/install/boot/efi

mount ${DISK1}p1 /mnt/install/boot/efi
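
The -i 4d65646f argument gives the EFI partition a fixed volume ID, which is what lets the grub entry near the end search for 4D65-646F. As a small sketch of how the two spellings relate (fat_uuid is a hypothetical helper, not part of the procedure):

```shell
# Hypothetical helper: turn an 8-digit FAT volume ID into the XXXX-XXXX
# form that blkid and grub's --fs-uuid use.
fat_uuid() {
    id=$(printf '%s' "$1" | tr '[:lower:]' '[:upper:]')
    printf '%s-%s\n' "$(printf '%s' "$id" | cut -c1-4)" "$(printf '%s' "$id" | cut -c5-8)"
}

fat_uuid 4d65646f
```

If you pick a different ID in the mkfs.msdos step, the search line in the grub entry needs the matching value.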

To start the fun we need the debootstrap package. Do make sure you have a wireless network connected at this point, as otherwise the operation will not succeed.

apt update ; apt install --yes debootstrap

And then we can get basic OS on the disk. This will take a while.

debootstrap $(basename `ls -d /cdrom/dists/*/ | head -1`) /mnt/install/

Our newly copied system is lacking a few files and we should make sure they exist before proceeding.

echo $HOST > /mnt/install/etc/hostname

sed "s/ubuntu/$HOST/" /etc/hosts > /mnt/install/etc/hosts

sed '/cdrom/d' /etc/apt/sources.list > /mnt/install/etc/apt/sources.list

cp /etc/netplan/*.yaml /mnt/install/etc/netplan/

If you are installing via WiFi, you might as well copy your wireless credentials:

mkdir -p /mnt/install/etc/NetworkManager/system-connections/

cp /etc/NetworkManager/system-connections/* /mnt/install/etc/NetworkManager/system-connections/

Also, since we plan to dual boot with Windows, we need to tell Linux to keep local time in the hardware clock.

echo UTC=no >> /mnt/install/etc/default/rcS

Finally we’re ready to “chroot” into our new system.

mount --rbind /dev /mnt/install/dev

mount --rbind /proc /mnt/install/proc

mount --rbind /sys /mnt/install/sys

chroot /mnt/install \
    /usr/bin/env HOST=$HOST USER=$USER DISK1=$DISK1 DISK2=$DISK2 \
    bash --login

For the new system we need to set up the locale and the time zone.

locale-gen --purge "en_US.UTF-8"

update-locale LANG=en_US.UTF-8 LANGUAGE=en_US

dpkg-reconfigure --frontend noninteractive locales

dpkg-reconfigure tzdata

Now we’re ready to onboard the latest Linux image.

apt update

apt install --yes --no-install-recommends linux-image-generic linux-headers-generic

Followed by boot environment packages.

apt install --yes initramfs-tools cryptsetup keyutils grub-efi-amd64-signed shim-signed

Since we’re dealing with encrypted data, we should auto mount it via crypttab. If there are multiple encrypted drives or partitions, keyscript really comes in handy to open them all with the same password. As it doesn’t have negative consequences, I just add it even for a single disk setup.

echo "${HOST^} UUID=$(blkid -s UUID -o value ${DISK2}p1) none \
luks,discard,initramfs,keyscript=decrypt_keyctl" >> /etc/crypttab

cat /etc/crypttab
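
A crypttab entry has four whitespace-separated fields: the mapped name, the source device, the key file (none means the passphrase is asked for at boot), and the options. The sketch below just prints such a line so the layout is easier to see; crypttab_line is a hypothetical helper and the all-zero UUID is a placeholder, not a real value.

```shell
# Hypothetical helper: print one crypttab line for a name and a LUKS UUID.
crypttab_line() {
    # $1 = mapper name, $2 = UUID of the LUKS partition
    printf '%s UUID=%s none luks,discard,initramfs,keyscript=decrypt_keyctl\n' "$1" "$2"
}

crypttab_line Desktop 00000000-0000-0000-0000-000000000000
```

The output of cat /etc/crypttab should have the same shape, with your own name and the UUID reported by blkid.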

To mount boot and EFI partition, we need to do some fstab setup too:

echo "UUID=$(blkid -s UUID -o value /dev/mapper/${HOST^}) \
/ ext4 noatime,nofail,x-systemd.device-timeout=5s 0 1" >> /etc/fstab

echo "PARTUUID=$(blkid -s PARTUUID -o value ${DISK1}p2) \
/boot ext4 noatime,nofail,x-systemd.device-timeout=5s 0 1" >> /etc/fstab

echo "PARTUUID=$(blkid -s PARTUUID -o value ${DISK1}p1) \
/boot/efi vfat noatime,nofail,x-systemd.device-timeout=5s 0 1" >> /etc/fstab

cat /etc/fstab
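
Each fstab entry needs exactly six fields (device, mount point, type, options, dump, pass), and a blkid call that returned nothing would leave a line starting with an empty UUID= that is easy to miss. A minimal sanity check could look like the sketch below; check_fstab is a hypothetical name and the check only counts fields, it does not validate their content.

```shell
# Hypothetical check: report fstab lines that do not have six fields.
check_fstab() {
    awk '
        /^[ \t]*(#|$)/ { next }    # skip comments and blank lines
        NF != 6 { printf "line %d has %d fields\n", NR, NF; bad = 1 }
        END { exit bad }
    ' "$1"
}
```

Inside the chroot it could be run as check_fstab /etc/fstab; it prints nothing and returns success when every entry has six fields.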

Now we update our boot environment.

KERNEL=`ls /usr/lib/modules/ | cut -d/ -f1 | sed 's/linux-image-//'`

update-initramfs -u -k $KERNEL
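
The KERNEL line above assumes there is exactly one directory under /usr/lib/modules, which holds on a fresh install. If more than one kernel were present, a version sort could pick the newest. This is a sketch under that assumption, with latest_kernel as a hypothetical helper name:

```shell
# Hypothetical helper: print the highest-versioned entry in a modules directory.
latest_kernel() {
    ls "$1" | sort -V | tail -n 1
}
```

It would be used as KERNEL=$(latest_kernel /usr/lib/modules) in place of the original assignment.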

Grub update is what makes EFI tick.

sed -i "s/^GRUB_CMDLINE_LINUX_DEFAULT.*/GRUB_CMDLINE_LINUX_DEFAULT=\"quiet splash \
mem_sleep_default=deep\"/" /etc/default/grub

update-grub

grub-install --target=x86_64-efi --efi-directory=/boot/efi --bootloader-id=Ubuntu \
    --recheck --no-floppy

Finally we install our GUI environment. I personally like ubuntu-desktop-minimal but you can opt for ubuntu-desktop. In any case, it’ll take a considerable amount of time.

apt install --yes ubuntu-desktop-minimal

Short package upgrade will not hurt.

add-apt-repository universe

apt update ; apt dist-upgrade --yes

The only remaining task before restart is to create the user, assign a few extra groups to it, and make sure its home has correct owner.

adduser --disabled-password --gecos '' $USER

usermod -a -G adm,cdrom,dip,lpadmin,plugdev,sudo $USER

echo "$USER ALL=NOPASSWD:ALL" > /etc/sudoers.d/$USER

passwd $USER

As install is ready, we can exit our chroot environment.

exit

And unmount our disk:

umount /mnt/install/boot/efi

umount /mnt/install/boot

mount | tac | awk '/\/mnt/ {print $3}' | xargs -i{} umount -lf {}
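
That last pipeline reverses the mount list so nested mounts are unmounted before their parents, then picks the mount point (third column) of everything under /mnt. The sketch below shows only the selection part on sample text, without unmounting anything. The helper name mounts_under and the sample lines are made up for illustration, and unlike the original it matches /mnt as a prefix of the mount point rather than anywhere in the line.

```shell
# Hypothetical helper: read `mount`-style lines on stdin and print the
# mount points that start with the given prefix, deepest (latest) first.
mounts_under() {
    tac | awk -v prefix="$1" 'index($3, prefix) == 1 { print $3 }'
}

# Illustrative input only; real data would come from `mount`.
printf '%s\n' \
    '/dev/mapper/Desktop on /mnt/install type ext4 (rw)' \
    'udev on /mnt/install/dev type devtmpfs (rw)' \
    'proc on /proc type proc (rw)' | mounts_under /mnt
```

Running mount | mounts_under /mnt first is a harmless way to preview what the real command is about to unmount.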

After the reboot you should be able to enjoy your Ubuntu installation.

reboot

If all went fine, congratulations, you have your Ubuntu up and running. But this is not the end as we still need to get Windows going.

Assuming you adjusted the boot order in BIOS to boot off a USB device first, just plug in the USB drive with the Windows 11 installation image and reboot the system to get into the Windows setup. You can also boot it from grub but I find just changing the boot order simpler.

Either way, you can proceed as normal with the Windows installation, taking care to select the unassigned disk space on the internal drive as the install destination. Windows will then use the existing EFI partition to set up its boot loader and the remaining space for data.

Once you uncheck and delete all the nonsense that Windows installs by default, we need to boot back into Linux. In order to do this, go to Recovery Options and click on Restart now. This should result in boot menu where you should go into Use a device and you should see ubuntu there. If everything went right, this will boot you into Ubuntu.

Technically, if you want Windows to be your primary OS, you can stop here. However, I want Linux to be the default and thus a bit of chicanery is needed. We need to move Microsoft’s boot manager to another location. If you don’t do this, Surface’s BIOS will helpfully use it instead of grub. Moving it out of the way sorts this issue.

sudo mv /boot/efi/EFI/Microsoft /boot/efi/EFI/Microsoft2

And now finally we just add Windows boot entry to our grub menu.

cat << EOF | sudo tee /etc/grub.d/25_windows
#!/bin/sh
exec tail -n +3 \$0
menuentry 'Windows' --class os {
    recordfail
    savedefault
    search --no-floppy --fs-uuid --set=root 4D65-646F
    chainloader (\${root})/EFI/Microsoft2/Boot/bootmgfw.efi
}
EOF

sudo chmod +x /etc/grub.d/25_windows

echo 'GRUB_RECORDFAIL_TIMEOUT=$GRUB_TIMEOUT' | sudo tee -a /etc/default/grub

sudo sed -i 's/GRUB_TIMEOUT=0/GRUB_TIMEOUT=1/' /etc/default/grub

sudo update-grub

This will boot Ubuntu by default but allow you to get into Windows as needed. If you would rather have it remember what you booted last, that’s easy enough too with some grub modifications.
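
One way to get that behaviour, as far as I know, is grub's GRUB_DEFAULT=saved together with GRUB_SAVEDEFAULT=true, which works alongside the savedefault line already present in the Windows entry. The sketch below rewrites those two settings in whatever file it is given; remember_last_boot is a hypothetical helper, and trying it on a copy of /etc/default/grub first is the safer route.

```shell
# Hypothetical helper: make a grub defaults file boot the last chosen entry.
remember_last_boot() {
    file=$1
    tmp=$(mktemp)
    # Drop any existing values of the two settings, keep everything else.
    grep -v -e '^GRUB_DEFAULT=' -e '^GRUB_SAVEDEFAULT=' "$file" > "$tmp" || true
    # Append the values that tell grub to reuse the saved entry.
    printf '%s\n' 'GRUB_DEFAULT=saved' 'GRUB_SAVEDEFAULT=true' >> "$tmp"
    cat "$tmp" > "$file"
    rm -f "$tmp"
}
```

Applied to the real file as root (remember_last_boot /etc/default/grub), it would need to be followed by sudo update-grub to take effect.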