Bluetooth on Windows Server 2008

One of the things that distinguishes Windows Server 2008 from Windows Vista is the lack of Bluetooth. For some reason, someone at Microsoft decided that Bluetooth is not a feature for a server operating system. While I partly agree that a real server has no need for Bluetooth, remember that before anything gets installed on production servers, it needs to be tested. That testing is usually done on whatever machine is available, often just some old workstation or notebook. Those computers often do have Bluetooth and, since testing can last for a few months, sooner or later some Bluetooth device comes up.

There is the option of installing a Bluetooth stack from your Bluetooth manufacturer. However, not only are those stacks a bit of overkill for just connecting your mouse, most devices these days do not come with a CD containing the stack at all. Manufacturers have become so accustomed to people having the default stack installed that they do not even bother creating a custom one. Even those that have a stack available (Broadcom, Toshiba…) bury it deep inside their websites.

Cheating

Another way to get a Bluetooth stack is to use the Vista drivers. Almost all other Vista drivers are fully compatible (in the sense that you can install them on Server 2008 and have them working), but the Bluetooth stack cannot be installed directly. The solution is to adapt the procedure a little.

On Windows Vista (yes, we need Vista), go to the “C:\Windows\System32\DriverStore\FileRepository” directory and search for “*bth*” (notice the star before bth). That will get you quite a few hits. Copy everything that has an .inf, .sys or .exe extension into a single folder (do not keep the folder structure; there is no need for .pnf files). Once you include the files from subdirectories (e.g. fsquirt.exe), you have everything you need. Do not worry about duplicate files; one copy of each file will do.

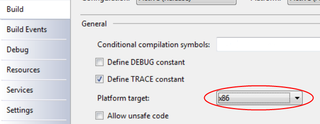

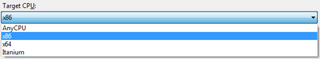
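If you would rather not pick the files by hand, the collection step can be scripted. What follows is only a rough sketch in Python, assuming Python is available on the Vista machine. The function name `collect_bluetooth_files` and the destination folder are my own inventions, not part of any Windows tool. It treats a file as a hit when its own name or any folder above it (inside the repository) contains “bth”, which is my reading of what the search shows.

```python
import shutil
from pathlib import Path

# Only these extensions are needed; .pnf files are skipped.
WANTED_EXTENSIONS = {".inf", ".sys", ".exe"}


def collect_bluetooth_files(repository, destination, pattern="bth"):
    """Copy matching driver files into one flat folder.

    Hypothetical helper: returns the sorted, lower-cased names of the
    files that were copied. Keep `destination` outside `repository`.
    """
    repository = Path(repository)
    destination = Path(destination)
    destination.mkdir(parents=True, exist_ok=True)

    copied = set()
    for path in repository.rglob("*"):
        if not path.is_file() or path.suffix.lower() not in WANTED_EXTENSIONS:
            continue
        # A file qualifies if its name or any enclosing folder contains "bth".
        parts = path.relative_to(repository).parts
        if not any(pattern in part.lower() for part in parts):
            continue
        name = path.name.lower()
        if name in copied:
            continue  # one copy of each file is enough
        shutil.copy2(path, destination / path.name)  # flat: no subfolders
        copied.add(name)
    return sorted(copied)
```

Calling `collect_bluetooth_files(r"C:\Windows\System32\DriverStore\FileRepository", r"C:\BthDrivers")` would then fill the (hypothetical) `C:\BthDrivers` folder. When the same file name shows up more than once, whichever copy is found first wins.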

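Now you need to edit all the .inf files. Open each one and change every occurrence of “NTamd64...1” to “NTamd64...3” (that is three literal periods, and there are two occurrences per file). The trailing number is the product type the driver targets: 1 means workstation and 3 means server, so this change lets Windows Server accept those files.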
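Editing a dozen files by hand gets boring, so here is a small Python sketch of the same replacement. It assumes the text to change is literally `NTamd64...1` with three periods, and that each file is either UTF-16 with a byte order mark or plain single-byte text. The function names are made up for this example. Work on the copied files only, never on the originals in the driver store.

```python
import codecs
import re
from pathlib import Path

# Matches the workstation decoration; the lookahead avoids touching a
# longer number such as "...10". Case is ignored, as .inf files are
# not case sensitive.
WORKSTATION_TARGET = re.compile(r"(NTamd64\.\.\.)1(?!\d)", re.IGNORECASE)


def patch_inf_text(text):
    """Return (patched_text, number_of_replacements)."""
    return WORKSTATION_TARGET.subn(r"\g<1>3", text)


def patch_inf_file(path):
    """Rewrite one .inf file in place and return the replacement count."""
    path = Path(path)
    raw = path.read_bytes()
    if raw.startswith(codecs.BOM_UTF16_LE):
        bom, encoding = codecs.BOM_UTF16_LE, "utf-16-le"
    else:
        # latin-1 maps every byte to one character, so unrelated bytes
        # survive the round trip unchanged.
        bom, encoding = b"", "latin-1"
    text = raw[len(bom):].decode(encoding)
    patched, count = patch_inf_text(text)
    if count:
        path.write_bytes(bom + patched.encode(encoding))
    return count


def patch_folder(folder):
    """Patch every .inf file in `folder`; return {file name: count}."""
    return {
        p.name: patch_inf_file(p)
        for p in Path(folder).iterdir()
        if p.is_file() and p.suffix.lower() == ".inf"
    }
```

Running `patch_folder(r"C:\BthDrivers")` (the folder name is again just an example) returns how many replacements were made in each file, which makes it easy to spot a file that did not get the expected two.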

You can now go to Windows Server 2008 and, when asked for Bluetooth drivers, just give it the path to the directory where all the work was done. The drivers will install as usual and you will have Bluetooth working.