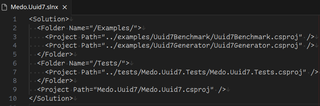

LocoNS

If one checks all the freeware stuff I made over the years, they might notice a theme. They usually solve a problem that seemingly only I have. And yes, this program is one of those too.

As many people do, I have most of my internal DNS resolution handled by mDNS. I used to have it done by my router, but over time I moved to encrypted DNS, and spinning that up internally seemed like overkill. So, I just rely on all devices running mDNS and everything getting resolved auto-magically. For devices that are not capable of handling mDNS themselves, I used to run Avahi on my main server. Avahi uses my hosts file, so I avoid having to distribute config to each machine. Except that Avahi doesn’t really understand my hosts file.

Part of the issue is having two different names for the same server. For example, I have a main server and its backup, each with a unique name (vilya and nenya). But I don’t use those names directly. I usually access the active one using a common name (ring) that I switch between them whenever I need to do some work. Usually ring points to the same IP as my main server (vilya). But, if I know I am going to do some work, I will redirect it to the backup server (nenya) in order to keep (read-only) access to all the family stuff. Once done, ring just moves back.

And this simple scenario is something Avahi specifically will not do. Avahi allows only one DNS name per IP, no exceptions. And that’s probably how it should be. But that’s not how I want it. So, I built LocoNS.

LocoNS is as dumb as mDNS servers get. By default, it listens on all available interfaces and uses the hosts file as its source of truth. If there are multiple names for an IP address (as is explicitly allowed in the hosts file), it will learn all of them. In addition, it listens to other mDNS traffic and remembers where things are. When a query comes in, LocoNS responds immediately.
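To make the “multiple names per IP” part concrete, here is a minimal Python sketch of such a name table. It is not LocoNS code (none is shown here); the class and method names (`NameTable`, `load_hosts`, `learn`, `lookup`) are hypothetical, as are the addresses. Two behaviors are assumptions of this sketch only: a query for `ring.local` matches a hosts entry `ring`, and hosts-file entries take precedence over learned ones.

```python
import ipaddress
from collections import defaultdict


class NameTable:
    """Hypothetical name table: hosts-file entries plus names learned from mDNS traffic."""

    def __init__(self):
        # name -> set of addresses; a set lets one name carry several addresses
        self._static = defaultdict(set)
        self._learned = defaultdict(set)

    @staticmethod
    def _key(name):
        # Assumption: "ring.local" in a query should match "ring" in the hosts file.
        name = name.lower().rstrip(".")
        return name[: -len(".local")] if name.endswith(".local") else name

    def load_hosts(self, text):
        for line in text.splitlines():
            line = line.split("#", 1)[0].strip()  # drop comments and blank lines
            if not line:
                continue
            fields = line.split()
            try:
                addr = ipaddress.ip_address(fields[0])
            except ValueError:
                continue  # skip malformed lines instead of failing
            # Every name on the line is kept, not just the first one.
            for name in fields[1:]:
                self._static[self._key(name)].add(addr)

    def learn(self, name, address):
        # Remember an address seen in another device's mDNS answer.
        self._learned[self._key(name)].add(ipaddress.ip_address(address))

    def lookup(self, name, want_ipv6=False):
        key = self._key(name)
        # Assumption: hosts-file entries win over learned ones.
        addrs = self._static.get(key) or self._learned.get(key) or set()
        version = 6 if want_ipv6 else 4
        return sorted(str(a) for a in addrs if a.version == version)


table = NameTable()
table.load_hosts("""
192.168.1.10  vilya ring   # main server, currently also "ring"
192.168.1.11  nenya
fd00::10      vilya ring
""")
table.learn("printer.local", "192.168.1.50")
print(table.lookup("ring.local"))        # ['192.168.1.10']
print(table.lookup("ring.local", True))  # ['fd00::10']
print(table.lookup("printer.local"))     # ['192.168.1.50']
```

Moving ring to the backup server is then just a matter of editing the hosts line and reloading; nothing else in the table changes.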

The whole application is set up so it works with an unmodified hosts file, and no special configuration should be necessary. Of course, you can still adjust its behavior. For example, you can define which interfaces you want to use, whether you want it to “learn” from other mDNS servers at all, or even whether you want to use the hosts file to begin with. But configuration is intentionally kept simple.

And no, LocoNS is not a full mDNS solution. To start with, it only supports A and AAAA records. It is intended only as a supporting element that solves the one issue mDNS usually doesn’t solve for me.
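Limiting a responder to A and AAAA records mostly comes down to looking at the question type in each incoming query. The Python sketch below shows one way to do that at the packet level, following the DNS wire format from RFC 1035 and the mDNS “unicast response” bit from RFC 6762. It is an illustration under those assumptions, not how LocoNS is written; `read_name`, `address_questions`, and `build_query` are hypothetical helpers.

```python
import struct

QTYPE_A = 1
QTYPE_AAAA = 28


def read_name(packet, offset):
    """Decode a DNS name at offset; return (name, offset just past the name)."""
    labels = []
    end = None  # where parsing resumes after following a compression pointer
    jumps = 0
    while True:
        length = packet[offset]
        if (length & 0xC0) == 0xC0:  # compression pointer (RFC 1035, 4.1.4)
            if end is None:
                end = offset + 2
            offset = ((length & 0x3F) << 8) | packet[offset + 1]
            jumps += 1
            if jumps > 20:
                raise ValueError("compression pointer loop")
            continue
        offset += 1
        if length == 0:
            break
        labels.append(packet[offset:offset + length].decode("utf-8"))
        offset += length
    return ".".join(labels), (end if end is not None else offset)


def address_questions(packet):
    """Return (name, qtype, unicast_response) for each A/AAAA question in a query."""
    _id, flags, qdcount = struct.unpack_from("!HHH", packet, 0)
    if flags & 0x8000:  # QR bit set: this is a response, not a query
        return []
    offset = 12  # questions start right after the fixed 12-byte header
    wanted = []
    for _ in range(qdcount):
        name, offset = read_name(packet, offset)
        qtype, qclass = struct.unpack_from("!HH", packet, offset)
        offset += 4
        if qtype in (QTYPE_A, QTYPE_AAAA):
            # mDNS reuses the top bit of QCLASS as the "unicast response" flag (RFC 6762, 5.4)
            wanted.append((name, qtype, bool(qclass & 0x8000)))
    return wanted


def build_query(questions):
    """Build a minimal uncompressed query from (name, qtype) pairs, for testing."""
    header = struct.pack("!HHHHHH", 0, 0, len(questions), 0, 0, 0)
    body = b""
    for name, qtype in questions:
        for label in name.split("."):
            body += bytes([len(label)]) + label.encode("utf-8")
        body += b"\x00" + struct.pack("!HH", qtype, 1)
    return header + body


query = build_query([("ring.local", QTYPE_A), ("ring.local", 16)])  # 16 = TXT
print(address_questions(query))  # [('ring.local', 1, False)]
```

Everything other than A and AAAA (TXT, SRV, PTR, and so on) is simply ignored, which is what keeps a responder like this small.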

If this piqued your curiosity, downloads are available on its page. You can get an AppImage, a Debian package, or a Docker image. And yes, I know there is no Windows download. While LocoNS will work under Windows, I am just too lazy to make it into a service. I guess I might, if enough people scream at me. Chances are, that won’t happen.

If this sounds like a problem you also need solved, do check it out.