Testing Native ZFS Encryption Speed (Ubuntu 22.04)

[2022-10-30: There is a newer version of this post]

With the new Ubuntu LTS release, it was time to repeat my ZFS encryption testing. Is ZFS speed better, worse, or the same?

I won’t go into the test procedure much since I explained it back when I did it the first time. Outside of really minor differences in the exact disk size, the procedure didn’t change. What did change is that I am not doing it on a virtual machine anymore.

I did these tests on a Framework laptop with an i5-1135G7 processor and 32GB of RAM. It’s a bit more consistent setup than the virtual machine I used before. Due to this change, the numbers are not really comparable to the ones from previous tests, but that should be fine - our main interest is in the relative numbers.

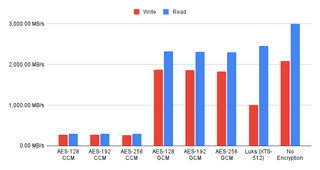

First of all, we can see that CCM encryption is not worth a dime if you have any AES-capable processor. The difference between CCM and every other encryption mode I tested is huge, with CCM being 5-6 times slower. Only once I turned off AES support in the BIOS did CCM make even minimal sense, as its standing relative to the others improved. And no, it doesn’t suck less - it’s just that all the other encryption methods suck more.
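
If you are not sure whether your processor exposes AES instructions, a quick check is possible on Linux. The snippet below is a minimal sketch, assuming an x86 machine where `/proc/cpuinfo` has a `flags` line (other architectures name that line differently); the `has_aes_flag` helper is my own illustrative name, not something from any ZFS tooling.

```python
def has_aes_flag(cpuinfo_text: str) -> bool:
    """Return True if any 'flags' line in /proc/cpuinfo-style text lists 'aes'."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        # Assumption: x86 Linux, where CPU features are listed under the "flags" key.
        if key.strip() == "flags" and "aes" in value.split():
            return True
    return False


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo", encoding="utf-8") as f:
            print("AES instructions reported:", has_aes_flag(f.read()))
    except OSError:
        print("Could not read /proc/cpuinfo (probably not Linux)")
```

If this reports no AES flag on a recent processor, the feature may simply be disabled in the BIOS, as it was in my comparison run.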

Assuming our machine has a processor made in the last 5 or so years, the native ZFS GCM encryption becomes the clear winner. Yes, the 128-bit variant is a bit faster than the 256-bit one (as expected), but the difference is small enough that it probably won’t matter. What will matter is that any GCM variant wins over LUKS. Yes, reads are slightly faster using standard XTS LUKS, but writes clearly favor the native ZFS encryption.
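
For anybody wanting to try the native variants themselves, here is a rough sketch of how the pool creation commands could be generated. It only builds and prints the commands, it doesn't run anything. The pool name, the device path, and the exact list of variants are placeholders I picked for illustration and not necessarily what I used in my tests; the `encryption`, `keyformat`, and `keylocation` properties themselves are standard OpenZFS ones.

```python
import shlex

# Assumption: illustrative set of variants, not necessarily the exact ones benchmarked.
VARIANTS = ["off", "aes-128-ccm", "aes-256-ccm", "aes-128-gcm", "aes-256-gcm"]


def zpool_create_command(pool: str, device: str, encryption: str) -> list[str]:
    """Build (but do not run) a 'zpool create' command for one encryption variant."""
    cmd = ["zpool", "create"]
    if encryption != "off":
        # Root dataset properties are passed with -O; the passphrase is prompted for.
        cmd += [
            "-O", f"encryption={encryption}",
            "-O", "keyformat=passphrase",
            "-O", "keylocation=prompt",
        ]
    cmd += [pool, device]
    return cmd


if __name__ == "__main__":
    for variant in VARIANTS:
        # "TestPool" and "/dev/sdX" are hypothetical placeholders.
        print(shlex.join(zpool_create_command("TestPool", "/dev/sdX", variant)))
```

The LUKS case is not covered here, since that one goes through `cryptsetup` first with a plain unencrypted pool on top.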

Unless you really need the ultimate cryptographic opacity that LUKS encryption brings, native ZFS encryption using GCM is still the way to go. And yes, even though GCM modes are performant, we still lose about 10-15% on writes and about 30% on reads when compared to no encryption at all. Mind you, synthetic tests like these show the worst case, so the real-world performance loss should be much lower.
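
To be clear about how a loss percentage like this is meant, the sketch below shows the arithmetic relative to the unencrypted baseline. The throughput numbers in it are made-up placeholders chosen to give round results, not my measurements, and `relative_loss` is just an illustrative helper name.

```python
def relative_loss(baseline_mbps: float, encrypted_mbps: float) -> float:
    """Return the throughput loss versus the unencrypted baseline, in percent."""
    if baseline_mbps <= 0:
        raise ValueError("baseline throughput must be positive")
    return (baseline_mbps - encrypted_mbps) * 100.0 / baseline_mbps


if __name__ == "__main__":
    # Placeholder numbers for illustration only, NOT measured values.
    baseline_read, encrypted_read = 1000.0, 700.0
    baseline_write, encrypted_write = 1000.0, 880.0
    print(f"read loss:  {relative_loss(baseline_read, encrypted_read):.1f}%")
    print(f"write loss: {relative_loss(baseline_write, encrypted_write):.1f}%")
```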

Make of it what you will, but I’ll keep encrypting my drives. They’re plenty fast.

PS: You can take a peek at the raw data if you’re so inclined.